1 Introduction

The purpose of this article is to summarise the six years of development of the mobile music meta-environment UrMus. The conceptualisation and development of UrMus started in the early Summer of 2009 and is still ongoing. In this article, we discuss the underlying design philosophies of UrMus and how its overall design evolved. We focus on providing a broad overview of the whole system as it stands today while pointing to important areas open to further advances.

1.1 Mobile Platforms

Mobile platforms are still in flux today. When UrMus was originally designed, tablets had not yet entered the commodity market, and smart phones like the iPhone (at the time also smaller than contemporary models) were the only form factor. This made a number of concerns regarding mobile devices as music platforms very pressing. In particular, screen real estate is extremely precious and difficult to manage, and multi-touch, which now handles most of the input, competes for that same screen real estate. Even today we see the handheld mobile device in the form factor of a phone as an important performance platform, because it allows agile single-handed performance and is particularly suited to motion-based, non-screen interactions.

Since then, form factors have diversified. For tablet devices, a hybridisation is taking place that connects traditional laptops with tablets. The precise impact of changes in form factor is under-researched, although some experimental results can be found in Qi Yang’s dissertation (Yang, 2015). This work suggests that multi-touch interactions are complicated and that it is not straightforward to relate device size to interaction performance. This is potentially due to a complex interplay between ballistic targeting speeds (Fitts’ law type tasks), which get slower on larger devices, and visual and occlusion-related problems, which slow interaction on smaller devices. For very large surfaces, one can also assume ergonomic restrictions (limb extension, for example) to play a role. We will discuss this line of work in more detail in section 6.
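One way to see why device size alone is a poor predictor is the Shannon formulation of Fitts’ law, a standard HCI model (not taken from Yang’s study). Here MT is movement time, D the distance to the target, W the target width, and a and b empirically fitted constants. The numbers are illustrative assumptions, not measurements: the target keeps the same physical width while the travel distance grows with the screen.

```latex
\[
  MT = a + b \log_2\!\left(\frac{D}{W} + 1\right)
\]
% Illustrative values only (not measured), fixed target width W = 10 mm:
%   small screen, D = 30 mm:  log2(30/10 + 1) = log2(4) = 2 bits
%   large screen, D = 70 mm:  log2(70/10 + 1) = log2(8) = 3 bits
\[
  MT_{\text{large}} - MT_{\text{small}} = b\,(3 - 2) = b
\]
```

If instead the target width grows in proportion to the distance, D/W and hence the predicted time stay unchanged, so any size effect on targeting depends on how an interface scales its targets.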

A main focus of UrMus’ original design is to provide comprehensive yet completely open and programmable support for the devices’ input and output capabilities so that these can be exploited by the artist (Essl, 2010b; Essl & Müller, 2010). This means supporting a wide range of input capabilities, from multi-touch through motion sensors to cameras and microphones. Current mobile devices provide a rather expansive design space that can be used to create musical performance interactions (Essl & Rohs, 2007, 2009, pp. 197–207).

2 Design Philosophy

When development of UrMus started, no general-purpose programmable music synthesis environments for mobile music existed. The iPhone had just appeared on the market and Google’s Android platform had yet to be released. Hence, it was an open question how best to address the characteristics of mobile devices at the time. Existing programmable music environments were designed for a desktop paradigm, and it was not clear that they would work well under the new device constraints. We saw this as an opportunity to start from a clean slate and design an environment from scratch. Ultimately, numerous factors informed the initial design as well as subsequent extensions and modifications.

2.1 The Notion of a Meta-environment

When the first paper on UrMus was published, it was branded a meta-environment. A meta-environment is an environment that is flexible with regard to its representation to such an extent that it can be made to look like a wide range of environments that already exist or can be conceived. This means that the look-and-feel is not locked into one particular representation.

In the realm of music programming and sound synthesis environments, the question of representation is usually not explicitly asked. The developer usually chooses a particular representation and rigidly links it to the functionality of the environment. For example, the Max/MSP/PureData family of dataflow programming environments use a dataflow-chart graphical representation. Programming languages like SuperCollider and ChucK operate on a textual representation that defines one choice of textual programming language syntax(1) and a select few editing representations that are closely related to the textual representation and syntactic choice. One can consider the initial choices of representation to be paradigmatic in the sense that they adhere to a particular view of representations. In the context of this article, we call an environment non-paradigmatic if it leaves the choice of representation or paradigm to the user to a considerable extent. Hence a meta-environment as defined here is a non-paradigmatic environment.

Many traditional environments start off with a set paradigm but later add extensions that allow diversification of representations. For example, while Max/MSP and Pure Data were initially conceived as graphical languages, objects like Lua (Wakefield & Smith, 2007, pp. 1–4) offer the embedding of text-based programming in the environment. And while ChucK (Wang & Cook, 2004, pp. 812–815) and SuperCollider (McCartney, 2002, pp. 61–68) were initially conceived as text-based, various extensions have added graphical representations to them (Salazar et al., 2006, pp. 63–66). It is important to note, however, that this has not led to the ability to completely change the starting paradigm.

Flexibility in representation is related to a high degree of modifiability. This is not a new concept outside the realm of music environments. For example, the UNIX-based text editor Emacs was developed in Lisp and also maintained access to a Lisp interpreter, which allowed deep and substantial later modifications to the editor’s functions and aspects of its representation. Some environments are designed to serve as a kind of development system that allows the design of a wide range of outcomes. Adobe Flash is one example.

Are meta-environments a good idea? This question remains unanswered. However, in the case of UrMus, the focus on a meta-structure for choosing representations is informed by research suggesting that no single representation works for everybody and that one should seek to match representations with cognitive style. Eaglestone and co-workers studied the relationship of cognitive styles to preferences in music environments. They found evidence for multiple cognitive styles across composers, which in turn dictate how composers interact with synthesis software. From this, they suggest that one should design systems with these cognitive styles in mind (Eaglestone et al., 2007, pp. 466–473). However, this raises an important challenge: what is the right system design for an individual composer? In the traditional design of environments, the author of the environment picks the representation and hence makes presumptions about what will likely work for the user. The idea driving a meta-environment is to allow individuals to create a representation that works well with their cognitive style. This innovation, however, comes at a cost: some of the burden of matching the representation is shifted onto the user, raising the cost of entry. Despite this additional burden, the goal of having UrMus be a meta-environment was an important original design constraint (Essl, 2010b).

2.2 Openness, Flexibility and Accessibility

A persistent question for any user-bound system is its level of entry and usability, which in turn trades off against the complexity and flexibility of the system. As quoted by Geiger (2005, pp. 120–136), computer music pioneer Max Mathews already described this struggle:

He (the user) would like to have a very powerful and flexible language in which he can specify any sequence of sounds. At the same time he would like a very simple language in which much can be said in a few words.

This is particularly relevant for creative technologies that try not to limit the creative vocabulary that an artist can choose. Philosophically, the intent to liberate notions of music beyond set stylistic presumptions was strongly advocated by Edgard Varèse (Varèse & Wen-Chung, 1966, pp. 11–19), from whom we also received the notion that music can be viewed as “organised sound”. This notion has of course been tremendously influential for much of electronic and experimental music over the past century (see, for example, Risset, 2004, pp. 27–54). In the author’s view, this has specific implications for a music environment that does not intend to impose a particular style or notion of music beyond the openness that Varèse envisioned.

We view this vision of openness as extending beyond purely musical concerns. Contemporary mobile music performance is rarely an expression of pure sound. Rather, visual and gestural aspects regularly play an important synergetic role with sonic expression. Hence we view the ability to compose the visual and interaction aspects of mobile performance as a critical, interrelated part of the music environment.

For our purposes, we will consider full programmability to be the most flexibility any computational system can achieve. We will consider it to be completely flexible if through programmability, any relationship between device-supported input modalities and outputs can be achieved in principle. A fully programmable system, so defined, will have expansive creative flexibility.

An important difference from other environments is that general programmability in the case of UrMus includes interaction paradigms and representations. This is relevant because it has been argued that the way the interface is represented to the user itself has musical performance characteristics. This is a specific instance of viewing an environment’s interface as a musical creation and performance interface, turning it into a kind of musical instrument. Fiebrink et al. (2007, pp. 164–167) summarise this notion as follows: “One driving philosophy of the NIME community is that controller design greatly influences the sort of music one can make”. From this, we argue that rigid representations make a choice for the artist using the environment, and our philosophy looks to return that choice to the artist.

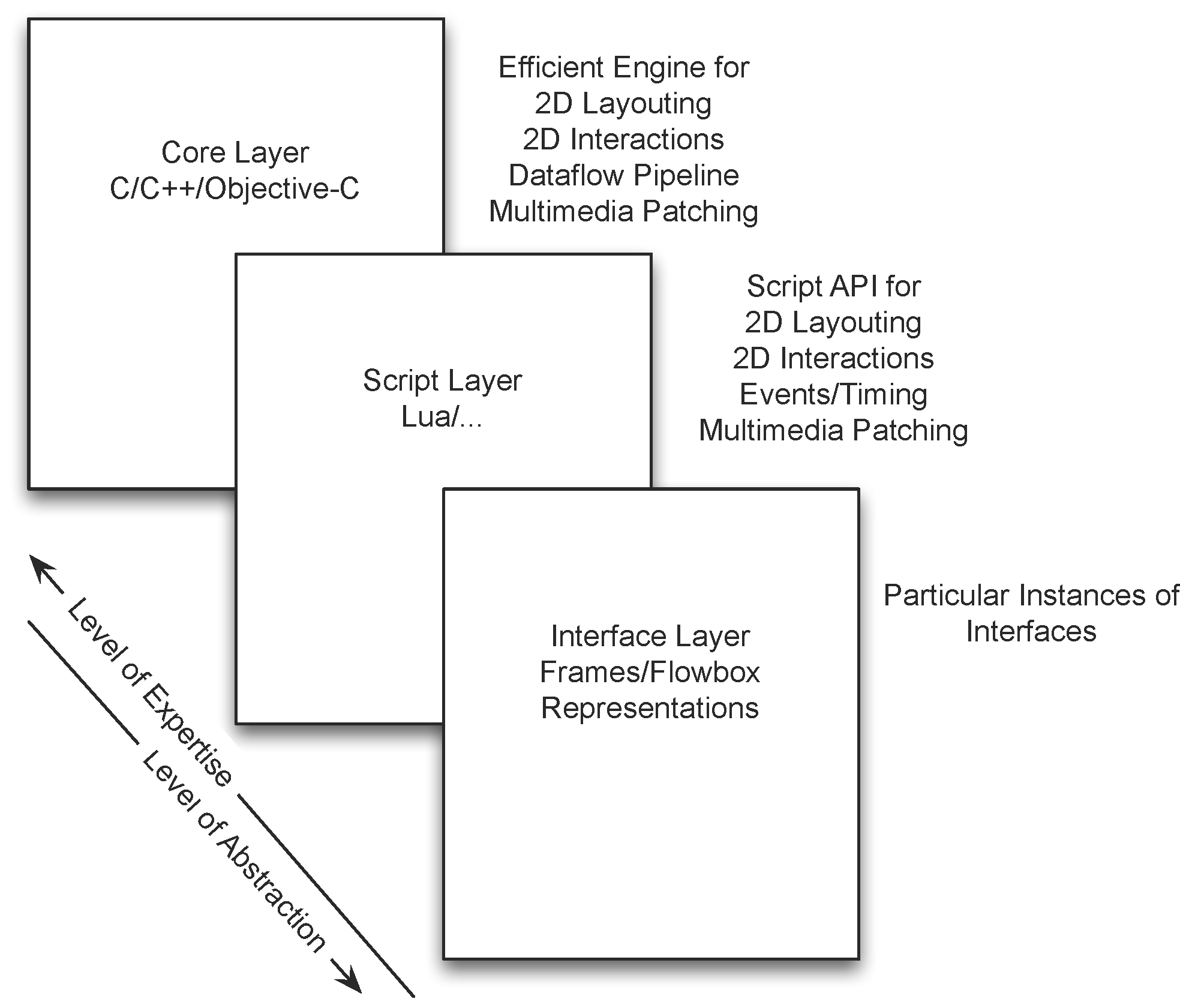

While some creative artists are proficient programmers, computational literacy varies widely among the artists who want to engage creatively with mobile technology. Hence a gradation that supports a wide range of expertise is desirable. For this reason, UrMus supports a multi-layered design following Shneiderman (2003, pp. 1–8), who recommends systems that provide what he calls “universal usability” by having multiple layers, which provide increased simplification and abstraction for inexperienced end users and increased access to raw programmability for computationally literate users. UrMus’ core design supports three broad layers (compare Fig. 1):

- The lowest layer is the realisation of UrMus itself in C++. This layer is already designed to support extensions of the system and hence encourages expert programmers to leverage the full computational capabilities of the device within UrMus. In particular, the addition of new API elements in Lua, as well as adding new dataflow elements (called FlowBoxes) is structured in a way to be modular and accessible by external programmers.

- The medium layer is the support of a scripting language named Lua (Ierusalimschy, 2006), which has access to higher-level abstractions in the form of an API covering many device capabilities. The design of the API follows current trends in intermediate programming found in systems such as Processing (Reas & Fry, 2007) or in computer game modding (such as World of Warcraft). In fact, UrMus’ 2-D graphics rendering API closely mimics that of Processing to allow transferability of creative programs developed in Processing. It also has elements of World of Warcraft’s Lua API. In general, the API is designed to give maximum programming flexibility while reducing programming complexity so as to make actions as immediate as possible. For example, drawing a graphical rectangle is reduced to drawing the rectangle and perhaps choosing colour and fill. In C++ the same requires substantially more complex programming in OpenGL ES.

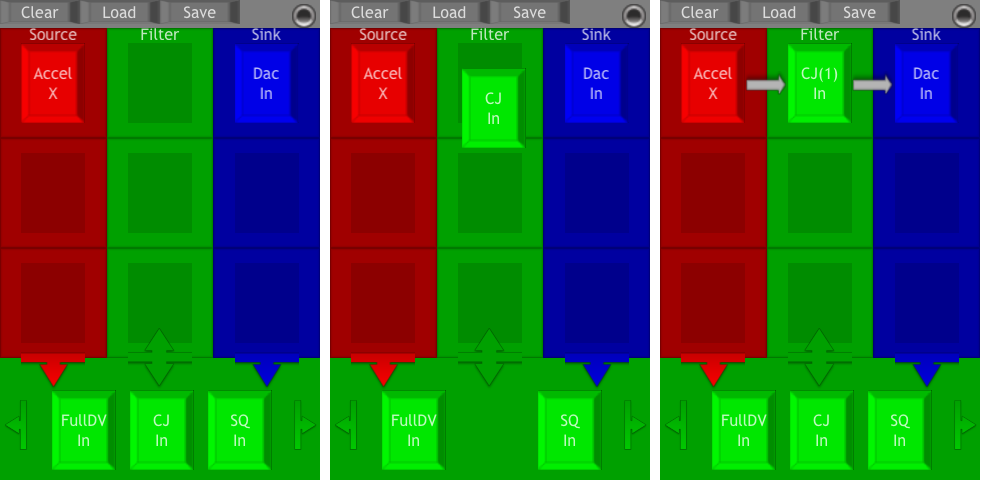

- The highest layer is a realisation of interactions implemented in Lua. This can range from an extremely simple performance interface linked to one fixed sound synthesis algorithm to complex high-level graphical programming systems. UrMus comes with a default interface for graphical patching (see Fig. 2) that provides substantial flexibility in mapping device sensor input to sound synthesis algorithms. At the same time, because the interaction is reduced to mere patching, sounding results can be achieved within seconds; this interface has been used with primary school children.
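As a rough illustration of what the middle layer buys, the Python sketch below hides a hypothetical low-level backend (standing in for C++/OpenGL ES) behind Processing-style `fill` and `rect` calls. Only those two call names echo Processing; `LowLevelBackend`, `Canvas`, and the recorded command tuples are invented for illustration and are not UrMus’ Lua API.

```python
from dataclasses import dataclass, field


@dataclass
class LowLevelBackend:
    """Hypothetical stand-in for a C++/OpenGL ES layer: it only records
    the primitive commands a real renderer would issue."""
    commands: list = field(default_factory=list)

    def set_color(self, rgba):
        self.commands.append(("set_color", rgba))

    def upload_vertices(self, vertices):
        self.commands.append(("upload_vertices", tuple(vertices)))

    def draw_triangles(self, count):
        self.commands.append(("draw_triangles", count))


class Canvas:
    """Processing-style immediate API: one call per visible action."""

    def __init__(self, backend):
        self._backend = backend
        self._fill = (1.0, 1.0, 1.0, 1.0)

    def fill(self, r, g, b, a=1.0):
        # Remember the fill colour for subsequent shapes.
        self._fill = (r, g, b, a)

    def rect(self, x, y, w, h):
        # Expand one rectangle into two triangles (six vertices).
        x2, y2 = x + w, y + h
        vertices = [(x, y), (x2, y), (x2, y2),
                    (x, y), (x2, y2), (x, y2)]
        self._backend.set_color(self._fill)
        self._backend.upload_vertices(vertices)
        self._backend.draw_triangles(2)


backend = LowLevelBackend()
canvas = Canvas(backend)
canvas.fill(1.0, 0.0, 0.0)
canvas.rect(10, 20, 100, 50)  # two user calls become three backend commands
```

The point of the design is that the user-facing layer states intent (a red rectangle) while vertex layout and draw calls stay in the layer below.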

It is important to note that this gradation is a pre-designed, rough layering and that additional layers are both present and can be added. For example, at the C++ level, standardised interfaces allow users with some basic knowledge of C to add working synthesis modules to the Lua API. At the Lua level, user-generated libraries have simplified some programming tasks. A particularly important sub-layer at the lowest level is a multi-rate dataflow engine. This design allows UrMus to support not only procedural programming via Lua but also dataflow programming environments realised in Lua.
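The general idea of a multi-rate dataflow engine can be sketched in a few lines of Python: control-rate nodes update once per block, audio-rate nodes once per sample, and connections are plain references that can be re-patched while running. The class names, the two-rate split, and the block scheduling are assumptions for illustration; UrSound’s actual C++ engine is not reproduced here.

```python
import math


class ControlSource:
    """Control-rate node: one new value per block (e.g. a sensor reading)."""

    def __init__(self, values):
        self._values = iter(values)
        self.current = 0.0

    def tick_block(self):
        # Keep the last value once the input is exhausted.
        self.current = next(self._values, self.current)


class SineOsc:
    """Audio-rate node: one sample per tick, with its frequency read from
    whatever control node is currently patched to its input."""

    def __init__(self, sample_rate):
        self.sample_rate = sample_rate
        self.phase = 0.0
        self.freq_input = None  # patched at run time

    def tick_sample(self):
        freq = self.freq_input.current if self.freq_input else 0.0
        out = math.sin(2.0 * math.pi * self.phase)
        self.phase = (self.phase + freq / self.sample_rate) % 1.0
        return out


def render(osc, control, n_blocks, block_size):
    """Multi-rate schedule: control nodes once per block, audio nodes
    once per sample, inside a single engine loop."""
    samples = []
    for _ in range(n_blocks):
        control.tick_block()
        for _ in range(block_size):
            samples.append(osc.tick_sample())
    return samples


sensor = ControlSource([440.0, 880.0])
osc = SineOsc(sample_rate=44100)
osc.freq_input = sensor  # the "patch" connection
out = render(osc, sensor, n_blocks=2, block_size=64)
```

Because `freq_input` is just a reference, assigning a different control node between blocks swaps the mapping without stopping the audio loop.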

The layering supports another desirable design characteristic: clean separation and “pluggable” interfacing (Simone et al., 1995, pp. 44–54; Villar & Gellersen, 2007, pp. 49–56). If an interface can perform with many different synthesis patches, there should be a clean interface between them so that either the interface or the sound synthesis can be hot-swapped at any time. The layered design, as well as the separation of the dataflow engine from the graphical rendering engine in UrMus, achieves this design goal.

3 Design Goals

3.1 Liveness and Performativity

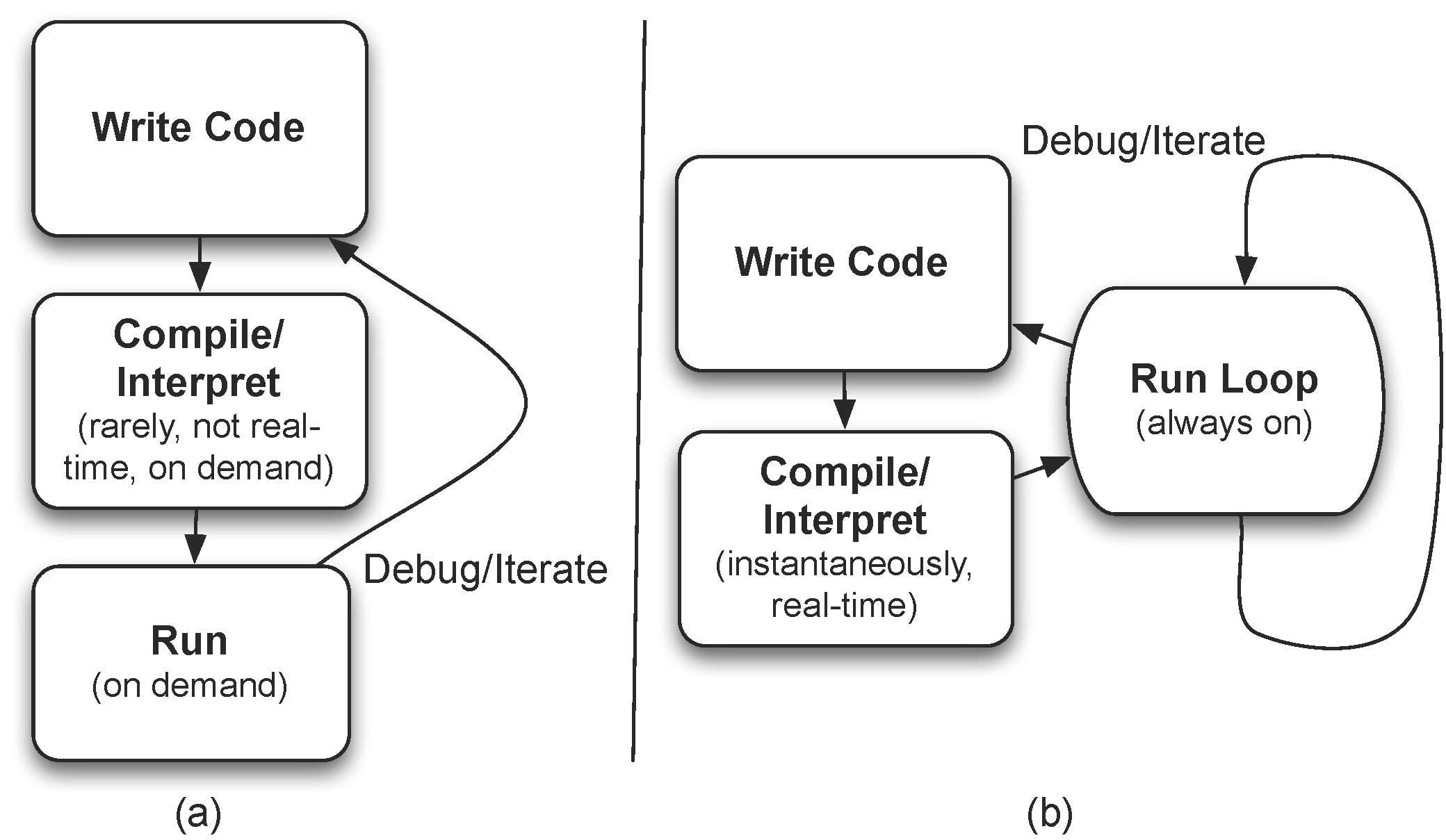

Music is often a performing art form, and one of the most attractive features of mobile commodity devices is that their form factor readily supports performative use. Hence the support of performativity is a critical part of a mobile music system and is central to the design of UrMus. Another notion that is important in this context is liveness. While debates about the notion are ongoing (Magnusson, 2014, pp. 8–16), it is probably sufficient for our purpose to think of it as the interactivity a system provides that allows performative forms of expression. For example, a “live coding” system is a system that enables interactive forms of programming. Some authors have described this concept as “on-the-fly programming” (Wang & Cook, 2004, pp. 812–815; Essl, 2009). Liveness is realised in actual systems by allowing a program to be dynamically and interactively modified while it is running.

Support of liveness impacts UrMus’ design in numerous ways:

- On the lowest level, it requires the design of highly efficient realisations of most supported systems to push relevant operations into real-time range.

- On the intermediary level, it in part drove the adoption of Lua, for two reasons. First, Lua was at the time the fastest embeddable scripting language available (Wrenholt, 2007). Second, Lua is well suited to support what we have called design-by-moulding (Essl, 2009). The idea here is that live programming is hampered if large portions of code have to be written that lead to an appreciable outcome only after completion. This style of programming reduces the perceived liveness of the interaction. Hence a system should support and perhaps encourage highly differential and iterative programming strategies. Rather than rebuilding the full run-time state from scratch, the run-time state is gradually modified by running small segments of code. Lua’s persistent run-time state naturally supports this. Fig. 3 shows a conventional coding work-cycle compared to a live-coding work-cycle as realised in UrMus via Lua and a persistent run state.

- On the highest layer, this means that building graphical relationships should be possible with a minimum of user knowledge and intervention. This requirement cascades down into lower levels and significantly impacts the design of the sound rendering engine of UrMus at the C++ level. We will be discussing the support of on-the-fly patching in section 5.4.

- Finally, liveness also is relevant when supporting collaborative performances. We will be discussing this aspect in section 8.
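The design-by-moulding cycle can be illustrated by analogy in Python, where `exec` against a persistent dictionary plays the role that loading a Lua chunk into a long-lived Lua state plays in UrMus. This is a sketch under that analogy, not UrMus code; `LiveSession` is a hypothetical name.

```python
class LiveSession:
    """Persistent run-time state that small code snippets modify in place,
    loosely mirroring a Lua state kept alive between chunks."""

    def __init__(self):
        self.state = {}  # globals shared by every snippet

    def run(self, snippet):
        # Execute a small chunk against the existing state instead of
        # rebuilding the whole program from scratch.
        exec(snippet, self.state)

    def get(self, name):
        return self.state.get(name)


session = LiveSession()
session.run("tempo = 120\ndef beat_ms(): return 60000 / tempo")
session.run("tempo = 150")  # mould one value; beat_ms survives unchanged
```

After the second snippet, `session.get("beat_ms")()` returns `400.0`: the function defined earlier sees the new tempo because both snippets share one global state.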

3.2 Programming, Remote Programming and Collaborative Programming

We have already mentioned that supporting and advancing on-device programming is one strategy used to leverage the creative power of mobile devices. Historically, this was also important because it departed from the standard programming paradigm for mobile devices, which is remote: mobile programs are developed on a remote machine (such as a laptop or desktop computer), compiled there, and then installed as apps on the mobile device. In many ways, this is attractive, as the larger screen and keyboard allow drastically improved programming experiences. However, a development cycle conceived this way is not well suited for performative use. There are further restrictions that accompany remote programming. Typical app development introduces provisioning processes that add a layer of security but further delay the time between remote programming and on-device outcome. Finally, provisioning meant that some devices could only be programmed from a restricted set of remote platforms (iOS development required Xcode, which is only available for macOS), hence limiting participation.

In UrMus, Lua serves as a device-independent abstraction. UrMus’ Lua API is not bound by platform specifics and hence allows transcending the silos and monopolies created by mobile device providers and their operating systems. For this reason, UrMus’ design supports remote programming at the Lua level but not below it. While this was not part of the initial design of UrMus (Essl, 2010b), it was the first major addition after its initial release, driven by the overwhelming need to support collaborative programming for classroom use.

UrMus supports remote collaborative programming via a web-based editing environment that is served by UrMus from the mobile device itself. UrMus launches a web service that provides a JavaScript-based editing environment; Lua code submitted from this editor is interpreted and run on the device, supporting a differential design-by-moulding paradigm.
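A minimal Python sketch of this pattern, assuming plain HTTP POST with the code as the request body and the printed output as the reply. The endpoint, the use of Python instead of Lua, and the global lock are all assumptions rather than UrMus’ actual protocol. Executing code received over the network is only acceptable here because the sketch binds to localhost; a real deployment needs access control.

```python
import contextlib
import io
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

DEVICE_STATE = {}  # persistent run-time state on the "device"
STATE_LOCK = threading.Lock()


class CodeHandler(BaseHTTPRequestHandler):
    """Receives code from a browser-based editor via HTTP POST, runs it
    against the persistent state and replies with whatever it printed."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        code = self.rfile.read(length).decode("utf-8")
        output = io.StringIO()
        status = 200
        with STATE_LOCK, contextlib.redirect_stdout(output):
            try:
                exec(code, DEVICE_STATE)
            except Exception as exc:  # report errors back to the editor
                status = 400
                print(f"error: {exc}")
        body = output.getvalue().encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # keep the console quiet


def start_server(port=0):
    """Serve on localhost in a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), CodeHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def post_code(url, code):
    """Client side: what the browser editor would send, here via urllib."""
    with urllib.request.urlopen(url, data=code.encode("utf-8")) as reply:
        return reply.read().decode("utf-8")
```

Posting `x = 41` and then `print(x + 1)` returns `"42\n"`, because both requests modify the same persistent state, which is what keeps remote editing compatible with design-by-moulding.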

The presence of remote programming on a device that itself is programmable and performative immediately invites the potential of collaborative live programming. That is, a user on the device can perform actions, while code is written remotely on the same device. Hence the interface and capabilities dynamically change and remote programmer and mobile user collaborate in creating an outcome (Lee & Essl, 2013, pp. 28–34). One can conceive of multiple programmers writing code on one or more devices. Yet, collaboration brings its own set of challenges. If multiple programmers modify the same run-time state, conflicts can arise. To address these, we developed mechanisms to isolate state spaces as well as merge them, and we also developed variable views with high degrees of liveness to allow collaborative programmers to observe dynamically changing state spaces (Lee & Essl, 2014, pp. 263–268).
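A toy Python sketch of isolating and merging state spaces follows. It assumes a simple per-variable version counter to detect conflicts; the class, its methods, and this conflict policy are hypothetical and are not the mechanism described by Lee and Essl (2014).

```python
class SharedRuntime:
    """Each programmer edits an isolated sandbox layered over a shared
    state; merging publishes changes and reports variables that someone
    else changed since this programmer last synchronised."""

    def __init__(self):
        self.shared = {}
        self.versions = {}   # variable name -> version counter
        self.sandboxes = {}  # programmer -> (pending changes, versions seen)

    def join(self, who):
        self.sandboxes[who] = ({}, dict(self.versions))

    def set(self, who, name, value):
        pending, _ = self.sandboxes[who]
        pending[name] = value

    def view(self, who, name):
        # A programmer sees their own pending change first, then shared state.
        pending, _ = self.sandboxes[who]
        return pending.get(name, self.shared.get(name))

    def merge(self, who):
        pending, seen = self.sandboxes[who]
        conflicts = [n for n in pending
                     if self.versions.get(n, 0) != seen.get(n, 0)]
        for name, value in pending.items():
            if name not in conflicts:
                self.shared[name] = value
                self.versions[name] = self.versions.get(name, 0) + 1
        # Keep conflicting edits pending; a repeated merge will apply them.
        self.sandboxes[who] = ({n: pending[n] for n in conflicts},
                               dict(self.versions))
        return conflicts


rt = SharedRuntime()
rt.join("alice")
rt.join("bob")
rt.set("alice", "tempo", 120)
rt.set("bob", "tempo", 90)
rt.merge("alice")  # returns [] and publishes tempo = 120
rt.merge("bob")    # returns ["tempo"]: Bob must see Alice's change first
```

Reporting the conflict instead of silently overwriting gives the second programmer a chance to inspect the changed state before deciding to merge again.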

3.3 Networks and Network Participation

Within UrMus, supporting network capabilities is of critical importance. Networks provide opportunities for remote collaboration, but also for forms of remote data transport that can themselves support a range of collaborative performance types or simply attractive technical realisations. Networks are the gateway to what has been branded big data, as well as to scalable computational capabilities such as cloud computing. Historically, universal network access was provided at the level of network protocols such as TCP/IP or UDP. Today, higher-level protocols provide such rich access to networking capabilities that it is no longer clear that data-level protocols are the most suitable for performative use. Control-level networking for musical performance is well supported by the Open Sound Control (OSC) protocol, which typically runs on top of UDP. Data- and service-centric networking is widely supported via URL-based HTTP transfer. For this reason, UrMus currently supports OSC and URL-based HTTP for network transfer at the Lua level. The interface to the web-based Lua editor also uses standard HTTP.
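To show how lightweight OSC is on top of UDP, here is a Python sketch of an OSC 1.0 message encoder and sender supporting int32, float32, and string arguments. It follows the published OSC 1.0 encoding rules but is not UrMus’ implementation; the address `/tempo` in the example is hypothetical.

```python
import socket
import struct


def _osc_string(text):
    """OSC-string: ASCII bytes, a terminating NUL, then NUL padding
    up to a multiple of four bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Encode an OSC 1.0 message with int32 ('i'), float32 ('f') and
    string ('s') arguments; numbers are big-endian."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(tags) + payload


def send_osc(host, port, address, *args):
    # One OSC message per UDP datagram; no connection setup is needed.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *args), (host, port))
```

For example, `send_osc("127.0.0.1", 9000, "/tempo", 120)` sends a single 16-byte datagram, which illustrates why OSC suits low-latency control data.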

One of the key innovations in UrMus’ networking support is the recognition that addressing network users is an important performative problem (Essl, 2011). Musicians may want to dynamically join and leave a networked performance, which requires support for joining and leaving that is technologically easy to manage. Within UrMus we re-appropriate Zeroconf network discovery technology for this purpose. At the Lua level, UrMus can launch, discover, and remove network services, and use these discoveries to connect to remote devices. This technology removes a range of difficulties of traditional connectivity setups based on static network addresses (IP addresses, URLs) by defining connectivity based on the semantic role of the participant in the network, which can be both dynamically changing and short-lived. Consider the following example: a piece has one performer playing the role of lead instrumentalist. A technical issue occurs where a device fails and has to be replaced with another device. The semantic role of the new device is the same as that of the old one, but the IP address associated with the device may change. By identifying the semantic role, rejoining the network performance with a new device is robust, whereas having to manage the network address would potentially make the same performance fragile. This process is flexible and applicable outside the context of mobile performance, and it has since also appeared in non-mobile music networking setups (Malloch et al., 2013, pp. 3087–3090).
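The role-based idea can be sketched with an in-memory directory in Python. This is a stand-in only: a real Zeroconf setup announces and browses services over multicast DNS (DNS-SD), and `RoleDirectory` with its methods is a hypothetical name, not UrMus’ Lua API.

```python
class RoleDirectory:
    """In-memory stand-in for Zeroconf-style discovery: devices announce
    a semantic role, and peers resolve roles instead of hard-coded IPs."""

    def __init__(self):
        self._services = {}   # role -> (host, port)
        self._listeners = []  # callbacks notified on any change

    def announce(self, role, host, port):
        # Re-announcing an existing role simply replaces its address.
        self._services[role] = (host, port)
        self._notify(role)

    def withdraw(self, role):
        if self._services.pop(role, None) is not None:
            self._notify(role)

    def resolve(self, role):
        return self._services.get(role)

    def on_change(self, callback):
        self._listeners.append(callback)

    def _notify(self, role):
        for callback in self._listeners:
            callback(role, self._services.get(role))


directory = RoleDirectory()
directory.announce("lead", "192.168.0.12", 9000)
directory.announce("lead", "192.168.0.31", 9000)  # replacement device
```

Peers that address the "lead" role now resolve the replacement device automatically, which is the robustness argument made above; the addresses are illustrative.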

3.4 Projection for Audience Communication

UrMus was also designed to project visual content to an audience, which many consider a crucial aspect of computer music performance. Early in UrMus’ development, iOS did not offer high-level support for projection, which therefore had to be achieved at the OpenGL ES level. We developed support at this level to allow a range of projection strategies. The standard strategy, since added to iOS as automatic default support, is to mirror the rendered display onto the projection. However, a performer may want to differentiate between the performance display and the audience display. For this reason, UrMus also offered a separate screen-rendering mode in which different rendering pages were associated with the device and the projection. This gives the artist full control over what visual content is shared, and whether that is the performance interface or some other visual content can be chosen dynamically. Functionally, both displays share the same program state, so visualisations can be fully interwoven with any aspect of the performance interface.
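The difference between mirroring and separate rendering can be sketched in Python as pages that draw from one shared state, with each display assigned a page. Everything here (`Renderer`, page names, text output in place of pixels) is a hypothetical illustration, not UrMus’ rendering API.

```python
class Renderer:
    """Dual-display sketch: pages draw from one shared program state,
    and each display (device, projector) is assigned a page."""

    def __init__(self):
        self.state = {"level": 0.0}
        self.pages = {}
        self.assignment = {}  # display -> page name; empty until a mode is set

    def add_page(self, name, draw):
        self.pages[name] = draw

    def mirror(self, page):
        # Mirroring: the projector shows exactly what the performer sees.
        self.assignment = {"device": page, "projector": page}

    def split(self, device_page, projector_page):
        # Separate mode: performer interface and audience view differ.
        self.assignment = {"device": device_page, "projector": projector_page}

    def frame(self):
        # Render one frame per display; all pages read the same state.
        return {display: self.pages[page](self.state)
                for display, page in self.assignment.items()}


r = Renderer()
r.add_page("controls", lambda s: f"slider at {s['level']:.1f}")
r.add_page("visuals", lambda s: "*" * int(s["level"] * 10))
r.split("controls", "visuals")
r.state["level"] = 0.5
```

Here `r.frame()` returns `{"device": "slider at 0.5", "projector": "*****"}`: one state change drives both the performer’s control view and the audience visual.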

3.5 Machine Learning and Performance

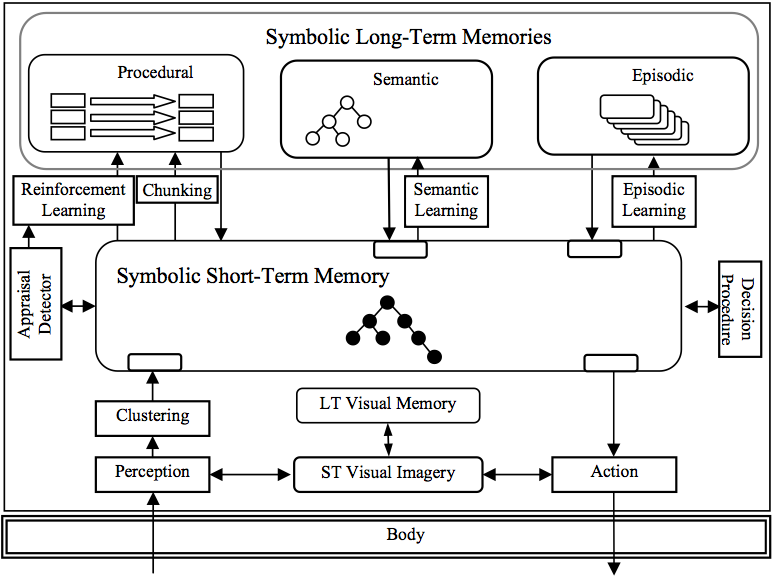

A critical question for any music environment is which current technological trends are worth including. Within UrMus, we take an experimental view on this question. The utility of new technology can only be explored if there is exposure and opportunity. For this reason, we tend to err on the side of incorporating new potential when it is available. An example of a research trend in music technology interactions is the incorporation of machine learning techniques (Fiebrink et al., 2008; Fiebrink, 2011; Gillian, 2011; Caramiaux & Tanaka, 2013). Weka is one of the most popular environments for machine learning integration in music performance, but on mobile platforms only a Java version exists, which prevents cross-platform portability. In UrMus we experimented with integrating a machine learning environment called Soar (Laird, 2008), which emphasises cognitive aspects in its machinery. It can be used via a rule-based language and provides a range of contemporary machine learning methods (Derbinsky & Essl, 2011). We used the environment to realise machine-learned collaborative drumming via reinforcement learning (Derbinsky & Essl, 2012). Rule-based patterns have also appeared in a somewhat different context to create expressivity in music performance on mobile platforms (Fabiani et al., 2011, pp. 116–119).

Cognitive architectures are appealing for use in music systems because they provide approachable analogies for the construction of artificial musical agents. Mental processes such as remembering and forgetting musical phrases have intuitive analogies that transcend abstract notions of mathematical approaches to machine learning. We believe that this makes machine learning more accessible while retaining far-reaching flexibility. Furthermore, cognitive architectures already provide a layer of abstraction over typical machine learning techniques by emphasising the design of agents with intelligent behaviour over low-level learning.

Fig. 4 shows the structural architecture of Soar. It has various forms of memory allowing for symbolic and episodic storage, retrieval, and forgetting. Procedural memory deals with decision making and encodes conditional rules of action.

Soar is integrated in UrMus as an extension to the Lua API. The core of the integration is the ability to load arbitrary Soar rule sets, create and change input states, execute the rule set and learning algorithms, and finally read outputs. Pragmatically, this means that a composer who wants to engage with machine learning in UrMus will have to learn how to construct Soar rule files. In return, a wide variety of intelligent agents can be modelled inside UrMus using this approach.
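The integration workflow (load rules, set inputs, run, read outputs) can be mirrored by a toy production system in Python. This is explicitly not Soar: it has no Soar rule language, memory systems, or learning, and `Rule`, `ToyAgent`, and the drumming rules are hypothetical illustrations of the call sequence only.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]
    action: Callable[[dict], dict]


@dataclass
class ToyAgent:
    """Not Soar: a toy production system that follows the same cycle of
    loading rules, setting inputs, running and reading outputs."""
    rules: list = field(default_factory=list)
    inputs: dict = field(default_factory=dict)
    outputs: dict = field(default_factory=dict)

    def load_rules(self, rules):
        self.rules = list(rules)

    def set_input(self, key, value):
        self.inputs[key] = value

    def run_cycle(self):
        # Fire the first rule whose condition matches the current inputs.
        self.outputs = {}
        for rule in self.rules:
            if rule.condition(self.inputs):
                self.outputs = rule.action(self.inputs)
                self.outputs["fired"] = rule.name
                break
        return self.outputs


agent = ToyAgent()
agent.load_rules([
    # Play when the partner is silent on this beat, otherwise rest.
    Rule("answer", lambda i: not i.get("partner_hit", False),
         lambda i: {"hit": True, "velocity": 0.8}),
    Rule("rest", lambda i: True, lambda i: {"hit": False}),
])
agent.set_input("partner_hit", True)
```

Calling `agent.run_cycle()` now yields `{"hit": False, "fired": "rest"}`; in a real Soar agent the rules, and their reinforcement-learned preferences, would live in Soar rule files instead.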

4 Related Work

The emergence of portable computational devices such as PDAs, which would later develop into smart phones and current multi-touch-centric devices, provided a new avenue for considering music system design. Mobility is an attractive performance dimension, recognised and advocated early on by Tanaka (2004, pp. 154–156).

As early as 2003, Geiger (2003) offered a port of PureData for a Linux-based PDA, despite PDAs being slow and providing very limited screen real estate. This may be the first full-fledged synthesis environment on a mobile platform. Substantially more limited, though still rather flexible, is an even earlier sequencer-type environment called nanoloop by Wittchow (Behrendt, 2005), which turned a Game Boy into a music performance system for a sequential musical style. A more recent example of a performance environment that supports specific musical styles while allowing performative flexibility is ZooZBeat (Weinberg et al., 2009).

SpeedDial was an attempt to bring live patching to 12-key keypad smart phones (Essl, 2009) by allowing dataflow paths to be routed through short number sequences, and it predates the turn of mobile smart phone architectures towards multi-touch screens. UrMus is a follow-up to SpeedDial, though completely redesigned from scratch. SenSynth is a more recent patching environment (McGee et al., 2012) that contains many elements of SpeedDial on a multi-touch platform but focuses purely on patching.

Few systems are designed to make mobile phones fully fledged music performance systems. Most retain a separation between performer and instrument designer/programmer. In fact, it has been argued that this is the right approach given the device size and other limitations (Tanaka et al., 2012). Tahiroglu et al. (2012, pp. 807–808) proposed design principles for mobile music graphical user interfaces.

In this paradigm, musical performances on mobile devices are supported by software libraries and APIs that are usually written in a system-level programming language such as C++ and are meant to provide sound synthesis and potentially some interaction and networking capabilities. MobileSTK offered the STK synthesis engine (Cook & Scavone, 1999) for the now largely obsolete Symbian mobile operating system (Essl & Rohs, 2006). Nowadays Apple’s iOS and Google’s Android operating systems are the two most widely supported platforms. Perhaps the most widely used synthesis library for these mobile platforms is libpd (Brinkmann et al., 2011), offering a port of the PureData synthesis engine for mobile devices. There is also a SuperCollider port for Android (Shaw, 2010), which has been used in mobile performance projects (Allison & Dell, 2012). The MuMo library (Bryan et al., 2010, pp. 174–177) offers an STK (Cook & Scavone, 1999) port and low-level audio support for iOS development while also providing wrappers for OSC networking and improved access to sensors at the C++ level. These libraries accelerate musical application development but are generally not intended to provide capabilities for non-programmers or real-time manipulation. There are also ports of CSound to iOS and Android platforms (Lazzarini et al., 2012; Yi & Lazzarini, 2012) and an infrastructure that compiles Faust code into program code for mobile devices (Michon et al., 2015, pp. 396–399). A tablet version of ChucK’s miniAudicle text editor has also appeared (Salazar & Wang, 2014).

RjDj is a commercial project that runs on a background Pure Data engine, which has since become broadly available as libpd (Brinkmann et al., 2011). It is not intended to be a general music environment, but rather provides a platform that allows artists to create interactive music projects in PD remotely. More recently, the ReacTable mobile app(2) provides music performance based on proximity graphical mapping.

Few projects directly tackle live coding for musical purposes on mobile devices. SpeedDial appears to be the first project that employed an on-the-fly paradigm on mobile devices (Essl, 2009). Nick Collins released a trilogy of mobile apps to promote the TOPLAP live-coding collective that incorporated graphical, small-instruction-set live coding with specific aesthetics on mobile devices (Essl, 2009). There are some efforts to support non-musical on-device programming on mobile devices. Codea allows on-device programming on iPad devices using the Lua scripting language (Saëns, 2012). Among a plethora of emerging cross-platform programming settings, LiveCode (Holgate, 2012) explicitly offers a compiler-free paradigm inspired by a live-coding ethos.

A number of OSC remote control applications have emerged. In conjunction with a remote OSC host that generates sound, these become part of networked music environments. In particular, Control (Roberts, 2011) is interesting as it crosses over into programmability and offers new ways to control on-the-fly interface construction over the network (Roberts et al., 2012). This work also crosses over into an emerging field of investigation that seeks to build music environments on the web platform (Roberts et al., 2014, pp. 239–242).

Mobile music programming has since also entered university level pedagogy (Essl, 2010a), where programming and live-performance are co-taught to an interdisciplinary student body and mobile phone ensembles have formed (Wang et al., 2008).

When UrMus was originally conceived, a few projects existed that addressed interaction design for mobile devices. Probably the closest was RjDj, a commercial environment using Pure Data as the audio engine.(3) One of the authors’ personal motivations for developing UrMus was academic: there was a need for an open environment for research and teaching, which was lacking in the landscape of mobile environments at the time. Music apps were often too tightly bound up with commercial interests to allow open exploration. At the same time, we saw this as an opportunity to incorporate a broad range of design options into a new setup.

Audio processing engines have a long history, going back to Max Mathews’ Music I. Over time, multiple paradigms have emerged that allow users to generate and process music. The most dominant paradigms are text-based systems, such as Csound (Boulanger, 2000), Arctic/Nyquist (Dannenberg et al., 1986, pp. 67–78; Dannenberg, 1997a, pp. 71–82; 1997b, pp. 50–60), SuperCollider (McCartney, 2002, pp. 61–68) or ChucK (Wang & Cook, 2004, pp. 812–815) on the one hand, and graphical patching systems, such as Max/MSP (Puckette, 2002, pp. 31–43) or Pure Data (Pd) (Puckette, 1996, pp. 37–41, 1997, pp. 1–4) on the other. For a more detailed review of audio processing languages and systems, see (Wang, 2008; Geiger, 2005, pp. 120–136).

UrMus does not commit to any particular paradigm per se, but rather seeks to offer an environment in which many different paradigms can be instantiated. It supports full 2D UI design. In a less general form, this can also be seen in RjDj as well as other iPhone apps such as MrMr.(4) MrMr’s main design is that of an Open Sound Control remote client with a configurable UI based on predefined widgets. UrMus instead bases its UI design on more general concepts such as frames, from which widgets can be derived and designed. Also, UrMus is not meant to be an OSC remote; it is primarily intended to perform all processing, whether audio or multimedia, directly on the mobile device.

The idea of performance interfaces has previously appeared in various forms. In fact, the Max/MSP and Pure Data interfaces themselves mix in performance elements through widgets. The Audicle serves a similar purpose for ChucK (Wang et al., 2006, pp. 49–52). The Audicle’s idea of offering multiple representations, called faces, is closely related to UrMus. However, some aspects of the Audicle were rather closely tied to the needs of live coding and to the underlying language ChucK.

Vessel is a multimedia scripting system based on Lua (Wakefield & Smith, 2007, pp. 1–4). In this sense, it is closely related to UrMus. However, UrMus’ goals are rather different from Vessel’s. The primary function of Lua in UrMus is not to script multimedia and synthesis functionality but to serve as a programmatic API and a middle layer between lower-level functionality and the higher levels of the system. For example, the synthesis computations in UrMus’ dataflow engine UrSound (Essl, 2010c, 2012) are fully realised in C, whereas Vessel is designed for algorithmic generation. However, with increasing computational performance on mobile devices, one could envision merging the ideas of Vessel and UrMus, which might be facilitated by the fact that they already share the same scripting language.

Many mobile apps have been developed to facilitate music making in various ways. Many of them are either not music systems or have a narrow musical focus (such as sequencing). A review of music apps on the Google Play store up to 2012 (Dubus et al., 2012, pp. 541–546) found that, at the time, none (0%) of the music apps could be classified as sound programming engines. An extensive review of music apps on the Apple App Store of 2015 (Axford, 2015) does not appear to document sound programming environments either. Searching that review with the Google Books search engine, the word “code” appears twice, but only once in the sense of programming. The project in question is BitWiz Audio Synth 2, which allows arithmetic wave-shape synthesis via expressions written in a C-like syntax; general-purpose programming is not possible in this environment. The word “programming” appears five times, but exclusively in the context of programming drum loops.

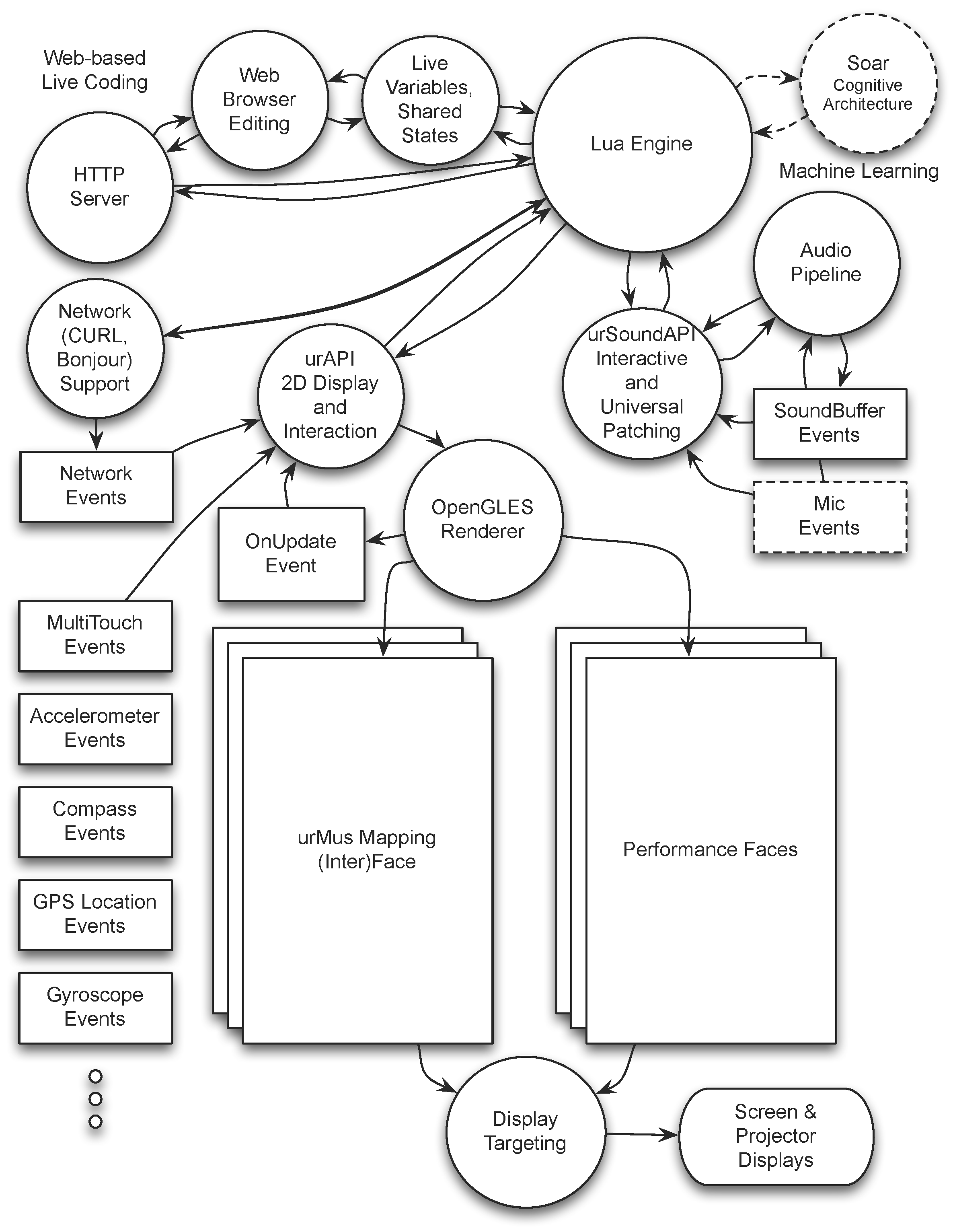

5 Aspects of System Design

An overview of UrMus’ system architecture can be seen in Fig. 5. The Lua engine is the central core of the architecture, as all components of the lower-level functionality are exposed to higher levels via a Lua API. The overall design strives for modularity, so that expansions and modifications touch as few components as possible. More important than this low-level design choice are the higher-level motivations of the architecture, which we discuss in greater detail in this section.

5.1 Data-flow and Procedural Programming: Streams versus Events

The UrMus environment provides procedural programming via Lua. It also supports dataflow-based programming via an engine within UrMus called UrSound (Essl, 2010c). UrSound is accessible at the Lua level but in many ways provides an independent realisation of a programming paradigm. As a consequence, there are two ways to access inputs and outputs in UrMus. In the dataflow paradigm, incoming data can be piped into the dataflow as a data stream. In the procedural paradigm (Lua), incoming data are offered up via events. In our experience, this is a source of confusion for new users of UrMus, who tend to expect only one paradigmatic option.

5.2 Efficient Programming: Multi-rate and Events

A number of design decisions were driven by the computational performance of mobile devices, which lags behind that of full-powered desktop CPUs. The emphasis on event-based programming and the realisation of a multi-rate pipeline both serve this design goal. The multi-rate pipeline is designed such that data can flow at locally appropriate rates. Standard single-rate pipelines traditionally run at audio rate, which is very high compared to the rate at which many inputs change. Audio is propagated at 44.1–48 kHz, while typical gesture-relevant inputs do not need, and often are not provided at, rates above 100–1000 Hz. Hence, processing sensor data at its natural rate reduces the number of computations on those signal paths by a factor of roughly 44 to 480. The broader principle here is to provide an architecture that encourages computation only when necessary.

This very same principle also motivates a strongly event-based design. UrMus by default does not provide a main run loop. Instead, it offers two basic ways to execute code: directly, and via a triggering event. Direct execution does not happen in a run loop and hence is largely meant for one-shot computation that ends. It is suitable for setting up the program state and modifying it directly via programming. An example of direct execution would be program startup code that sets up the interface, the interactions, and perhaps a synthesis algorithm. After the visual and interaction elements are created, this code has no further purpose and can naturally end.
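The size of the saving follows directly from the ratio of audio rate to sensor rate. The short Python calculation below is only an illustrative sketch of that arithmetic, not UrMus code; the rates are the ones quoted in the text, and the function name `reduction_factor` is hypothetical.

```python
# Illustrative rates taken from the text (Hz).
AUDIO_RATES_HZ = (44_100, 48_000)
SENSOR_RATES_HZ = (100, 1_000)


def reduction_factor(audio_hz: float, sensor_hz: float) -> float:
    """How many times fewer updates a sensor-rate path needs than an audio-rate one."""
    return audio_hz / sensor_hz


factors = [reduction_factor(a, s) for a in AUDIO_RATES_HZ for s in SENSOR_RATES_HZ]
lo, hi = min(factors), max(factors)
print(f"reduction factor: {lo:.1f}x to {hi:.1f}x")  # reduction factor: 44.1x to 480.0x
```

The lower bound pairs the slowest audio rate with the fastest sensor rate, and the upper bound pairs the fastest audio rate with the slowest sensor rate.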

Events are the mechanism for realising everything else. Events are triggered at specific moments. Individual event types can be linked to Lua code (the “Event Handler”) that is executed every time that particular event occurs, and event-relevant data are passed to that code. The vast majority of events relate to sensory input, such as multi-touch, accelerometer, gyroscope, and magnetic field sensor events. Some events are triggered by changes to the underlying layout (for example, when regions are moved). One event, called OnUpdate, is linked to the graphical rendering update; it also carries the time that has elapsed since the last graphical update.

Events lead to program segments that only execute when something relevant happens. Idling or polling is removed from the system. Timing can be naturally realised by using the provided elapsed time information.
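The split between one-shot direct execution and event handlers can be sketched in a few lines. The Python below is a generic illustration of an event-driven design without a polling main loop, not UrMus’ implementation; the class `EventDispatcher`, its methods, and the event names other than OnUpdate are hypothetical.

```python
from collections import defaultdict
from typing import Callable


class EventDispatcher:
    """Hypothetical sketch: handler code runs only when its event fires; nothing polls."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[..., None]]] = defaultdict(list)

    def set_handler(self, event: str, handler: Callable[..., None]) -> None:
        """Link a handler to an event type."""
        self._handlers[event].append(handler)

    def fire(self, event: str, *data) -> int:
        """Call every handler for `event` with the event data; return how many ran."""
        handlers = self._handlers.get(event, [])  # .get does not create empty entries
        for handler in handlers:
            handler(*data)
        return len(handlers)


log = []
events = EventDispatcher()
# Direct execution: one-shot setup code registers handlers and then simply ends.
events.set_handler("OnTouch", lambda x, y: log.append(("touch", x, y)))
events.set_handler("OnUpdate", lambda elapsed: log.append(("update", elapsed)))

# Later, the runtime fires events as input arrives or frames are rendered.
events.fire("OnTouch", 120, 300)
events.fire("OnUpdate", 0.016)
events.fire("OnAccel", 0.1, -0.2, 0.98)  # no handler registered: no code runs
print(log)  # [('touch', 120, 300), ('update', 0.016)]
```

Between events, no user code executes at all, which is the computational saving the design aims for.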

5.3 Time

Time is handled in two distinct ways in UrMus. The first is via the OnUpdate event, which is linked to graphical rendering. The elapsed time between graphical rendering frames gives time granularity at the level of frame rates and is ideal for timing related to animation or user interaction. The graphical frame rate is subject to variation. However, since the time elapsed since the last frame is passed as an argument, steady visual animations are easy to realise by using this elapsed time to advance the animation.
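Advancing an animation by the elapsed time, rather than by a fixed step per frame, makes its speed independent of the frame rate. The Python sketch below assumes a simple constant-velocity motion and hypothetical function names; each value in `frame_times` stands in for the elapsed time an OnUpdate handler would receive.

```python
def advance(position: float, velocity: float, elapsed: float) -> float:
    """Move by velocity * elapsed seconds, so speed does not depend on the frame rate."""
    return position + velocity * elapsed


def simulate(frame_times: list[float], velocity: float = 100.0) -> float:
    """Apply one update per rendered frame, using that frame's elapsed time."""
    pos = 0.0
    for elapsed in frame_times:
        pos = advance(pos, velocity, elapsed)
    return pos


steady = simulate([1 / 60] * 60)                   # one second at a steady 60 fps
jittery = simulate([1 / 30] * 15 + [1 / 60] * 30)  # one second with a slow phase
print(round(steady, 6), round(jittery, 6))         # 100.0 100.0
```

Both runs cover one second of wall-clock time, so the object ends up in the same place even though the second run rendered fewer frames.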

The second is offered via the dataflow pipeline, which contains numerous timing-related mechanisms (Essl, 2012). In principle, any input (called a source) and any output (called a sink) can provide timing. For example, if the input is the microphone signal, it comes with audio-rate timing. If an output is driven by user-triggered interaction, then the timing is the pattern of interaction chosen by the user. This means that in principle there is no master rate in UrMus’s dataflow engine, and the same patch can be operated at different rates, depending on which timing sources or sinks it is connected to. Furthermore, the rate of the dataflow network in UrMus is not necessarily global but can be local. Some aspects of a dataflow unit may require that timing be propagated. For example, in a filter the rate at the input is typically tied to the rate at the output. In UrMus we call this property coupled. Other inputs and outputs do not have that relationship. For example, changing the frequency of a sine oscillator does not require an update of the output. This property is called decoupled. Consequently, rates can be changed locally at decoupled connections. The result is a multi-rate dataflow pipeline in which timing depends on the local flow rate of information. This makes the latter mechanism more complicated to understand and handle, but it also provides substantial flexibility and computational efficiency. Furthermore, UrMus offers a range of FlowBoxes that allow manipulation of local timing, or the observation of timed information at different rates (Essl, 2012).
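The coupled/decoupled distinction can be illustrated with two toy dataflow units. The Python sketch below is not UrSound code: the class and method names are hypothetical, the filter is a simple one-pole lowpass chosen for brevity, and the configuration of a 48 kHz audio sink pulling against 100 Hz sensor updates is an assumption.

```python
import math


class SinOsc:
    """Hypothetical oscillator: its Freq inlet is decoupled from its output."""

    def __init__(self, sample_rate: float) -> None:
        self.sample_rate = sample_rate
        self.freq = 440.0
        self.phase = 0.0
        self.computed = 0  # output samples actually produced

    def set_freq(self, hz: float) -> None:
        # Decoupled inlet: store the new value; no output sample is computed here.
        self.freq = hz

    def pull(self) -> float:
        # Output is computed only when a sink pulls, at that sink's rate.
        out = math.sin(self.phase)
        self.phase = (self.phase + 2 * math.pi * self.freq / self.sample_rate) % (2 * math.pi)
        self.computed += 1
        return out


class OnePoleLowpass:
    """Hypothetical filter: input and output are coupled (one output per input)."""

    def __init__(self, a: float) -> None:
        self.a = a
        self.y = 0.0
        self.outputs: list[float] = []

    def push(self, x: float) -> None:
        # Coupled: every pushed input sample produces exactly one output sample.
        self.y = self.a * x + (1 - self.a) * self.y
        self.outputs.append(self.y)


osc = SinOsc(sample_rate=48_000)
smooth = OnePoleLowpass(a=0.2)
for _ in range(100):                    # one second of 100 Hz sensor updates
    smooth.push(0.5)                    # stand-in for a normalised sensor reading
    osc.set_freq(220 + 220 * smooth.y)  # decoupled: no audio is computed here
    for _ in range(480):                # audio sink pulls 480 samples per sensor update
        osc.pull()

print(len(smooth.outputs), osc.computed)  # 100 48000
```

In the same second the filter runs 100 times and the oscillator 48,000 times: the decoupled frequency inlet lets the two rates differ locally, while the coupled filter keeps its output rate equal to its input rate.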

5.4 Rapid Dataflow Patching and Semantic Types

The realisation of dataflow programming that is useful for live performance requires rapid reconfiguration of dataflow connections. A particular innovation in UrMus is the development of semantic types that allow dataflows of different semantic meanings to be connected more rapidly. For example, consider replacing an input to an existing dataflow patch. The original input was an accelerometer, with a range of -1 to +1 units of gravity. The new input is a gyroscope with a range of 0 to 360 degrees. Reconnecting these two types leads to a semantic mismatch, which the user traditionally has to resolve by adding conversions manually.

The core of the solution is that all amplitude data are converted into a normed format, allowing seamless interconnection without the user having to specify the relationship between the two semantics manually. The semantic conversion now happens automatically inside the source and sink flowboxes. This reduces user input and turns typical changes to a dataflow into simple reconnections that are guaranteed to work meaningfully (Essl, 2010c). This idea is not limited to dataflow representations and has since also been realised in the text-based live-programming environment Gibber (Roberts et al., 2014, pp. 239–242).
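A minimal sketch of this idea, assuming a shared normed range of -1 to +1 and linear conversions at each end (the text does not specify the normed format, so both are assumptions): each source converts from its native units into the normed range, and each sink converts from the normed range into its own units. The function names and the oscillator frequency range are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    lo: float
    hi: float


NORM = Range(-1.0, 1.0)  # assumed common normed format


def to_norm(value: float, native: Range) -> float:
    """Source side: map a sensor's native units linearly onto the normed range."""
    t = (value - native.lo) / (native.hi - native.lo)
    return NORM.lo + t * (NORM.hi - NORM.lo)


def from_norm(value: float, target: Range) -> float:
    """Sink side: map a normed value linearly onto the parameter's own units."""
    t = (value - NORM.lo) / (NORM.hi - NORM.lo)
    return target.lo + t * (target.hi - target.lo)


ACCEL_G = Range(-1.0, 1.0)     # accelerometer, units of gravity
GYRO_DEG = Range(0.0, 360.0)   # gyroscope, degrees
FREQ_HZ = Range(110.0, 880.0)  # hypothetical oscillator frequency range

# Swapping the source needs no user-written conversion: mid-range maps to mid-range.
print(from_norm(to_norm(0.0, ACCEL_G), FREQ_HZ))     # 495.0
print(from_norm(to_norm(180.0, GYRO_DEG), FREQ_HZ))  # 495.0
```

Because every connection carries normed values, replacing the accelerometer with the gyroscope is just a reconnection; the semantic conversion lives inside the source and sink.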

5.5 Interactive Mobile Visuals

While music environments are primarily about supporting music, in reality sound rarely lives in isolation and art often co-exists with other forms of performance. In particular, visual art forms an important aspect of performance. UrMus currently supports 2-D graphical rendering primitives modelled closely after those offered by Processing (Reas & Fry, 2007), hence providing substantial capability to create visual output interactively.

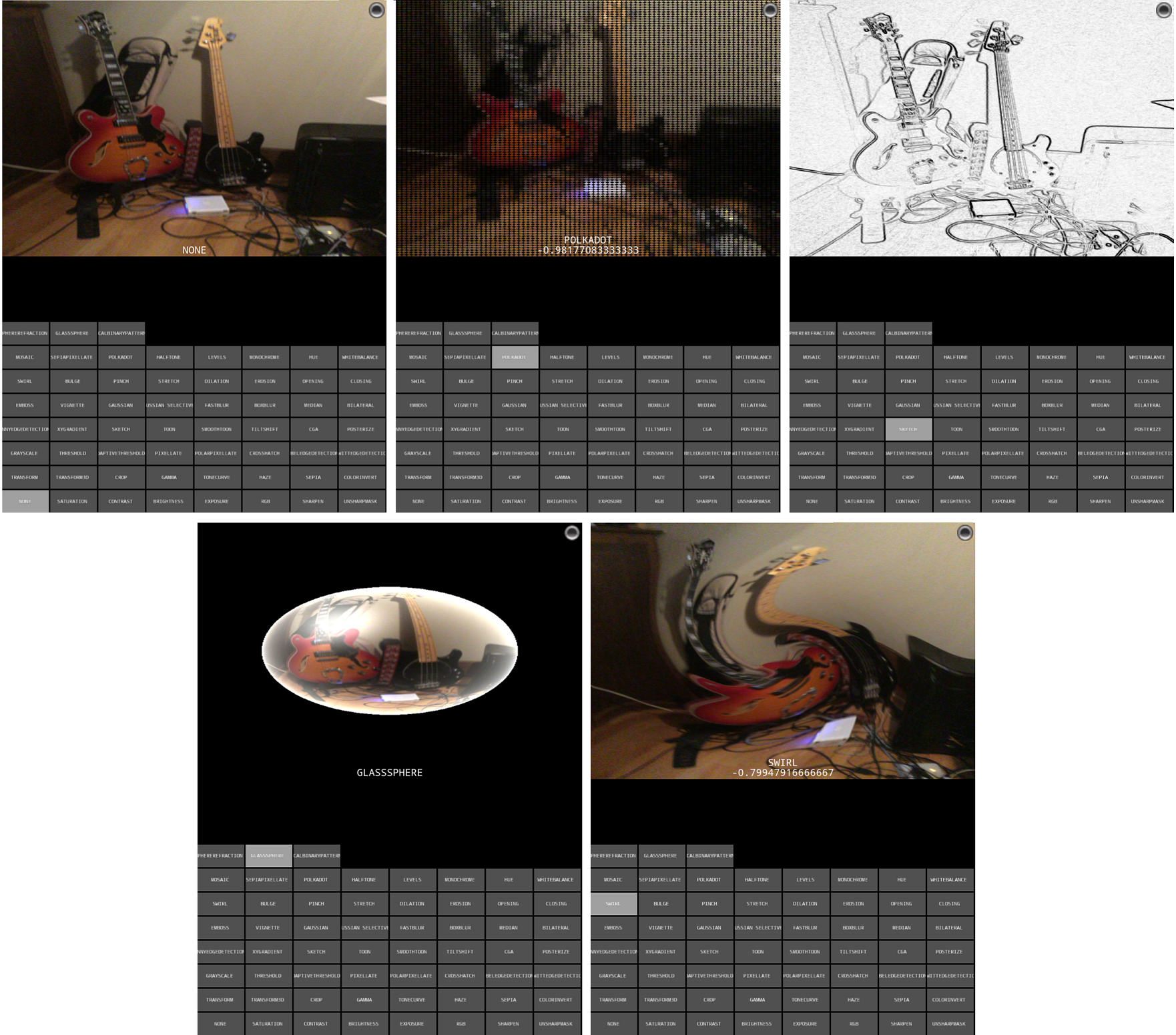

Visual information can provide rich means for generating input. The camera provides access to this information, and for this reason camera support was an important aspect of UrMus’ development. Early work on visual rendering and camera interaction was published (O’Keefe & Essl, 2011). Since then, the capability has been substantially enhanced by adding support for GPU shader-based texture manipulation via the GPUImage library (Larson, 2011). The advantage of shader-based graphical manipulation is speed: image effects that would otherwise be prohibitively expensive can be applied in real time. This is particularly attractive because they can also be applied to live camera feeds. Most, though not all, of GPUImage’s shader filters are supported in UrMus, and they can be applied globally to all textures or selectively to individual textures, including live camera textures. Effects include a range of blur, hatching, edge-enhancing, deformation, and colour filters. Some effects can be used to speed up detection algorithms. For example, the PIXELLATE effect allows fast averaging of sub-regions of the camera image and hence can improve segmented brightness detection by offloading the costly averaging step onto the GPU. Some examples of filters applied to a live camera image in UrMus can be seen in Fig. 6. These filters can be manipulated via filter parameters at interactive rates, allowing rich and dynamic mapping to sound and gestures. Different textures can receive different filters and filter parameters.
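The averaging step that a pixellate pass performs, and the brightness detection built on it, can be sketched on the CPU. The Python below only illustrates the computation that a shader would carry out in parallel on the GPU; it does not use GPUImage, and the function names, tile size, and threshold are hypothetical.

```python
def block_means(image: list[list[float]], block: int) -> list[list[float]]:
    """Average each block x block tile of a greyscale image (a pixellate-style pass)."""
    rows, cols = len(image), len(image[0])
    means = []
    for r0 in range(0, rows, block):
        row_means = []
        for c0 in range(0, cols, block):
            tile = [image[r][c]
                    for r in range(r0, min(r0 + block, rows))
                    for c in range(c0, min(c0 + block, cols))]
            row_means.append(sum(tile) / len(tile))
        means.append(row_means)
    return means


def bright_regions(means: list[list[float]], threshold: float) -> list[tuple[int, int]]:
    """Segmented brightness detection: tiles whose mean exceeds the threshold."""
    return [(i, j) for i, row in enumerate(means)
            for j, m in enumerate(row) if m > threshold]


# A 4x4 greyscale frame (0 = dark, 1 = bright) with a bright top-right corner.
image = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 0.8],
    [0.1, 0.1, 0.0, 0.0],
    [0.1, 0.1, 0.0, 0.0],
]
means = block_means(image, 2)
print(bright_regions(means, 0.5))  # [(0, 1)]
```

Once the per-tile averages exist, the detection itself is a cheap scan over a handful of values, which is why moving the averaging onto the GPU pays off.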

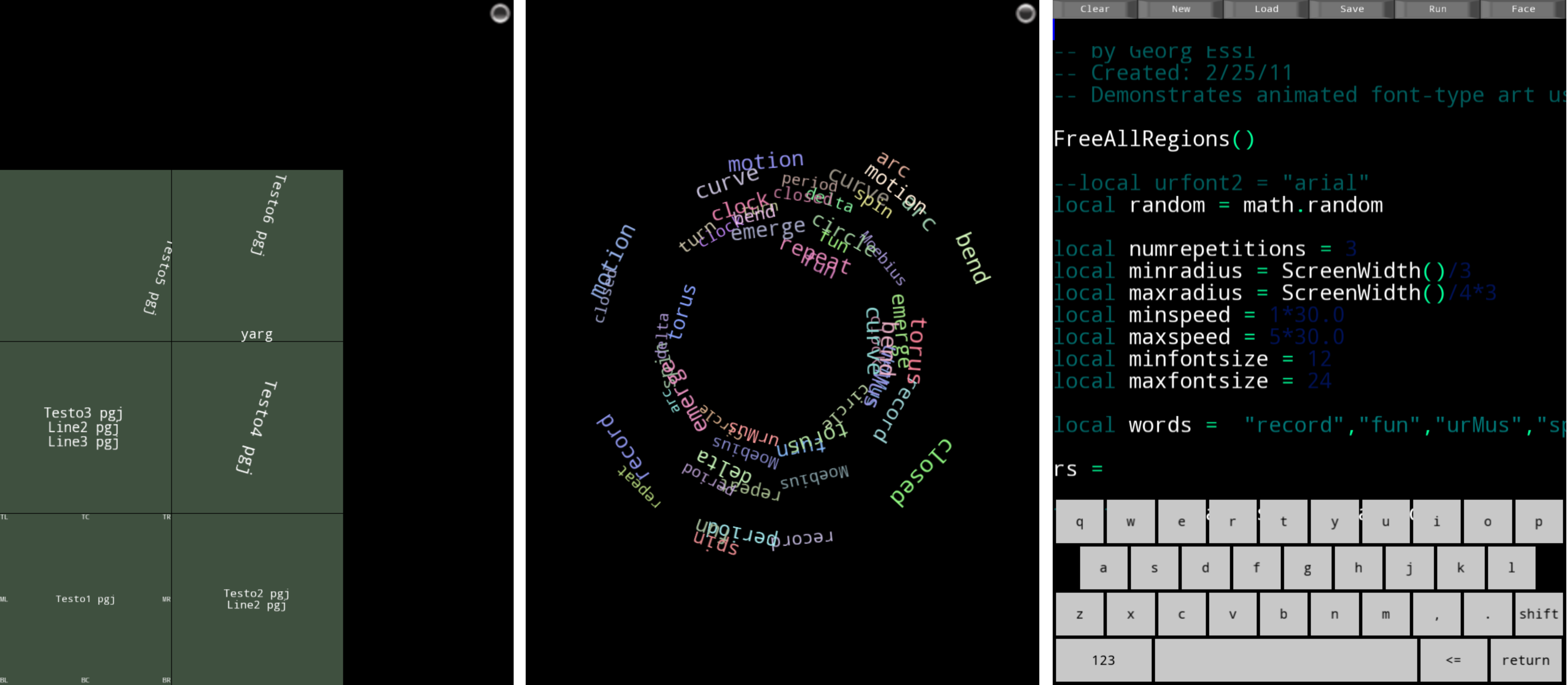

5.6 Font Art

Font rendering is important for any environment that engages in text-based interaction with the user. Moreover, since font rendering can itself be part of artistic expression, it should be supported with great flexibility. UrMus renders fonts via the FreeType 2 open source library(5), which supports glyph rendering with some text alignment support, rotation, font size, and drop shadows. As these can be modified interactively, UrMus can support interactive art forms such as temporal typography (Lee & Essl, 2015b, pp. 65–69) or live writing (Lee & Essl, 2015a). Examples of font renderings realised in UrMus can be seen in Fig. 7.

6 Interfaces and Representations

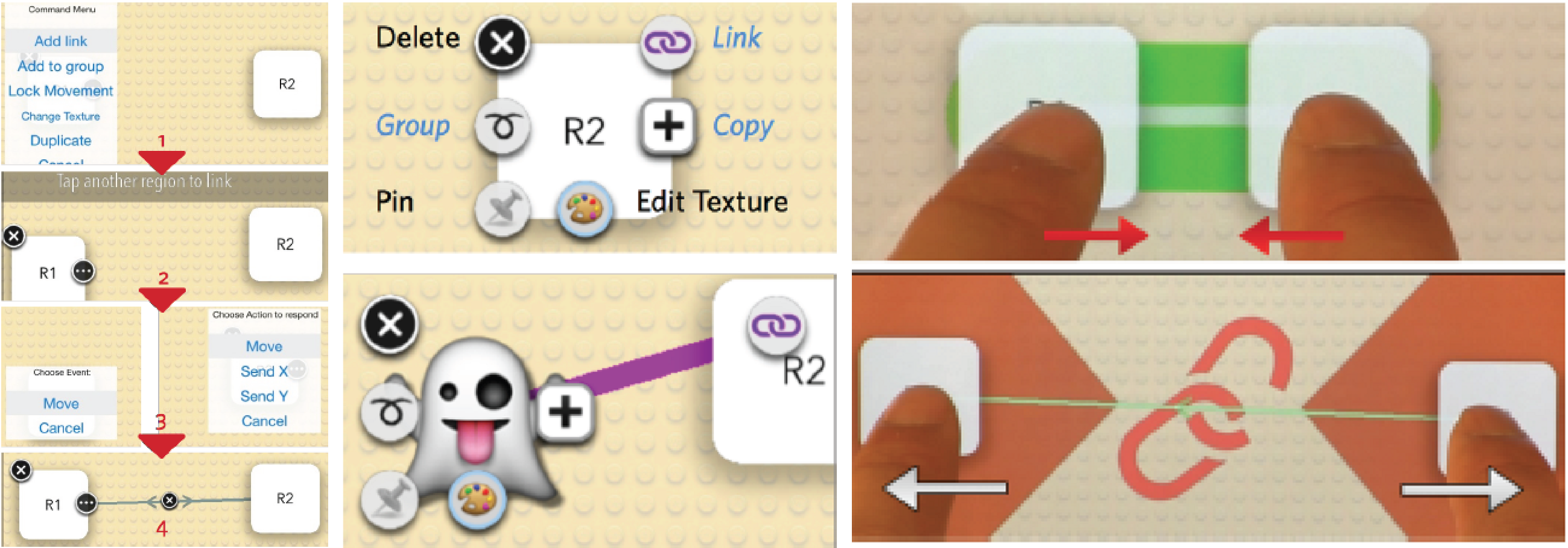

Deciding which interaction paradigms are most suitable for mobile devices, and hence for mobile music systems, is an important open problem. Some steps toward a better understanding of the problem were taken in Qi Yang’s dissertation, which looks at gesture-based interactions more broadly but also critically investigates multi-touch interactions and their UI representations on mobile devices (Yang & Essl, 2015, pp. 369–373; Yang, 2015). The user study included tasks such as building a piano interface with various interaction paradigms. Three paradigms were implemented: (1) a menu-driven interaction that mimics more traditional desktop GUI workflows; (2) an icon-based linking paradigm similar to the graphical patching approaches taken in music systems such as Max/MSP or Pure Data; and (3) a novel gesture-based interaction paradigm that provides functionality such as linking and grouping through multi-touch gestures with a visual cue system (see Fig. 8). All of these representations were realised in UrMus and took advantage of its meta-environment design.

Additionally, Fitts’ law type ballistic motion experiments were performed on mobile devices of different sizes to better understand how device form factor and, in particular, occlusion induced by hand position influence raw interaction performance on these devices. The results are surprisingly complex. Perhaps most interesting is the finding that multi-touch gesture-based interactions performed worse than menu- and icon-based interactions. In all these studies, motion patterns were also recorded, allowing a Fitts’ law type evaluation. These were then compared against a pure Fitts’ law study that took occlusion into account. Counter-intuitively, the pure Fitts’ law study indicated that ballistic motions on smaller iPads were slower than on larger iPads when targets were held at a constant physical size. This runs counter to the Fitts’ law expectation: on a larger device, targets of the same physical size tend to lie farther apart, so the index of difficulty, which grows with distance, should be higher and movements slower. Another paradoxical finding is that occlusion actually increased ballistic performance (albeit at the cost of higher error rates). Apparently, the inability to use visual feedback to correct ballistic motion increases throughput.
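The naive expectation mentioned above can be made concrete with the Shannon formulation of Fitts’ law, MT = a + b·log2(D/W + 1), where D is the distance to the target and W its width. In the Python sketch below, the regression constants a and b and the distances are hypothetical values chosen only for illustration; they are not results from the study.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1)


def predicted_movement_time(distance: float, width: float,
                            a: float = 0.1, b: float = 0.15) -> float:
    """MT = a + b * ID; a (s) and b (s/bit) are hypothetical regression constants."""
    return a + b * index_of_difficulty(distance, width)


# Same physical target width (mm), but a longer reach on a larger screen
# (hypothetical distances).
small = predicted_movement_time(distance=90, width=10)
large = predicted_movement_time(distance=150, width=10)
print(f"{index_of_difficulty(90, 10):.2f} bits vs {index_of_difficulty(150, 10):.2f} bits")
# 3.32 bits vs 4.00 bits
```

Under this model the larger device should always be slower for equal target widths, which is exactly the prediction the measured iPad results contradicted.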

7 Evolution of Lua API Syntax

In early versions of the Lua API, closeness to existing Lua APIs such as World of Warcraft’s interface-modding Lua API was a primary design goal. Given the popularity of the game and the stock of existing Lua code that created interfaces, the potential to transfer this existing code base to UrMus seemed attractive. The initial design of the API focused on giving access to immediate functionality. With the introduction of the UrMus web editing interface, Lua programming became much more important, and live coding in this interface in particular made the structure of the syntax more important. Concise syntax is more conducive to live coding practices than verbose syntax. Hence we streamlined the API, provided shorthand versions, and introduced crisper methods to represent more complex relationships, particularly in the part of the API that controls the dataflow engine. To illustrate the change, we compare two equivalent code segments in the old and new notation. Both map the x and y axes of the accelerometer to the frequency and amplitude of a sine oscillator.

Here is an example of the old API syntax:

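```lua
-- Old API: flowboxes are looked up by name in the global table
accel = _G["FBAccel"]
mySinOsc = _G["FBSinOsc"]
dac = _G["FBDac"]

-- Ports are addressed by number: accelerometer X (0) -> frequency (0),
-- accelerometer Y (1) -> amplitude (1)
accel:SetPushLink(0, mySinOsc, 0)
accel:SetPushLink(1, mySinOsc, 1)

-- The DAC pulls the oscillator's output into the audio stream
dac:SetPullLink(0, mySinOsc, 0)
```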

In the new, more compact API syntax, the same code looks like this:

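```lua
-- New API: instantiable flowboxes are created with FlowBox()
mySinOsc = FlowBox(FBSinOsc)

-- Sensor flowboxes are used directly; ports are named members
FBAccel.X:SetPush(mySinOsc.Freq)
FBAccel.Y:SetPush(mySinOsc.Amp)

-- The DAC pulls the oscillator's output
FBDac.In:SetPull(mySinOsc.Out)
```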

The main change is that the factory versions of flowboxes can now be used directly if they represent a sensor or some other non-instantiable flowbox; in the later version, FBAccel is used directly in building the patch. A more complicated change is the conversion of flowboxes into objects that carry their inputs and outputs as human-readable member variables, whereas in the previous version these inputs and outputs were addressed numerically. Connections are now created directly through member functions of a flowbox’s inlets and outlets. Method names have been shortened (SetPushLink to SetPush). Overall, this reduces typing effort while increasing human readability. The question of syntax in live coding is a long-standing one. For example, it was important in shaping ChucK’s syntax (Wang & Cook, 2004) and relates to design considerations in the Gibber API (Roberts et al., 2014, pp. 239–242).
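The shift from numeric port indices to named member ports can be sketched in a language-neutral way. The Python below is not the UrMus implementation; the classes `Port` and `FlowBox` and the method `set_push` are hypothetical stand-ins that only show how objects can carry their inlets and outlets as readable attributes with connection methods attached to each port.

```python
class Port:
    """Hypothetical inlet/outlet that knows its owner and its outgoing links."""

    def __init__(self, box: "FlowBox", name: str) -> None:
        self.box, self.name = box, name
        self.links: list["Port"] = []

    def set_push(self, target: "Port") -> None:
        """Connect this outlet to `target` (a short, port-level connection method)."""
        self.links.append(target)

    def __repr__(self) -> str:
        return f"{self.box.name}.{self.name}"


class FlowBox:
    """Hypothetical flowbox exposing its ports as named attributes, not numeric indices."""

    def __init__(self, name: str, ports: list[str]) -> None:
        self.name = name
        for port in ports:
            setattr(self, port, Port(self, port))


accel = FlowBox("Accel", ["X", "Y", "Z"])
osc = FlowBox("SinOsc", ["Freq", "Amp", "Out"])
accel.X.set_push(osc.Freq)  # reads like the new API: source port -> target port
accel.Y.set_push(osc.Amp)
print(accel.X.links, accel.Y.links)  # [SinOsc.Freq] [SinOsc.Amp]
```

A side benefit of named ports is that a misspelled port name fails immediately with an error, whereas a wrong numeric index can silently connect the wrong parameter.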

8 Web-Based Code Editor and Collaborative Live Coding

Early on, an HTTP server was integrated into UrMus so that it could serve a JavaScript-based editing environment to a web browser. One motivation for adding this functionality was the need for independence from operating-system-specific compilers. For example, iOS development required XCode as well as access to the Apple Developer Program. Moving programming into a web editor achieved platform independence and removed the need to provision apps. This enables developers to quickly prototype their music applications without the trouble of local compilation and of transferring updates to the device, while preserving the convenience of a laptop or desktop screen and keyboard.

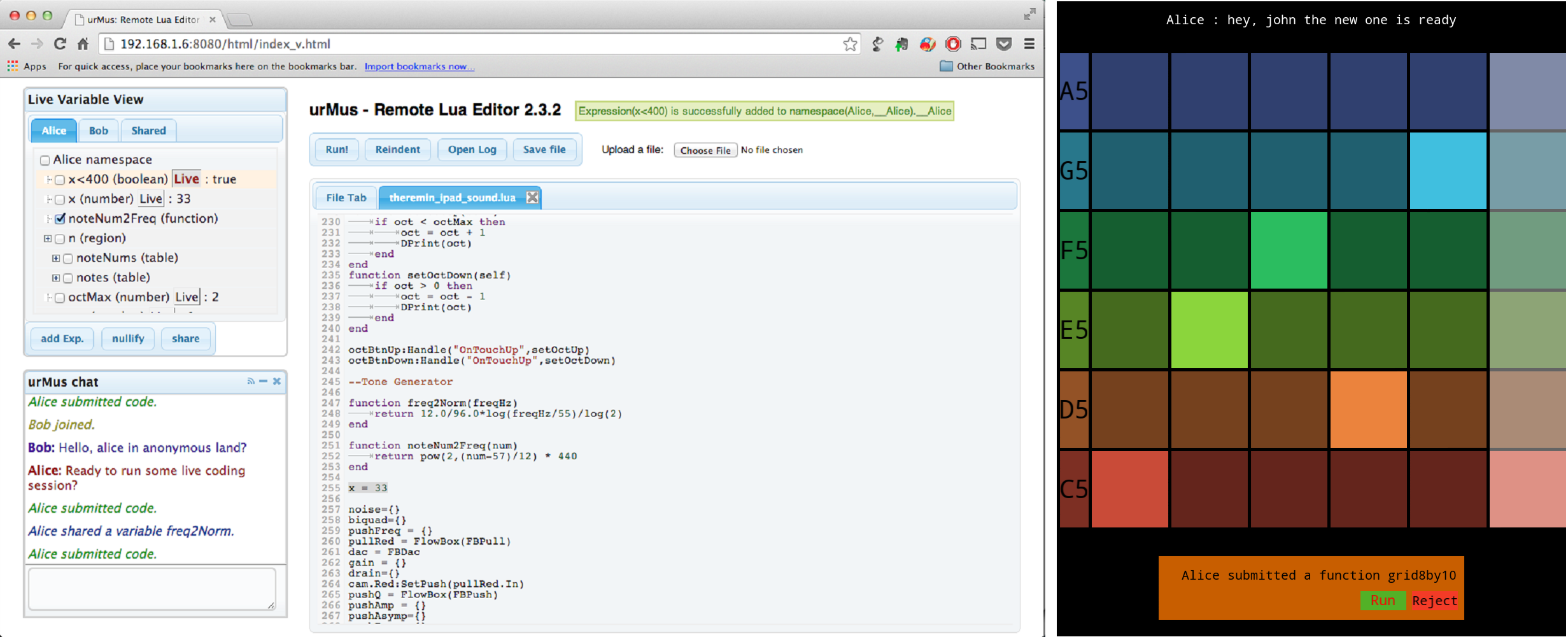

The remote web-based run-time programming environment led us to think of the web editor itself as a performance platform supporting text-based live coding, where the program runs on a mobile device (Lee & Essl, 2013, pp. 28–34). This provides an alternative route to live coding that does not necessarily go through the mobile device itself but through one or more remote collaborators. Important aspects of this form of performance are communication, the management of programming on a shared run-time environment, and code-block and other live code execution strategies. The original editor was CodeMirror(6), but it was found to lack good support for live collaboration. Hence we developed a number of extensions to the editor (Lee & Essl, 2014, pp. 263–268), as can be seen in Fig. 9 (left). Communication can also be extended onto the mobile device interface itself, as depicted in the same figure (right).

Numerous aspects of these extensions advance new notions of supporting liveness collaboratively. For example, the variable view can display live updates of variable states, making explicit to multiple collaborators, both remote programmers and a user on the mobile device, how the currently running code changes them. Each collaborator starts with an individual variable namespace that guards against potential conflicts in code (e.g., variable or function naming). Individual namespaces can be merged in a controlled manner, so that naming and state conflicts can be managed explicitly between collaborators. The live chat not only facilitates message-based communication but also displays when new functions have been added to the namespace (by running them) or when a variable has entered the shared namespace. These extensions are designed specifically to treat live modification of a mobile music application as a live performance, in which remote programmers can change the nature of the mobile musical instrument being played by a performer. Using the controlled namespace, code execution can be delegated to another remote programmer by sharing a function, so that one can synchronise the timing of code updates. The performer on the device can also control when a programmer’s code executes via a “run” button on the screen that triggers the code update on the instrument.(7)
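The controlled merging of per-collaborator namespaces can be sketched as follows. This Python is a hypothetical illustration of the policy described above (private namespaces, explicit sharing, conflicts reported rather than silently overwritten, and chat-style notices), not the editor’s actual implementation; all class, method, and user names are invented for the example.

```python
class SharedRuntime:
    """Hypothetical sketch: a private namespace per collaborator plus one shared namespace."""

    def __init__(self) -> None:
        self.shared: dict[str, object] = {}
        self.private: dict[str, dict[str, object]] = {}
        self.chat: list[str] = []  # notices, similar to the live chat announcements

    def define(self, user: str, name: str, value: object) -> None:
        """Running code binds names only in the collaborator's own namespace."""
        self.private.setdefault(user, {})[name] = value

    def share(self, user: str, name: str) -> bool:
        """Publish a private name; refuse, keeping the shared value, on a naming conflict."""
        value = self.private[user][name]
        if name in self.shared and self.shared[name] != value:
            self.chat.append(f"conflict: {user} cannot share '{name}'")
            return False
        self.shared[name] = value
        self.chat.append(f"{user} shared '{name}'")
        return True


rt = SharedRuntime()
rt.define("alice", "pitch", 440)
rt.define("bob", "pitch", 220)    # no clash yet: separate namespaces
print(rt.share("alice", "pitch"))  # True
print(rt.share("bob", "pitch"))    # False
print(rt.shared["pitch"])          # 440
```

Refusing the second merge, instead of letting the last writer win, is what keeps naming and state conflicts explicit for the collaborators to resolve.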

9 Validation of UrMus

The main platform for validation and feedback on UrMus has been provided in the context of a regular interdisciplinary course offered at the University of Michigan. Furthermore, the course was designed to end with a final public concert in which the students would present performances of their own creation.

9.1 Utility in Interdisciplinary Pedagogy

The course was offered at the University of Michigan five times over the last six years. It was first offered in 2009, when the first version of UrMus was completed and used in the course.

The course was designed for Engineering majors and entering graduate students with an interest in music, as well as Music majors with an interest in technology. The interdisciplinary nature of the course poses some challenges. Engineering students have typically completed a long sequence of programming courses when entering the course, while music students have completed at most an introductory programming course. Conversely, music students have extensive experience in performance, composition, and stage work, while many engineering students have no such prior education or experience.

Initially, the course was conceived with a bottom-up strategy: low-level technical features were introduced first and then built upon. For this reason, XCode-based projects in Objective-C++ formed the early part of the course, and UrMus was introduced only in later parts, when the class was transitioning towards preparing their final concert projects, as a higher-level abstraction on top of the basic functionality (such as basic audio streaming, or access to accelerometer and multi-touch sensor data). Observing the students’ progress with this approach, we found that low-level programming was too challenging for some Music students with limited programming background, while the approach appeared appropriate for Engineering students with substantial programming background.

Hence, for the second offering of the course in 2010, we restructured it to use UrMus early on, in the hope of addressing these observed challenges. Rather than a bottom-up approach, we developed a top-down approach, in which very high-level basic patching in UrMus’s default patching interface formed the early projects, followed by interface and instrument design in Lua. In the later parts of the course, a technology track gave students opportunities to engage in low-level Objective-C++ programming.

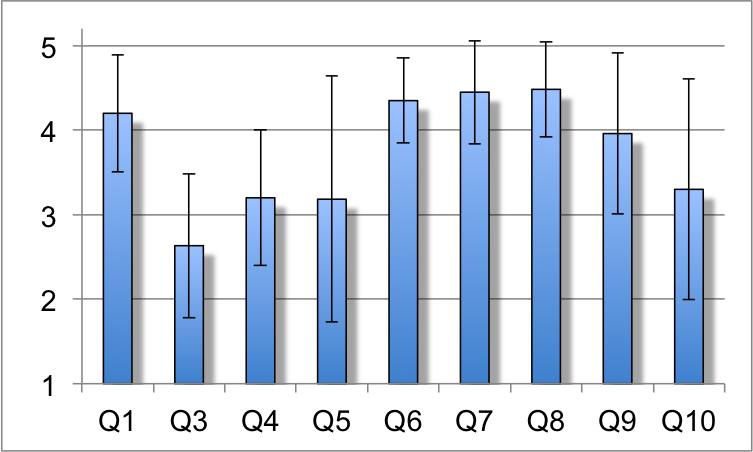

To assess the impact of these adaptations, we sought structured feedback. During the last week of the second course offering in 2010, we collected questionnaire data that probed the students’ views on the interdisciplinary integration of the course (Essl, 2015). Six students answered the questionnaire voluntarily and anonymously. One of the six students omitted the questions on the second page (Q9–11). Answers were indicated graphically on a continuous extension of a five-point Likert scale with labelled points (far left: Strongly Disagree; far right: Strongly Agree; centre: Neutral). However, students were able to mark positions between Likert points on the line. In post-survey evaluation, we measured marker positions with an accuracy of one-tenth of a Likert level (i.e., 3.1 was a possible score).

Here we present all questions except the two (Q2 and Q11) that related to the mobile hardware loan program used in the course. The remaining survey questions were: Q1 The integration of programming/technology and creativity worked for me, Q3 The learning curve for the programming aspects was too hard, Q4 The learning curve for the programming aspects was too easy, Q5 I had extensive programming experience prior to the course, Q6 I learned many aspects of designing mobile music instruments, Q7 I learned how to think about mobile technologies in new ways, Q8 I learned about new performance practices, Q9 I wanted more programming, Q10 I wanted more music performance. The average scores and standard deviations are depicted in Fig. 10.

Q5 was designed to capture the programming background of the population. The answers (avg=3.18, stdv=1.46) reveal a wide spread of prior programming background, from no prior programming experience to extensive programming knowledge. This is consistent with the student population, which consisted of a mix of Music and Engineering majors. Overall (Q1), students reported that the integration of programming/technology and creativity worked for them (avg=4.2, stdv=0.69). Tuning the learning curve with respect to programming was a major motivation for the change; hence, several questions probed this aspect. Q3 and Q4 probed whether students felt overly challenged or insufficiently challenged. On average, students slightly disagreed that the programming was too hard (Q3 avg=2.63, stdv=0.85) and were close to neutral on whether it was too easy (Q4 avg=3.2, stdv=0.8). Both averages are close to neutral, suggesting that a sensible middle ground was achieved on average. Q6 and Q7 probed students’ perception of how much they learned about mobile musical instrument design and the creative use of mobile technologies. Responses were strongly positive from all students (Q6 avg=4.35, stdv=0.5; Q7 avg=4.45, stdv=0.61). Q8 probed the learning of new performance practices, and its answers were comparably positive (avg=4.48, stdv=0.57). The final two questions probed students’ preferences for additional content. In general, students desired more programming in the course (Q9 avg=3.96, stdv=0.95), while opinions about more music performance ranged widely from approval to disapproval (Q10 avg=3.3, stdv=1.3). At the time, we interpreted the questionnaire results as justifying the approach and validating the utility of UrMus’s layered structure for supporting mixed student populations with widely differing programming expertise.
In the following years, the course successfully continued to accommodate student groups with diverse educational backgrounds, and in the final concerts students presented work combining engineering and artistic vision.

9.2 Exploration, Expressiveness and Collaboration in Student Concerts

To get a sense of the degree of creativity expressed in UrMus we report here a post-hoc analysis of all pieces presented at five final class concerts performed over a period of six years.

For the purpose of this analysis, we follow a subset of a taxonomy of creativity recently proposed by Cherry & Latulipe (2014, pp. 1–25) and incorporated in their Creativity Support Index (CSI). The taxonomy consists of six dimensions, of which we use three: exploration, expressiveness, and collaboration. We chose these dimensions because they are the most suitable for post-hoc analysis. The three excluded dimensions of the CSI (Enjoyment, Immersion, and Results Worth Effort) require subjective evaluation, either by the user or by an observer. We chose a subset of the CSI dimensions to allow some degree of comparability with other work that may use the CSI.

It is important to note that while numerous approaches to assessing creativity have been proposed, a persistent problem remains: the difficulty related to the ill-defined nature of creativity itself (Cherry & Latulipe, 2014, pp. 1–25). Definitions of creativity have involved external observation (Boden, 2004) or internal self-evaluation (Cherry & Latulipe, 2014, pp. 1–25). The difficulty and shortcomings of a range of evaluation techniques were reviewed by Hewett et al. (2005).

We interpret the use of a wide range of UrMus capabilities in the concert pieces as an indication that the system supports exploration. Under this assumption, we can derive quantitative data by analysing the UrMus source code that the students wrote for their concert performances. UrMus provides access to a wide range of input, output, and networking capabilities. We analysed the pieces for output to speaker, screen, and external projection; for input via microphone, multi-touch, accelerometer, gyroscope, magnetic field sensor, and camera; and for the use of networking. GPS sensing, while supported in UrMus, was excluded from the analysis, as all pieces were conceived for indoor concert hall performances, where GPS signals are not available. Some sensors were not available in early iOS devices: first-generation iPod touches as well as first-generation iPads did not contain gyroscopes, magnetic sensors, and cameras. These sensors were only used after 2011, when students had access to devices with these capabilities. Also, some pieces used external hardware for part of their rendering (such as OSC-driven synthesis on a laptop). Our analysis includes only capabilities directly supported by UrMus; for example, speaker output produced by a laptop that interfaces with UrMus over the network is not counted.

A total of 37 pieces were composed and performed at five final class concerts. Four pieces did not use UrMus, and therefore will not be considered further. Of the remaining 33 pieces, 30 used speaker output (3 remaining pieces used laptop based speaker output), 30 used screen output, 11 used projection driven by the mobile devices (7 more pieces included projection from laptops), 4 pieces used microphone input, 30 pieces used multi-touch, 20 pieces used accelerometers, 1 piece used the gyroscope sensor, 4 pieces used the camera, and 11 pieces used networking. No piece used the magnetic field sensor. Hence nine of ten analysed capabilities were used in pieces, suggesting that many of the capabilities in UrMus were accessible to exploration.
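The capability tally above can be re-checked mechanically. The counts in this short Python sketch are exactly the ones reported in the text for the 33 pieces that used UrMus; the dictionary keys are descriptive labels chosen for the example.

```python
# Number of UrMus pieces (out of 33) using each analysed capability, as reported above.
usage = {
    "speaker": 30, "screen": 30, "projection": 11, "microphone": 4,
    "multi-touch": 30, "accelerometer": 20, "gyroscope": 1,
    "magnetic field": 0, "camera": 4, "networking": 11,
}
analysed_pieces = 37 - 4  # all pieces minus those that did not use UrMus
used = [name for name, count in usage.items() if count > 0]
print(analysed_pieces, f"{len(used)} of {len(usage)} capabilities used")
# 33 9 of 10 capabilities used
```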

The video recordings of the concerts provide evidence for the range of artistic expression enabled by UrMus. We take a wide range of artistic output to be indicative of the expressive range of UrMus. The full list of pieces including links to the videos can be found in Appendix A.

Musically, we find a wide range of core musical structures, styles, performance characteristics, and forms of audience involvement in the 33 pieces that used UrMus. Pieces range from noise and glitch (“Controlling the Conversation”) to continuous pitch variation without a tonal centre (“Shepard’s Escher”) to manipulation of live recorded voice (“Self-Spoken”). Numerous pieces used natural sounds without modification. Examples of diatonic pieces include “Mobile Color Organ” and “Prosperity of Transience”. Stylistically, pieces range from classical (“In memoriam: Oror – Armenian Lullaby” and “Unsung A Capella”) to dance music (“Spinning”). In a number of pieces, motion played an integral role related to the function of the mobile device in performance. Pieces used the visual dimension of mobile devices in motion (for example, “The Infinitesimal Ballad of Roy G. Biv” and “Phantasm”), dance-like motion (“There is no I in Guitar” and “Reflection on Flag and Electronics”), and various forms of musical gesture (for example, “T’ai Chi Theremin” and “Himalayan Singing Bowl”, among many others). Pieces involved audience participation via live microphone recordings (“Self-Spoken”) or mobile flashlights (“Swarm”). One piece used mobile video projection (“Super Mario”). One piece involved live-coding a mobile musical instrument during the performance (“Live Coding”). A range of pieces included narrative or theatrical components (e.g., “Mr. Noisy”, “Distress Signal”). Some pieces deconstructed the notion of traditional musical instruments (“There is no I in Guitar” and “Twinkle Twinkle Smartphone Star”) or engaged with musical notation (“Mobile Color Organ” and “Falling”). Numerous metaphors were used to create new notions of musical instruments, such as the spotlight as a performance gesture (“Glow-Music”).

The range of metaphors, styles, performance practices, and instrument designs realised in the five final class concerts confirms that the students creatively pushed the boundaries of traditional musical performance and that this form of expression was enabled by the meta-environment provided by UrMus.

Finally, we document the size of collaborative groups as evidence of the environment’s support for collaboration. Students were encouraged to form groups, although some expressed a strong preference for creating their own individual performance. Collaboration occurred in two forms. The first concerned the creation of the performances. Here the students “composed” the musical instrument in UrMus along with the rest of the performance concept. This form is the most relevant to collaborative support of the creative process within UrMus. Analysing the credited composers of the 33 pieces, we find an average of 2.85 collaborators per piece. Five pieces were composed by a single student, and the largest group consisted of six composers.
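As a consistency check, assume that the reported average is the total number of composer credits $N$ divided by the 33 pieces and rounded to two decimals. Under that assumption, the figure of 2.85 determines the total number of credits:

$$
\bar{n} = \frac{N}{33} \approx 2.85 \;\Rightarrow\; N \approx 2.85 \times 33 = 94.05, \qquad \frac{93}{33} \approx 2.82,\quad \frac{94}{33} \approx 2.85,\quad \frac{95}{33} \approx 2.88
$$

The only integer consistent with the reported average is therefore $N = 94$. Excluding the five single-composer pieces, the remaining 28 pieces account for $94 - 5 = 89$ credits, or about $89/28 \approx 3.18$ composers per multi-composer piece.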

The second type of collaboration occurred during the actual performances. Here we are interested in the strategies that the students’ instrument designs used to facilitate such collaboration. Numerous non-technological strategies, such as conducting and rehearsals, were employed to enable ensemble play. Additionally, a number of pieces used networking to communicate between performers and to enable collaborative performance. For example, the piece “JalGalBandi” used network communication on the performance interface to co-ordinate handovers among individual performers. In the piece “There is no I in Guitar”, the note positions of the individual “guitar-finger” performers were communicated to the performance interface of the “guitar strumming” performer, who could then assess whether the chording was correct. The piece “Glow-Music” explored the notion of conducting by using a flashlight to perform the intensity of the sound. The roles of conductor and performers are thus blurred in the piece, leading to new forms of collaborative organisation of performances. These kinds of creative coordination strategies were enabled by the capabilities of UrMus.

Following the exploration, expression, and collaboration categories of the CSI, we see evidence of three things. First, UrMus supports broad exploration of its technical possibilities in the design of mobile musical instruments. Second, students using UrMus as a platform achieve a wide range of musical expression. Third, UrMus can be used by collaborative teams.

9.2.1 Student Feedback within the Design Cycle

In their pieces, students pursued their own artistic visions. Observing whether these visions could be realised in UrMus provided important feedback on how well UrMus serves its intended purpose of openly supporting creativity on mobile devices. This interaction was also part of the development cycle of UrMus, and some of its design decisions were motivated by observations made in class. For example, once network support was added, it became clear that configuring the network was a substantial obstacle. For this reason, support for zeroconf discovery (see Section 3.3) was added to provide a clean, high-level solution that is easy to grasp and use. The simplification of the Lua language with respect to controlling dataflow connections (see Section 7) is another change that arose from observed student struggles.

9.3 Other Audiences

UrMus was also used in a guest lecture on computational art in the School of Art & Design, for an audience of visual art and design majors. It was used in a workshop at the NIME conference in Oslo, Norway, and at Harvestworks in the context of the ICMC conference in New York City, for audiences of working academics and graduate students. It has also been used in a summer workshop for high school seniors interested in the University of Michigan’s Performing Arts Technology program. For this workshop, we limited the scope to UrMus’s default interface. Participants built simple yet interesting interactions, which we then used to explain some basics of sound synthesis. UrMus has also been used in outreach activities targeting non-college users, such as a bring-your-children-to-work event (see Fig. 11).

10 Future Developments

Music systems rarely reach a stable state, and extensions are frequent. This is a natural consequence of ever-evolving technological possibilities that invite artistic exploitation. Some of these technological trends are already a reality but have not yet been fully incorporated into the repertoire of mobile music practice.

10.1 Power of the Cloud

Cloud computing and the ever-increasing ability to access large amounts of data and media online offer attractive potential for networked music performance (Hindle, 2014). We recently presented two networked live programming practices for music that use cloud services for data transfer. The first is Crowd in C[loud], an audience participation piece (Lee et al., 2016). The second is SuperCopair, a shared editor for SuperCollider (de Carvalho et al., 2015). Although these two works were developed outside UrMus, integrating such services into UrMus would provide potential for large-scale networked performance and enable remote collaboration. Integration of cloud computing is therefore planned for the immediate future.

10.2 Web, WebKit or Custom?

An interesting current trend in music environments is the use of web-based technologies. The distributed nature and cross-platform abstraction of the web are very appealing (Wyse & Subramanian, 2013, pp. 10–23) and have sparked substantial efforts to leverage the web for audio and musical systems (Roberts & Kuchera-Morin, 2012; Schnell et al., 2015; Taylor & Allison, 2015; Wyse, 2014, pp. 1065–1068). The web has also been used to prototype and upload interfaces for mobile music performance with the web-based NexusUI interface building system (Taylor et al., 2014, pp. 257–262).

Given that mobile devices offer web browsers, web music platforms can also be seen as mobile music platforms. Currently, mobile devices lack the processing power to run complex music systems in a browser. The web's immediate advantage, however, is easy cross-platform portability. Given the progressive advances in mobile CPU performance, one can expect this trade-off to eventually favour portability. The web serves as an agreed-upon standard that is realised on many operating systems. UrMus does not currently get this benefit automatically: differences between operating systems have to be accounted for in order to create UrMus’ own abstraction at the Lua level. The current disadvantage of web-based technology is performance, whereas performance is one of UrMus’ strengths. An interesting intermediate solution is to incorporate aspects of WebKit, the browser engine from which many modern web browsers derive, into UrMus. This would make web-conformant technologies available to UrMus while retaining the high performance of its many specialised modules that are not currently available on a web platform. Another alternative is to port UrMus to JavaScript, WebAudio, and related technologies. Finally, the current architecture may remain the most viable approach, at the cost of ongoing cross-platform development. We plan to build quick pilot implementations of each option to make an informed assessment of which strategy has the best long-term prospects.

11 Conclusions

In this article, we discussed six years of design and development of UrMus, a meta-environment for mobile music. UrMus does not provide a single representation or interaction paradigm. Instead, it is designed so that multiple representations can be realised democratically on the mobile device itself. A layered design allows users with various degrees of computational expertise to develop mobile music performances. Exposing the device’s hardware capabilities at a high level allows programming that is both accessible and flexible. UrMus supports a range of networking strategies and has explicit support for the emerging field of collaborative mobile live coding. UrMus is available at: http://UrMus.eecs.umich.edu/.

12 Acknowledgements

The authors would like to thank everybody who contributed to UrMus: Bryan Summersett (first web-editor, Lua libraries), Jongwook Kim (first Android port), Alexander Müller (interface design), Patrick O’Keefe (camera API and tutorials), Nate Derbinsky (Soar integration, API and examples), Qi Yang (multiple representations studies and their realisation in UrMus), as well as all the students in numerous Michigan courses using UrMus who provided invaluable feedback and created many exciting new performance interfaces and systems in UrMus! We also gratefully acknowledge insightful comments and corrections provided by two anonymous reviewers, which significantly improved the manuscript.

References

- Allison, J. & Dell, C. (2012). Aural: A mobile interactive system for geo-locative audio synthesis. In Proceedings of the International Conference on New Interfaces for Musical Expression. Ann Arbor, MI: University of Michigan Press.

- Axford, E.C. (2015). Music apps for musicians and music teachers. Rowman & Littlefield.

- Behrendt, F. (2005). Handymusik: Klangkunst und “mobile devices”. Epos. Available online at: www.epos.uos.de/music/templates/buch.php?id=57.

- Boden, M.A. (2004). The creative mind: Myths and mechanisms. Oxford: Routledge.

- Boulanger, R. (2000). The Csound book: Perspectives in software synthesis, sound design, signal processing, and programming. Cambridge, MA: MIT Press.

- Brinkmann, P., Kirn, P., Lawler, R., McCormick, C., Roth, M. & Steiner, H.-C. (2011). Embedding Pure Data with libpd. In Proceedings of the Pure Data Convention.

- Bryan, N.J., Herrera, J., Oh, J. & Wang, G. (2010). MoMu: A mobile music toolkit. In Proceedings of the International Conference on New Interfaces for Musical Expression (pp. 174–177). Sydney, Australia.

- Caramiaux, B. & Tanaka, A. (2013). Machine learning of musical gestures. In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME 2013). Seoul, South Korea.

- Cherry, E. & Latulipe, C. (2014). Quantifying the creativity support of digital tools through the creativity support index. ACM Trans. Comput.-Hum. Interact., 21(4), 21:1–21:25.

- Collins, N. (2011). Live coding of consequence. Leonardo, 44(3), 207–211.

- Cook, P.R. & Scavone, G.P. (1999). The Synthesis ToolKit (STK). In Proceedings of the International Computer Music Conference (ICMC).

- Dannenberg, R. (1997a). The implementation of Nyquist, a sound synthesis language. Computer Music Journal, 21(3), 71–82.

- Dannenberg, R. (1997b). Machine tongues XIX: Nyquist, a language for composition and sound synthesis. Computer Music Journal, 21(3), 50–60.

- Dannenberg, R., McAvinney, P. & Rubine, D. (1986). Arctic: A functional approach to real-time control. Computer Music Journal, 10(4), 67–78.

- de Carvalho, A., Lee, S.W. & Essl, G. (2015). SuperCopair: Collaborative live coding on SuperCollider through the cloud. In Proceedings of the International Conference on Live Coding (ICLC). Leeds, United Kingdom.

- Derbinsky, N. & Essl, G. (2011). Cognitive architecture in mobile music interactions. In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME). Oslo.

- Derbinsky, N. & Essl, G. (2012). Exploring reinforcement learning for mobile percussive collaboration. In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME). Ann Arbor.

- Dubus, G., Hansen, K.F. & Bresin, R. (2012). An overview of sound and music applications for Android available on the market. In Serafin, S. (Ed.), Proceedings of the 9th Sound and Music Computing Conference (pp. 541–546). Berlin: Logos Verlag.

- Eaglestone, B., Ford, N., Holdridge, P. & Carter, J. (2007). Are cognitive styles an important factor in the design of electroacoustic music software? In Proceedings of the 2007 International Computer Music Conference (pp. 466–473). International Computer Music Association.

- Essl, G. (2009). SpeedDial: Rapid and on-the-fly mapping of mobile phone instruments. In Proceedings of the International Conference on New Interfaces for Musical Expression. Pittsburgh.

- Essl, G. (2010a). The mobile phone ensemble as classroom. In Proceedings of the International Computer Music Conference (ICMC). Stony Brook/New York.

- Essl, G. (2010b). UrMus: An environment for mobile instrument design and performance. In Proceedings of the International Computer Music Conference (ICMC). Stony Brook/New York.

- Essl, G. (2010c). UrSound: Live patching of audio and multimedia using a multi-rate normed single-stream data-flow engine. In Proceedings of the International Computer Music Conference (ICMC). Stony Brook/New York.