1 Introduction

Music composition can be a very complex process. Previous studies suggest that there are as many different creative processes in composing music as there are composers in the world (Collins, 2016; DeMain, 2004). Based on these previous studies and observations, a logical starting point for further investigation of songwriting processes might involve a model that reflects the actual practices of various methods of composing. There are many styles and traditions of lyric-based storytelling, such as ballads, romances, blues, mornas, country music and, most recently, rap and hip-hop, all of which could make for excellent case studies. While these styles share many common traits with each other (as well as with past and present traditions), there is one style of songwriting in particular that is a peculiar method of storytelling with very distinctive features: Portuguese fado. Fado is considered Intangible Cultural Heritage by UNESCO,(1) providing further motivation for pursuing this research within our own interests (songwriting), while simultaneously aiding in the preservation and memory of this performance practice.

In this article we will briefly present and characterise the practice of Portuguese fado. The description presented herein is based on previous ethnomusicological and historiographical studies. These studies are complemented with additional research conducted on a representative collection of 100 encoded musical transcriptions. Following the characterisation of fado, we will proceed with a brief discussion about the current state and practice of algorithmic composition. We will refer to several approaches, focusing on the type of algorithmic compositional processes being modelled. The previous observations and characterisation of the fado practice and the analyses of the collection of transcriptions will shape and highlight the various processes that inform our own model. We present a generative model that is able to generate instrumental music similar in style and structure to the music of the representative sample collection, starting from some minimal structural features of the characteristic lyrical shape, which constrain the musical materials that follow. Such a model requires that our methodology be a hierarchical linear generative process that translates into a series of constraint-satisfaction problems resorting to mixed techniques. We describe in detail how we implemented our model as a system built using the Symbolic Composer software. Our system consists of a number of modules used to deal with specific musical parameters, from the general structure of the new compositions to melody, harmony, instrumentation and performance, leading to the generation of MIDI files. We propose a method for creating sound files from the generated MIDI files. Subsequently, we evaluate the output both with an automatic test using a supervised classification system and with human feedback collected in the context of a quasi-Turing test.
We conclude that the output of our system approaches the one from the representative collection and maintains a relative pleasantness to human listeners. However, there is room for improvement. We realise that a module dealing with full lyrical content (including coherent semantic content) might be crucial for optimal results, that the original collection should be enlarged in order to collect more data and that further parametrisation of human performance is needed.

2 Fado

Several previous studies show how the concept and practice of fado historically emerged, what it represents, and how it has changed. These studies have mainly followed the lines of ethnomusicology, resorting to ethnographic and historiographic methods (Carvalho, 1994; Castelo-Branco, 1998; Côrte-Real, 2000; Gray, 2013; Nery, 2004, 2010a, 2010b; Videira, 2015). For a more detailed survey see Holton (2006). From these various studies one can conclude that fado tells a sorrowful tale, a narrative emerging from the life experience of the people, using the vocabulary and lexical field appropriate to be shared by the people who relate to the story. This narrative is then embodied and communicated by means of several gestural codes. The text shapes the form of the music. The structure of the narratives, which are usually organised in stanzas of equal length, translates into strophic musical forms repeated over and over, while more complex texts typically generate more complex forms. Since most people have limited musical knowledge, the most common practice is to play very simple musical patterns (often just arpeggios, other times simple stock figurations and repetitive ostinati), maximising the number of open strings and the easiest fingering positions, using the simplest time signature. Therefore, a binary pulse and a chord progression alternating tonic and dominant is vastly preferred over any more complex variation. The first description of the music that accompanied fado practice appeared in the musical dictionary of Ernesto Vieira:

A section of eight bars in binary tempo, divided into two equal and symmetric parts, with two melodic contours each; preferably in the minor mode, although many times it modulates into the major, carrying the same melody or another; harmony built on an arpeggio in sixteenth-notes using only alternating tonic and dominant chords, each lasting two bars. (Vieira, 1890, p. 238)
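Vieira's description is concrete enough to be written down as a small data-generating sketch. The Python fragment below is only an illustration of that description, not part of the system discussed later: the key of A minor, the 2/4 metre, the MIDI voicings and the cycling arpeggio shape are all assumptions introduced here.

```python
# Hypothetical sketch of the accompaniment template described by Vieira (1890).
# Assumptions not in the source: key of A minor, 2/4 metre, cycling arpeggio.
SIXTEENTHS_PER_BAR = 8  # a 2/4 bar holds 2 beats x 4 sixteenth-notes

CHORDS = {
    "tonic": [57, 60, 64],     # A3, C4, E4 (A minor triad, MIDI note numbers)
    "dominant": [56, 59, 64],  # G#3, B3, E4 (E major triad)
}


def vieira_progression(bars=8, bars_per_chord=2):
    """Return one chord label per bar, alternating tonic and dominant."""
    labels = ("tonic", "dominant")
    return [labels[(bar // bars_per_chord) % 2] for bar in range(bars)]


def arpeggiate(chord_label):
    """Fill one bar with sixteenth-notes cycling through the chord tones."""
    tones = CHORDS[chord_label]
    return [tones[i % len(tones)] for i in range(SIXTEENTHS_PER_BAR)]


progression = vieira_progression()
accompaniment = [arpeggiate(label) for label in progression]
```

Here `progression` holds eight chord labels, two bars of tonic followed by two bars of dominant, twice over, and `accompaniment` holds eight bars of eight sixteenth-note pitches each.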

If skilled performers are available, then the degree of complexity and inventiveness of a fado tends to increase accordingly. These conditions constrain the way the melody is shaped. In fado practice, the combined ability to manipulate, not only the rhythm of a melody, but also the pitch, is called estilar (styling). Styling is a kind of improvisation, and is similar in practice to that found in other musical styles that rely heavily on improvisation, such as Jazz (Gray, 2013). The rhythm of the melody is dependent both on the prosody of the text and the time signature chosen, while the pitches of the melody are constrained by the underlying chord progression presented in the instrumental accompaniment. The tempo, dynamics and articulations of the same melody are constrained by the emotional (semantic) content of the lyrics and by the physical characteristics of the fadistas (performers of fado). The performer’s body shape, the way he/she positions him/herself, his/her age and health condition all affect and determine the acoustic and phonatory characteristics of the voice (Mendes et al., 2013), thus shaping most of the timbral characteristics of the melody and how they will be perceived by the audience. These various physical and musical elements combine to create a complex network of relationships and interdependencies.

The available musical instruments determine the musical arrangement. The most common ensemble since the twentieth century consists of one classical six-string nylon or steel guitar (named viola, in European Portuguese) and one Portuguese Guitar (a pear-shaped twelve-string lute, called guitarra). The viola provides the pulse and harmony, guiding the singer. The guitarra complements the voice using stock melodic formulas. Armando Augusto Freire, “Armandinho” (1891–1946), significantly renewed the accompaniment practice by introducing guitarra countermelodies between the sung melodic lines. These were so characteristic that in many cases they were appropriated and integrated by the community into the fados where they were first performed (Nery, 2010b, pp. 131–132; Brito, 1994; Freitas, 1973). The timbral uniqueness of these guitarra gestures, absent from other musical practices and styles, makes them a key feature in the characterisation of fado. Several written and iconographic sources from the nineteenth century show fadistas accompanying themselves, often with a guitarra, playing arpeggiated chords. Sources also mention other string instruments, the accordion or the piano (Cabral, 1982; Castelo-Branco, 1994; Cristo, 2014; Nery, 2004; Sá de Oliveira, 2014). We speculate that one would play with whatever was available in the given context. Due to the limitations of recording technologies in the early twentieth century, fados were often recorded using pianos and wind or brass instruments (Losa, 2013). The instrumental group’s function is to provide a foundation on which the fadista can create, and the spatial organisation of instruments in performance reflects this hierarchical organisation. Usually the instrumentalists are in the background; they are seldom mentioned and their interaction with the audience is minimal (Côrte-Real, 1991, pp. 30–35).
Several contemporary examples of live fado performance in the context of tertúlia (“informal gathering”) can be found in the YouTube channel “Tertúlia”.(2)

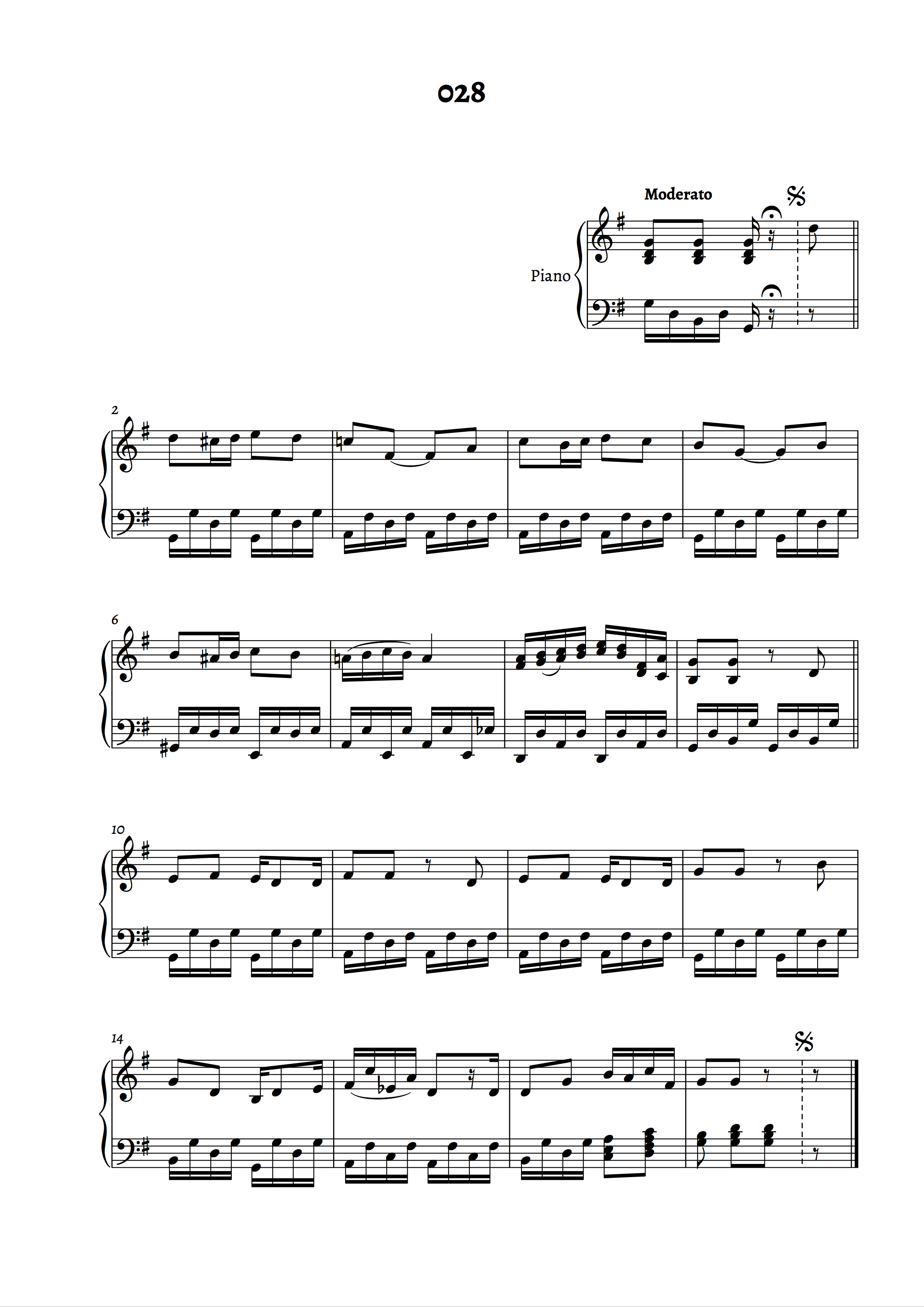

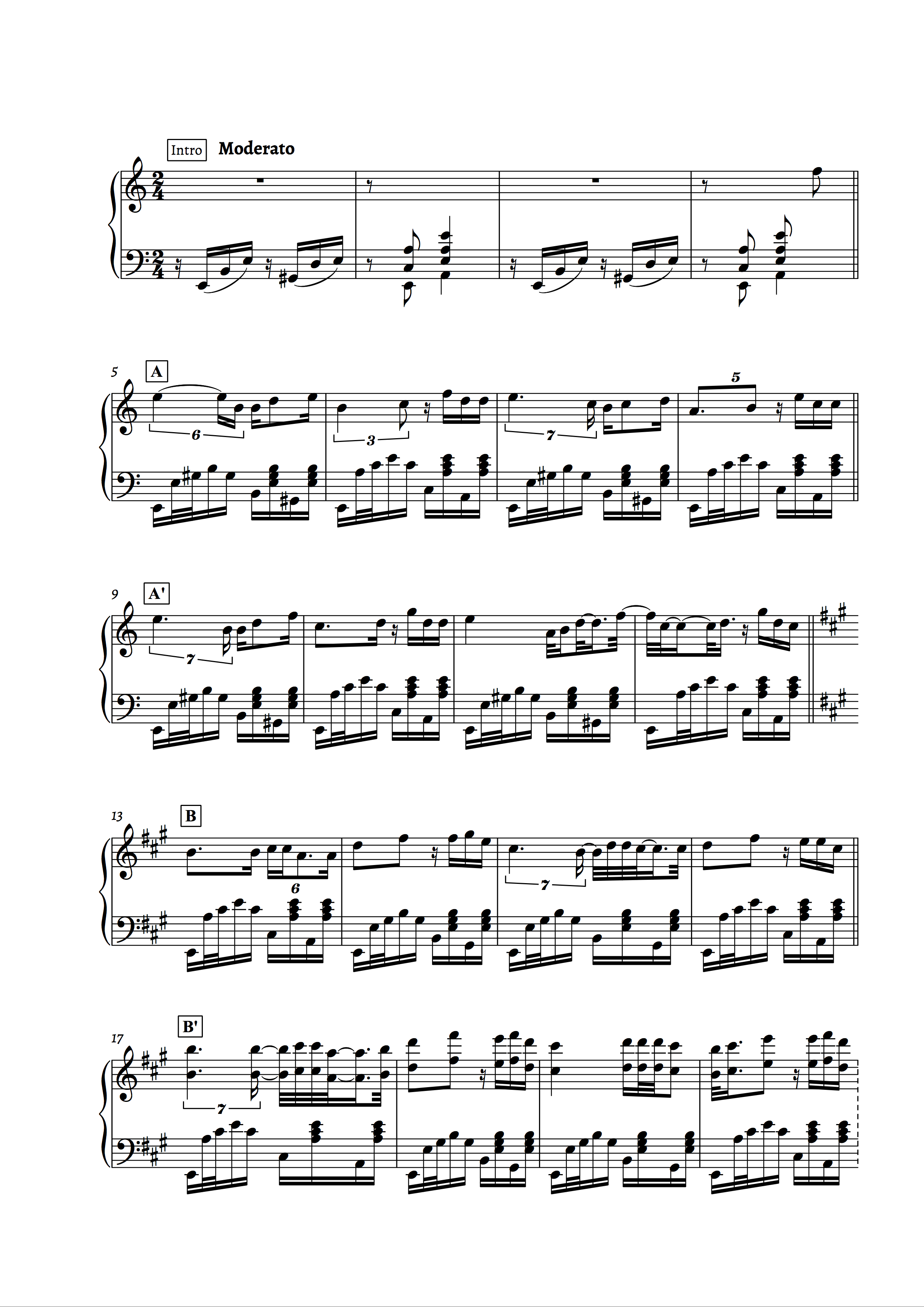

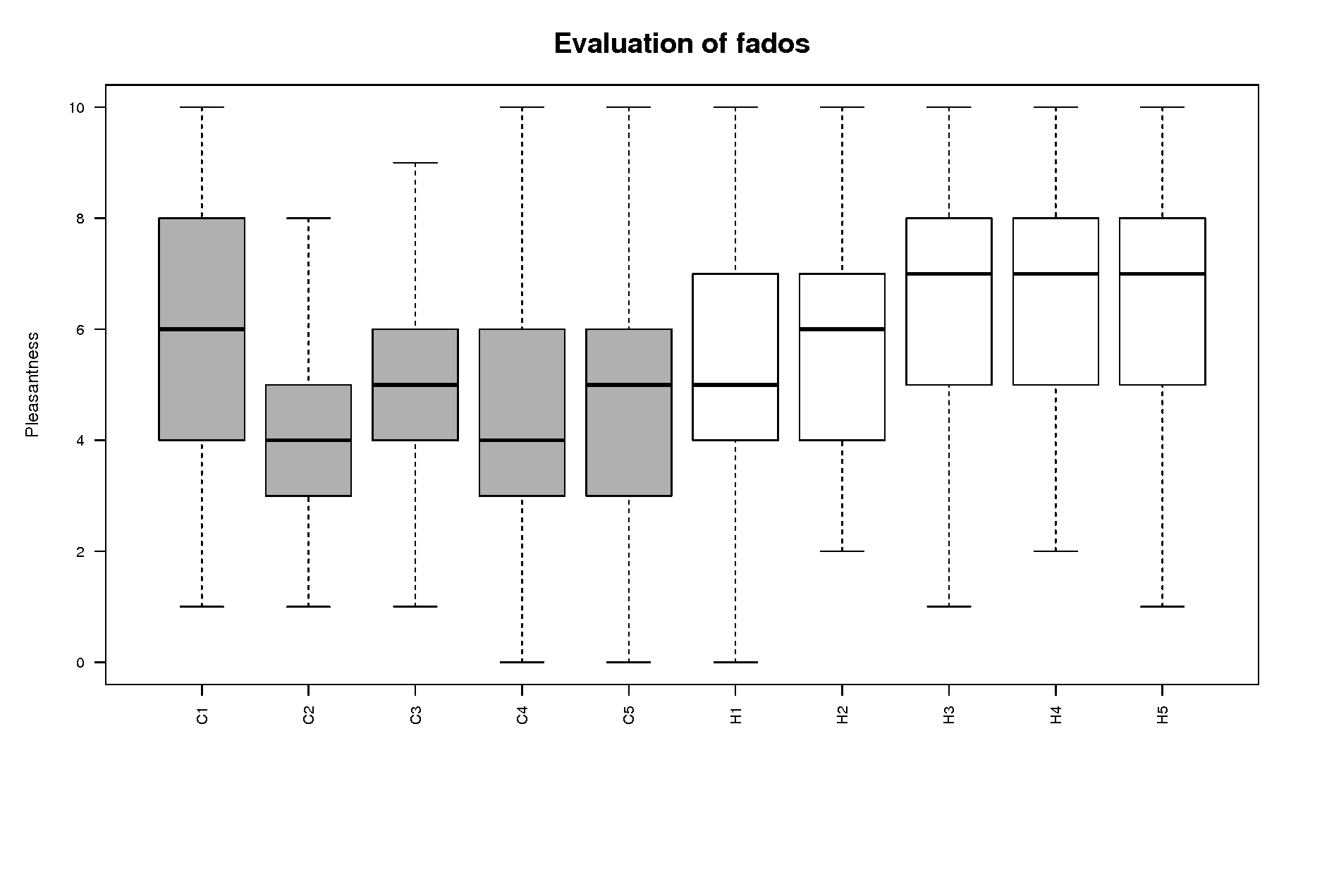

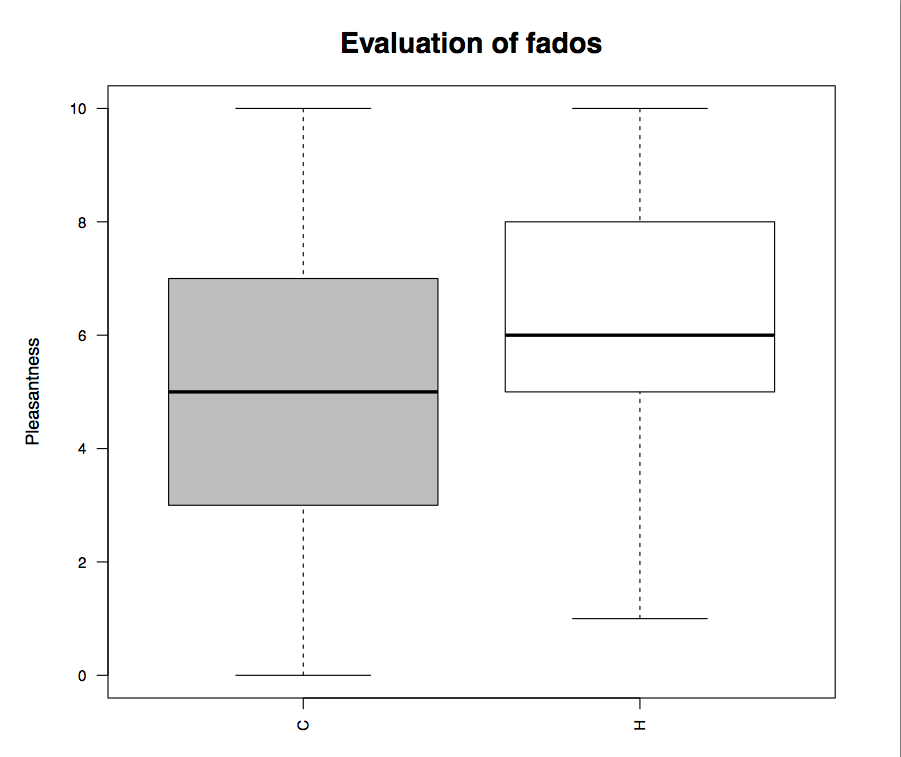

We have complemented the ethnographic and historiographic analyses discussed above with the use of computational musicology on empirical data. Since fado performance predates the era of sound recordings, the study of symbolic notation is required to access the music of the earlier periods. There were no previously encoded transcriptions of fado available for computational research. Therefore, we compiled a representative collection of 100 samples to perform our initial research. Given time and budget constraints, we privileged the older sources that were already photographed. The Cancioneiro de Músicas Populares, compiled by César das Neves and Gualdim Campos (Neves & Campos, 1893), contains several traditional and popular songs transcribed during the second half of the nineteenth century, 48 of which are categorised as fados by the collectors, either in the title or in the footnotes. This set was accompanied by a transcription of Fado do Marinheiro, provided by Pimentel and claimed by him to be the oldest fado available (Pimentel, 1904, p. 35). We have also had access to the collection of musical scores from the Theatre Museum in Lisbon.(3) The first 51 scores categorised as fados were selected in order to reach the desired sample number of 100 representative works. We estimate that there are at least 200 more works categorised as fados to be found in the collection, and several others in other collections. Future work will encode more scores categorised as fados and enlarge the database. These transcriptions represent the practice c. 1840–1970, and one example is shown in Fig. 1.

These transcriptions are piano reductions (adapted for the domestic market) of both the instrumental accompaniment and the vocal line, the vocal line being reduced to an instrumental version. Most of the transcriptions confirm the model described by Ernesto Vieira. The guitarra countermelodies and ornamental motifs are largely absent from the musical scores of the nineteenth century and from the earlier recordings; however, they become rather common in later examples. This sample collection has been edited and is currently available as a digital database.(4) This database consists of the musical scores, MIDI files, and analytical, formal and philological commentaries, as well as slots for relevant information (sources, designations, date(s), authorship(s)) for each fado. The creation of this new digital object is relevant for archival and patrimonial purposes. We have applied music information retrieval techniques, followed by statistical procedures, to the sample collection in order to identify patterns and rules that shape its characteristics. This allowed us to retrieve information regarding form, harmonic progressions and rhythmic patterns, among many other features (Videira, 2015).

3 Algorithmic Composition

During the most recent decade, the references and discussions around the core problems of the field of algorithmic composition grew exponentially. The main references for what constitutes the field of algorithmic composition and its primary achievements rapidly become outdated or redundant. The research and books by Curtis Roads (1996), Eduardo Reck Miranda (2001) and David Cope (1996; 2000; 2004; 2005; 2008) will always be historically important and full of relevant information, taking into account their historical context, but Nierhaus’ (2009; 2015) books on algorithmic composition provide new updates to the field and are quickly becoming references as modern state-of-the-art surveys. Jose David Fernández and Francisco Vico (Fernández & Vico, 2013) also present a very complete and up-to-date survey for the field, and therefore we will use their paper as a point of departure to present a brief overview.

The use of algorithms in music composition precedes computers by hundreds of years. However, Fernández and Vico use a more restricted definition and present algorithmic composition as a subset of a larger area: computational creativity. They claim that the problem of computational creativity in and of itself is still difficult to define and formalise. It can essentially be said to be “the computational analysis and/or synthesis of works of art, in a partially or fully automated way” (Fernández & Vico, 2013, p. 513). Fernández and Vico also mention that the most common approaches and problems algorithmic composition had to solve are now systematised and well-defined.

Colton and Wiggins note that much of mainstream AI practice is characterised as “being within a problem solving paradigm: an intelligent task, that we desire to automate, is formulated as a particular type of problem to be solved. The type of reasoning/processing required to find solutions determines how the intelligent task will then be treated” (Colton & Wiggins, 2012, p. 22). On the other hand, “in Computational Creativity research, we prefer to work within an artifact generation paradigm, where the automation of an intelligent task is seen as an opportunity to produce something of cultural value” (Colton & Wiggins, 2012, p. 22). In their view, computational creativity research is a subfield of artificial intelligence research, which they define as “The philosophy, science and engineering of computational systems which, by taking on particular responsibilities, exhibit behaviors that unbiased observers would deem to be creative” (Colton & Wiggins, 2012, p. 21).

Although these visions seem to differ on whether the goal of modelling particular processes is to generate specific artefacts or whether artefacts will emerge as a consequence of such processes, they share the relevant idea that some processes are being automated or modelled. The kinds of processes used and how they are used seem, to us, a relevant starting point to survey the field.

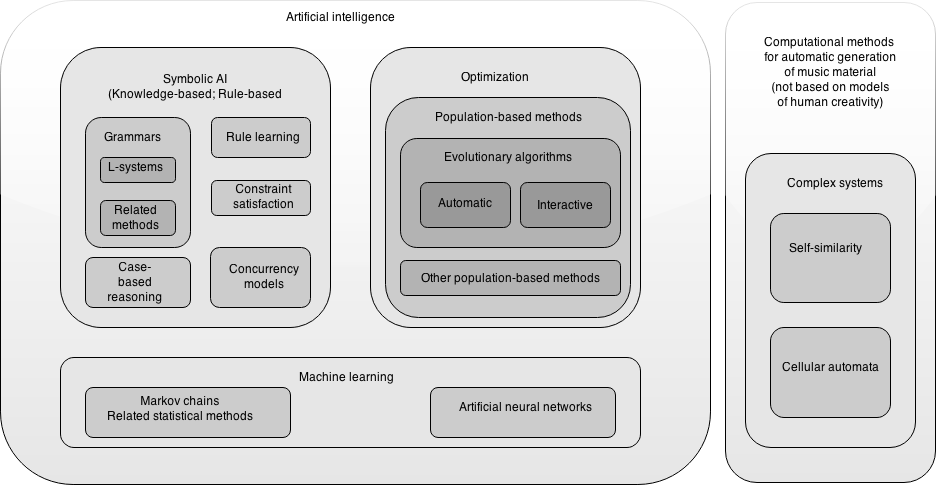

In the classification system Fernández and Vico adopted (Fig. 2) there are two primary areas representing two radically different approaches. Either one can observe how intelligent beings typically create and then model their behaviour or reasoning (artificial intelligence), or one can simply focus on getting good musical results even if a human would never be able to do it that way. These last approaches usually represent processes found in nature and are assumed to be the result of chaos or complex interactions without any intelligence behind them. Among these complex systems one can find self-similarity processes (fractals, for instance) and cellular automata.

Within artificial intelligence, one finds processes that intelligent beings use. Those are divided into three main areas: symbolic processes, optimisation and machine learning. Symbolic artificial intelligence relies mainly on modelling the process of creation by the use of simple rules. These rules usually represent the creation of symbols and their subsequent manipulation and transformation. These approaches depend on the idea that the creative processes can be described in formal terms and reduced to a theory. The problems must be well-defined and their boundaries established, as well as their constants, variables and sets of operations. In this case, the rules must be conveyed by the programmer, who obtained them through the previous observation and analysis of the processes involved.

Optimisation processes derive mainly from the idea that one can depart from very simple units or imperfect samples (members of a representative population) and combine, recombine and eventually transform them through successive trial-and-error operations. The strongest and fittest combinations are selected, while the weakest and most unsuitable ones are discarded, until results start to improve and eventually become optimal. These processes resemble the Darwinian idea of evolution.

Machine learning processes, on the other hand, focus on analysing the outcomes of previous creations. A representative sample is constituted, processed and analysed, and then new predictive or prescriptive rules are inferred by the machine. Using those rules the computer will then be able to generate new works.

Following the example of David Cope (Cope, 2005), we tried to teach the computer to compose as we ourselves would. In order to achieve that, we tried to learn how some people compose music and vocal sounds associated with fado, and then we tried to do it ourselves. We spent a long period learning and perfecting the craft of composing and producing music using computers and virtual instruments. We had to understand not only more analogical tasks, such as how to create melodies from lyrics having just a viola or a guitarra in our hands, but also the process of creating a score using a music notation program, and then of editing it in a DAW (Digital Audio Workstation), recording, mixing and mastering it, up to the point of obtaining a convincing sound result. Derived from all that experience, we now have a new conception of how to perform and complete the compositional process from scratch. If someone asks us to compose music for a fado, we have a way of doing it. And now we are teaching the computer the most effective way to replicate the way we do it.

By trying to imitate a style and creating an endless repertoire of songs, several goals are met: not only is a relevant problem being addressed (the formalisation of a specific style of composition process), but an attempt is also being made at creating something to fulfil a cultural and social need: the endless desire for music. At present there are numerous software applications based on algorithmic composition for people to try out and generate music at will, like PG Music Band-in-a-Box(5) or Dunn’s Artwonk,(6) as well as leisure and relaxation environments that generate music in real time with interactive components, not only on computers but also on smartphones, for instance Brian Eno’s Bloom or Trope(7) or the more academic ANTracks (Schulz et al., 2009).

4 The Model

Following the ideas of Brian Eno, it seemed to us that the most elegant model is the one that takes the least possible amount of information, extracts the maximum output from it and still retains the core ingredients of the process, that is, the necessary steps in composing a song. That translates into a generative process like the one of planting a seed and then seeing how an entire tree grows out of it (Eno, 1996). This seems to imply strong hierarchical structures and a good degree of self-similarity. Our prior ethnographic and historiographic work and observations of the practice suggest that given a lyrical text (what would be literally a fado) the entire musical work might derive from it using a set of predefined conventions, shared grammars and transformations. This is a starting hypothesis for our model. The idea of a text shaping several other musical parameters is not without precedent (Nketia, 2002; Pattison, 2010). However, there are songs without words, and composers sometimes start from textless melodies (DeMain, 2004; Zak III, 2001). This might imply that, in the compositional process, the semantic content of a text could be less relevant than its structure. As such, one could work with only some structural information, namely the number of stanzas, lines and syllables. Given this seminal information and correctly defined rules and transformations, one would be able to obtain, simply by following those rules, a suitable musical work complying with the genre modelled.

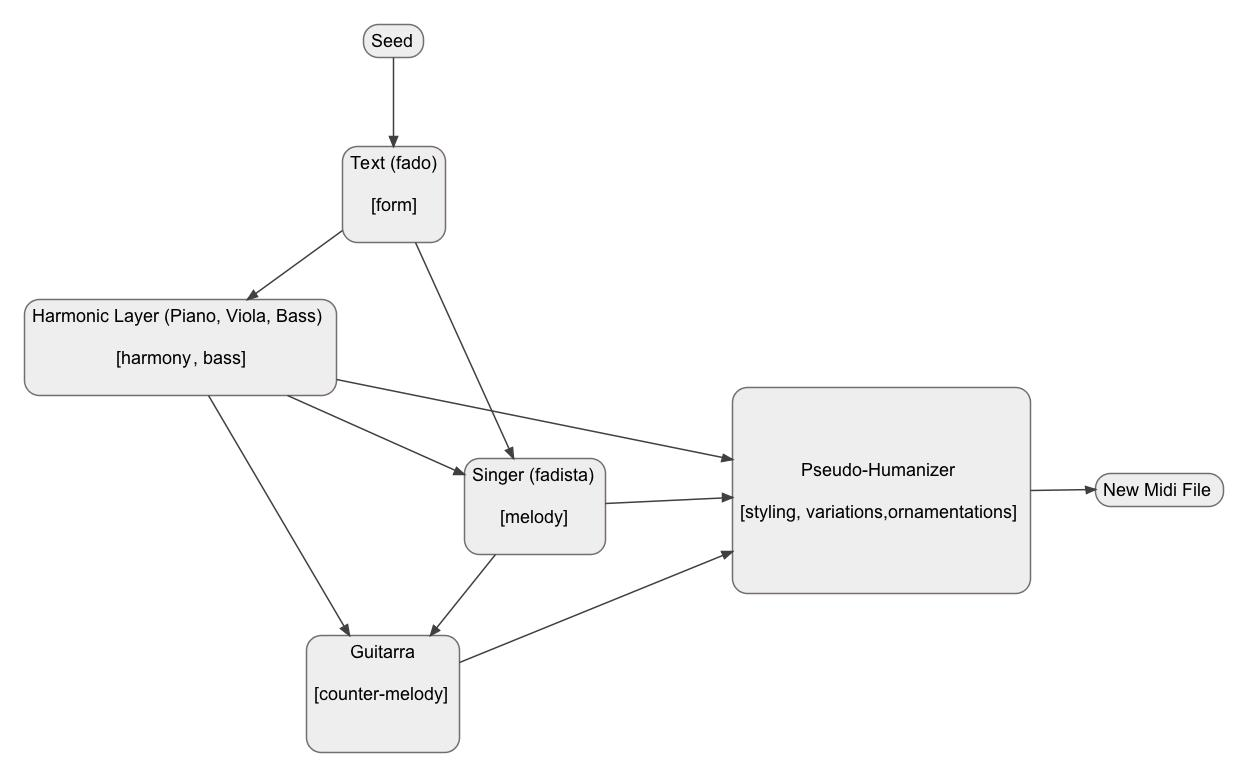

The model (Fig. 3) assumes the sequential order in which fados are typically performed, and simulates the various agents and decision processes involved. The point of departure is a text (a fado, in the form of a poem). Fado narratives are usually styled and improvised on top of stock musical structures. At this stage, we are not working with actual lyrics or textual content, although that would be ideal in future endeavours. Therefore, the first module only pretends that there is a text and, based on a seed, creates a list of symbols in numeric format. Those numbers represent the relevant information an original text would carry: namely the number of stanzas, lines per stanza and syllables per line. One could argue that in a real text all information is relevant (namely semantic content); however, fado practitioners claim that as long as the metrical structure is the same, the lyrics are easily interchangeable between fados. So, while it can still be argued that accents and vowel quality might affect the prosody (and therefore the melody to some extent), the core values that define the importance of a text, in structural terms, are the ones we are indeed retrieving/generating. This is because the number of stanzas determines the number of sections the work will have (hence, the overall form), and the number of lines determines the length of each section (number of melodic phrases per section). The number of syllables of each line determines the rhythms of the melody (it constrains the number of notes of each melodic phrase).
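The behaviour of this first module can be illustrated with a short Python sketch. It is not the Symbolic Composer implementation: the names `TextShape`, `simulate_text` and `derive_form`, the value ranges offered to the random generator, and the one-note-per-syllable simplification are all assumptions made here for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TextShape:
    """Structural information a real lyric would carry (hypothetical container)."""
    stanzas: int
    lines_per_stanza: int
    syllables_per_line: int


def simulate_text(seed,
                  stanza_range=(2, 6),
                  line_choices=(4, 5, 6, 10),
                  syllable_choices=(7, 10, 12)):
    """Pretend a text exists: derive its shape from a seed.

    The ranges are illustrative placeholders, not values taken from the corpus.
    """
    rng = random.Random(seed)  # same seed, same simulated text
    return TextShape(
        stanzas=rng.randint(*stanza_range),
        lines_per_stanza=rng.choice(line_choices),
        syllables_per_line=rng.choice(syllable_choices),
    )


def derive_form(shape):
    """Map the text shape onto the musical constraints named in the model."""
    return {
        "sections": shape.stanzas,                    # overall form
        "phrases_per_section": shape.lines_per_stanza,
        # Simplification assumed here: one note per syllable.
        "notes_per_phrase": shape.syllables_per_line,
    }


form = derive_form(simulate_text(seed=42))
```

Because the generator is seeded, the same seed always yields the same simulated text, so a generated piece can be reproduced from its seed alone.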

A second module simulates the agents involved in the instrumental harmonic accompaniment (either a piano, or, more commonly, a viola and a bass). This module retrieves information from the “text” module (the form and general structure of the sections) and generates suitable harmonic progressions and scales to fit those progressions. It also generates ostinati and bass lines based on those progressions and scales. A third module simulates the singer. This module retrieves the information from the “text” module (namely the number of syllables of each line) to generate the rhythms of the vocal melody. It combines this information with the harmonic information retrieved from the second module to assign suitable pitches, therefore simulating the creation of melodic lines on top of the previously known stock-accompaniment.
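The interaction between the "text", harmony and singer modules can be sketched as follows. This is a deliberately reduced Python illustration under stated assumptions: the chord voicings (A minor and its dominant), the one-note-per-syllable rule and the proportional mapping of syllables onto chords are hypothetical choices, and `melody_for_line` is not a function of the actual system.

```python
import random

# Hypothetical chord-tone table (MIDI note numbers) for a piece in A minor.
CHORD_TONES = {
    "tonic": [69, 72, 76],     # A4, C5, E5
    "dominant": [68, 71, 76],  # G#4, B4, E5
}


def melody_for_line(syllables, chords, rng):
    """Return (chord, pitch) pairs, one per syllable.

    Each pitch is constrained to a tone of the chord sounding at that point
    of the phrase, mimicking a singer working over a stock accompaniment.
    """
    notes = []
    for i in range(syllables):
        # Spread the syllables proportionally over the chord sequence.
        chord = chords[i * len(chords) // syllables]
        notes.append((chord, rng.choice(CHORD_TONES[chord])))
    return notes


rng = random.Random(7)
line = melody_for_line(7, ["tonic", "dominant"], rng)
```

For a seven-syllable line over two chords, the first four notes are drawn from the tonic chord and the last three from the dominant. A fuller sketch would also admit passing tones from the scale and derive durations from the prosody.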

A fourth module simulates the guitarra. This module retrieves information from all previous modules and is then able to generate suitable counter-melodies or melodic figurations that fit the harmonic progressions and, at the same time, simulate a reaction to the melodies generated by the singer.

All four of these modules generate what we would call an “Urtext” score, similar to the ones in the corpus. This is a quantised ideal to be performed. This performance implies the repetition of sections and styling. A MIDI file exported at this stage could be printed as a music score and representation of the output from the first four modules.

A fifth module, a pseudo-humaniser, consists of a series of functions that will transform some of the generated parameters to produce variants. The transformations, when applied to the melodies, will simulate the styling of fadistas, slightly changing rhythms and pitches and adding ornamentation. When applied to the accompaniments they might produce pauses, as well as small rhythmic and melodic variations. This will simulate a real performance practice, guaranteeing that virtually any repetition of a section will sound slightly different each time. A MIDI file exported at this stage reflects the performed fado: it is not suitable to be printed as a score, but it is well suited to be imported into a digital audio workstation and recorded with virtual instruments.
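A minimal Python sketch of such a humanising pass is given below. It covers only timing shifts, velocity changes and occasional pauses; pitch variation and ornamentation are left out. The `Note` type, the function name `humanise` and the default amounts are assumptions made for illustration, not the functions used in the system.

```python
import random
from typing import NamedTuple


class Note(NamedTuple):
    onset: float     # position in beats
    duration: float  # length in beats
    pitch: int       # MIDI note number
    velocity: int    # MIDI velocity, 1-127


def humanise(notes, rng, max_shift=0.05, max_velocity_change=10,
             rest_probability=0.0):
    """Return a slightly varied copy of a quantised note list.

    All amounts are illustrative defaults, not values derived from the corpus.
    """
    varied = []
    for note in notes:
        if rng.random() < rest_probability:
            continue  # drop the note, leaving a pause in its place
        onset = max(0.0, note.onset + rng.uniform(-max_shift, max_shift))
        velocity = note.velocity + rng.randint(-max_velocity_change,
                                               max_velocity_change)
        velocity = min(127, max(1, velocity))  # stay inside the MIDI range
        varied.append(note._replace(onset=onset, velocity=velocity))
    return varied


rng = random.Random(3)
section = [Note(float(i), 1.0, 60 + i, 80) for i in range(4)]
first_pass = humanise(section, rng)
second_pass = humanise(section, rng)  # a repetition of the same section
```

Calling `humanise` once per repetition with the same random generator draws fresh deviations each time, which is what makes repeated sections sound slightly different.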

A sixth module is envisaged as an extension of the fifth module, to be implemented at a later stage. It will involve micro-transformations to enhance the humaniser results, namely by controlling “groove” and syncopation in a non-random fashion, and also by controlling pitch bend messages in order to generate portamentos, glides, slides and more convincing tremolos and mordents.

5 Methodology

The observation of the performance practice, the processes it encompasses, and the model obtained determine the methodology to be followed. Since the problem of song composition, in general, and of the musics and vocal sounds of fado, in particular, has been modelled as a hierarchical linear generative process, a symbolic artificial intelligence is the logical choice. Each step of the process involves either the generation of symbolic material, its retrieval from another source (a table, a list), or its transformation via a set of rules. The process is a chain of choices or orders, each one having boundaries and a probability of happening or not. Therefore, it is mainly a consecutive series of constraint-satisfaction problems.

An inspirational figure in this area, David Cope (1991; 1996; 2000; 2002; 2004; 2005; 2008), uses a borderline approach based on grammars and transition networks; however, he also relies on direct feeding from corpora for style imitation. The strength of his work lies in the actual results, since most of the works displayed seem well-formed and structurally coherent. They could be the manual labour of any aspiring composition student imitating the style of past masters. Although Cope’s arguments and descriptions are clear, the actual computational processes seem obscure and his algorithms are never made explicit enough for replication. On the other hand, the more recent approach of François Pachet, Pierre Roy and Fiammetta Ghedini, the Flow Machines project (Pachet & Roy, 2014; Pachet et al., 2013), seems promising and more of an actual source of reliable inspiration. Still, their integration with lyrics is not yet done and one is not able to discern how the text influences or shapes the music. Also, their examples of jazz lead-sheets lack the depth of a fully developed song in terms of form and variation. Moreover, their content sometimes resembles just a collage in which the individual components lack the semantic context from which they were originally derived, so that the end result loses coherence. The work of Jukka Toivanen, Hannu Toivonen and Alessandro Valitutti (Toivanen et al., 2013), however, seems a good example of what is pursued here: they aim at automatic composition of lyrical songs, and create a module that actually generates text, and this text directly influences the musical content. This approach, theoretically, makes perfect sense. The mapping of the textual parameters onto the musical ones seems consistent with the actual processes and practices observed. 
They also imply that the semantic content of the text should affect the mood of the music (according to some arbitrary Western-European conventions), by mapping it to tonality, which also seems logical within many genres. However, their program also lacks the formal coherence that would be expected in a typical song. There is neither a clear phrase structure nor a complete form defined over time (regarding repetition and variations, the songs just seem run-on), nor is there a performative layer: the program merely generates quantised MIDI scores.

These detailed examples, two of them still in progress, act as direct inspirations for the model, and all of them rely on hybrid symbolic approaches mixing several techniques to solve the constrained choices along the way, or to generate specific sets of material. Therefore, it has been decided to pursue the same path, learning from their research and trying to integrate in the model the best ideas one could find among them, while discarding, changing or improving the ones thought not to be adequate. Hence, a hybrid constraint-satisfaction method has been applied in order to build the main structures of the desired instrumental music.

6 Implementation of the Model

Taking into account prior projects of the experts and references in the area (Cope, 2004, 2008; Nierhaus, 2009), it was decided to implement the model using a Lisp-based language. We started practising and implementing some algorithms in Common Music, an open source software developed by Heinrich Taube.(8) However, after some incipient, yet important, developments we soon reached the conclusion that Common Music was not powerful enough for the desired purposes because it lacked predefined functions ready to deal with the implementation of a model of tonal music. It would have taken several months to create those functions from scratch. That work, however, had already been done by Tonality Systems in their software Symbolic Composer,(9) which is also Lisp-based but comes with numerous predefined musical functions. These would allow us to save time and resources, concentrate on the concrete problem and work in higher-level detail, thus greatly enhancing the final result. According to the Tonality Systems website:

Symbolic Composer uses two grammars to define the section and instrumentation structures: The section grammar and the instrumentation grammar. Both are tree-like structures with as many elements and levels as needed. Each node contains definitions of class properties. Class properties are inherited with the inheritance trees of the section and instrumentation grammars …. Inheritation mechanism allows to define common properties among instruments and sections.… A section consist of any number of instruments. Each instrument is defined by inherited musical properties called classes. Classes are: symbol, length, tonality, zone, velocity, channel, program, controller, tempo, duration, tempo-zones and groove. Obligatory classes are symbol, length, tonality, zone and velocity. The other classes are optional. Classes can be cross-converted to other classes, or cloned between instruments and sections, and global operations can be distributed over sections. Section has default class properties, which are used if they are not specified for instruments.

During the conception of the model, we constantly struggled to reconcile the emulation of the performance practice with the practice reflected in the representative corpus of musical scores. The initial idea was to somehow build an artificial intelligence that mimicked and represented this corpus in the generation of new scores similar to the existing ones. In order to do that, we built a program that mainly recombined weighted lists containing fragments and specific parameter values extracted from that same corpus with little to no variation. It was a simple approach that gave us controlled and predictable results lying within the desired scope, but that lacked inventiveness, the ability to go further. Moreover, it neither expressed algorithmically any particular new idea regarding the methods of composing with computers, nor was it challenging to code with regard to the current state of the art. Consequently, after the first round of initial programming we decided to explore a second approach and build a second artificial intelligence that, instead of just using lists with weights and data derived from the corpus, actually generates lists from scratch using functions. This second approach was much more challenging, because we actually had to try to formalise the sub-processes of musical composition. The result was a series of abstractions able to generate a whole series of different parameters that were later combined with one another and integrated and nested in the previously designed architecture. Due to the very nature of what an abstraction is, and how a function works, this approach allows users either to be very strict and confined, thus obtaining predictable results, or, if they decide to use arguments outside the expected range, to obtain totally unexpected and unpredictable ones. 
Furthermore, it can be even more unpredictable if one decides to let a randomiser pick what the possible range is and what parameters it may randomise. The point is that, using this approach, we have obtained a very flexible artificial intelligence and anyone can decide how much further one wants to go exploring it.

Each module comprises a series of constraint-satisfaction problems to be solved. Having arrived at this point, we decided that the best solution would be a combination of both approaches. Each problem can be solved either by resorting to a symbolic artificial intelligence based on the statistical data retrieved from the corpus (thus the weighted lists and the fragments) or via a second symbolic artificial intelligence working with formalisations of the sub-processes involved (translated into grammars, rule-based algorithms or other iterative procedures). The combination of both approaches is more flexible and less predictable with respect to the results users may have in mind, and it increases the possibilities for interactivity (by allowing them to customise and manipulate more parameters). In order for this to work in a manageable way, we have designed a coefficient of representativeness (CR) that varies between 0 and 100, 100 being total representativeness of the corpus and 0 total inventiveness. So, when the user sets this coefficient to 100, only the artificial intelligence dealing with the corpus generates results. When it is 0, all the parameters are retrieved from the lists generated by the second artificial intelligence. When it is any value in between, this value is the probability, in percent, that each calculated parameter is picked from the corpus-based intelligence rather than from the second one. Ideally, the default should be something along the lines of 70 if one wants a general, predictable score with a spice of novelty, but it can obviously be changed if the user is willing to take some risks and prefers a more out-of-bounds musical piece.
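The role of the coefficient can be illustrated with a minimal sketch, written in Python rather than in Symbolic Composer. The names `pick_source` and `solve_parameter`, and the two toy solvers, are hypothetical and only show the selection logic described above, not the generator's actual code.

```python
import random

def pick_source(cr, rng):
    """Return 'corpus' with probability cr/100, otherwise 'generative'."""
    if not 0 <= cr <= 100:
        raise ValueError("CR must lie between 0 and 100")
    # rng.random() lies in [0.0, 1.0), so cr=100 always yields 'corpus'
    # and cr=0 always yields 'generative'.
    return "corpus" if rng.random() * 100 < cr else "generative"

def solve_parameter(cr, from_corpus, from_rules, rng):
    """Solve one parameter with whichever solver the coefficient selects."""
    if pick_source(cr, rng) == "corpus":
        return from_corpus(rng)
    return from_rules(rng)

rng = random.Random(2024)
# Hypothetical solvers for one parameter (a simplified form).
corpus_form = lambda r: r.choice(["AABB", "AABC", "ABAB"])
rule_form = lambda r: "".join(r.choice("ABCD") for _ in range(4))
print([solve_parameter(70, corpus_form, rule_form, rng) for _ in range(5)])
```

Because the draw is repeated for every parameter, a value such as 70 mixes both sources within a single piece instead of choosing one source for the whole score.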

7 First Module

The first module of the model corresponds to the triggering of a seed that will cause the information retrieval of a simulated lyrical text, in order to constrain subsequent choices and structures.

7.1 Seed

A generative program like the one coded relies heavily on random functions and constrained decisions that depend on seeds to run. There is no true randomness inside the chaotic and random functions, as they all depend on a seed to perform their calculations. Along with this issue comes the fact that, if one wants each and any instance of the program to be reproducible, then they must all be attached to the same seed. Therefore, we have decided to program right at the beginning of the generator a function that generates a variable called “my-random-seed”. Every time the program is called, it will generate a different result. But, at the same time, if the user likes any of the outcomes and wants to replicate it, then they are able to do so by choosing exactly the same seed. So, in other words, each seed represents an instance of a given score, and it is like its own ID number. And the user has total control over it:

    (setq my-random-seed
          (* (float (/ (get-universal-time) 1000000000000)) (rnd-in 100)))

    (init-rnd my-random-seed)

The block of code shown uses the universal clock of the computer to generate a seed. And it has a resolution up to the milliseconds. So virtually every time the program is run it will generate a different score, because the time will always be different. If one likes it, one just has to keep track of the inspector window and take note of the number that will appear there and one can keep that seed for later use. The entire score depends on this seed in order to work properly and it is the initial trigger to derive everything that follows.
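The same idea, one seed identifying one reproducible instance, can be sketched outside Symbolic Composer. The Python below is only an illustration under that assumption: `make_seed` and `generate_contour` are hypothetical names, and `generate_contour` stands in for the whole generator.

```python
import random
import time

def make_seed(user_seed=None):
    """Return the user's seed if given, otherwise derive one from the clock."""
    if user_seed is not None:
        return user_seed
    return time.time_ns()  # differs from run to run

def generate_contour(seed, length=8):
    """Stand-in for the generator: every random choice derives from one seed."""
    rng = random.Random(seed)
    return [rng.randint(-3, 3) for _ in range(length)]

seed = make_seed()
print("seed to note down:", seed)
print(generate_contour(seed))
# Calling the generator again with the noted seed reproduces the same instance.
assert generate_contour(seed) == generate_contour(seed)
```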

7.2 Text

The text is in fact just a simulation of what would be a complete lyrical text and the relevant numerical information about its structure. It corresponds to three parameters: number of stanzas, lines per stanza and syllables per line. These three parameters are positive integers and are stored in variables. They can be obtained through a constrained random generation or they can be defined by the user.

Those numbers, in theory, can be whatever the user envisions, but in the actual practice they are constrained among specific values. The number of stanzas is usually a small number between two and six, four being the most common by far; the number of lines usually also varies between three and ten, four being the most common and five and six being rather common as well, especially since the beginning of the twentieth century; the number of syllables per line varies between four and twelve, seven being the most common (Gouveia, 2010). Given the strophic nature of most fados, in our model these numbers are fixed for the entire composition.

For the purpose of demonstration, the version presented in this article assumes four stanzas with four lines and seven syllables, but all other values can be implemented with some future refinement.
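A constrained random generation of these three parameters could look like the following Python sketch. The ranges come from the description above; the weights are illustrative assumptions that merely favour the values reported as most common, and the function names are hypothetical.

```python
import random

# Ranges as described in the text; the weights are assumptions, not corpus data.
STANZAS = {2: 1, 3: 1, 4: 6, 5: 1, 6: 1}
LINES = {3: 1, 4: 6, 5: 3, 6: 3, 7: 1, 8: 1, 9: 1, 10: 1}
SYLLABLES = {4: 1, 5: 1, 6: 1, 7: 6, 8: 1, 9: 1, 10: 1, 11: 1, 12: 1}

def weighted_pick(table, rng):
    """Pick one key of the table with probability proportional to its weight."""
    values = list(table)
    return rng.choices(values, weights=[table[v] for v in values], k=1)[0]

def simulate_text(rng, stanzas=None, lines=None, syllables=None):
    """Return the structural description of a text; user values take precedence."""
    return {
        "stanzas": stanzas if stanzas is not None else weighted_pick(STANZAS, rng),
        "lines": lines if lines is not None else weighted_pick(LINES, rng),
        "syllables": syllables if syllables is not None else weighted_pick(SYLLABLES, rng),
    }

print(simulate_text(random.Random(1)))
# The demonstration case used in this article:
print(simulate_text(random.Random(1), stanzas=4, lines=4, syllables=7))
```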

7.3 Form

The overall form will impose a hierarchical superstructure containing and constraining everything else that follows. The form of a fado, in particular, and of any song in general, is decided by the lyrical content: namely, the number of stanzas and lines. At this stage we are not dealing with lyrical content in concrete; however, one can deal with its consequences in an abstract way by following its trends. A text with four quatrains in heptasyllabic lines, for instance, can be extrapolated into how one would typically sing such a text. Following Ernesto Vieira’s description of fado, one would sing the first line over a two bar antecedent melodic phrase (a1), and the second line over a two bar consequent melodic phrase (a2). These two lines of text would represent a typical A section, four bars long, on top of a harmonic progression. This A section could be immediately repeated (representing the textual repetition of the same two lines) or it could be followed by a contrasting B section representing the following two lines of the quatrain. So, departing from this simple quatrain one could end up with several possible combinations – AB, AAB, AABB, ABB, ABAB. At this point, and knowing that there are still three quatrains to sing, several options arise: either they would be exact replications of the first one, and, therefore, a typical Ernesto Vieira’s strophic fado: ABAB ABAB ABAB ABAB or AABB AABB AABB AABB, for instance. Alternately, it could be assumed that the first two quatrains were one fado in the minor mode and the last two quatrains could have a different music in the major mode, and so they would represent a doubled version of the same model, and it would be something like ABAB ABAB CDCD CDCD or AABB AABB CCDD CCDD.

Having this in mind, one then realises that even if the concrete text is not known (its semantic content), one can try to model the form of the music based on its structure (number of stanzas, lines per stanza and syllables per line). The simplest way to formalise this is to simply define a list containing the distribution of the sections, each section represented by an alphabetic character. Going one step further, we assume for the model different versions of each section to represent slightly different performances of the same section whenever they are repeated. A different version is obtained by applying transformations to some parameters in order to obtain what can be called variations. The concrete implementation of these variations will be discussed later. This increases variety and models what often happens in performance. We append an integer to each variation to identify them. There is no clear boundary on how many variations one could have. In theory, each instance of any given section is sung uniquely, therefore it could be a variation, resulting in at least as many variations as the total numbers of stanzas. Therefore, the resulting variables assume the form:

\begin{equation*} \text{Section}=Xn, X \in \{ A,B,C,D \dots \}, n \in \mathbb{N}_0 \end{equation*}

A form list is then the chronologically ordered sequence of these variables, reflecting the idiosyncrasies of the lyrical text, completed with the uniquely titled sections “introduction” (Intro), optional “intermezzos” and “coda”. Our example of the four heptasyllabic quatrains following the simplest Ernesto Vieira’s model, without redundancy, would result in the list:

\begin{equation*} F=\langle \text{Intro}, A0, B0, A1, B1, A2, B2, A3, B3, \text{coda} \rangle \end{equation*}

In practice such a list might be doubled, since in many fados the singers repeat the lines. This list represents the superstructure of the piece, its overall form. It is, in fact, the formalisation of the time dimension in a linear fashion.

There are at least three ways of generating such a list. The first one is to let it literally be defined by the user. In a fully developed application this could be a decision mediated by a graphic user interface, in order to simplify the process, based on the structure of the text. The user would just need to provide the number of stanzas, the number of lines and the number of syllables, at their will.

The second one is using the retrieved simplified forms from the corpus and building a weighted list of all reasonable structures. There are 53 different simplified forms in the corpus, and a table with the most common of them is presented below. These are the simplified forms that appear at least three times.

| Simplified form | Occurrences |
|---|---|
| AABB | 23 |
| AABC | 7 |
| ABAC DEDE | 5 |
| AABB CCDD | 4 |
| ABAB | 4 |
| ABCC | 3 |
| ABCD | 3 |

These simplified forms can be implemented simply by letting the computer randomly pick one according to its weight, and then transforming it in order to obtain a full well-constructed form. The first transformation is the decision of how many times the chosen simplified form is to be repeated, which can be done by appending the list to itself the desired number of times (usually no more than four if one does not want an endless song): $T_1(F) \rightarrow F \cup F \cup F \dotsc$

The second transformation is to concatenate each symbol, of the resulting list, with an integer corresponding to its variation. This integer is randomly picked from another list. For the purpose of keeping the program within a reasonable degree of simplicity, we are generating only three versions of each section which is more than enough for demonstrative purposes:

\begin{equation*} T_2(F) \rightarrow \forall X \in F, X \Rightarrow Xn: n \in \{ 0,1,2 \}. \end{equation*}

However, technically one could have as many variations as there are unique elements in the list to be transformed, so the general formula would be:

\begin{equation*} T_2(F) \rightarrow \forall X \in F, X \Rightarrow Xn: n \in \{ n \in \mathbb{N}_0 \mid 0 \leq n \leq i : i = \# \langle \forall j, k \in F : j \neq k \rangle \}. \end{equation*}

The third transformation consists in appending the Intro section to the beginning of the list, and the coda to the end, which is just a trivial list manipulation operation:

\begin{equation*} T_3(F) \rightarrow \langle \text{Intro} \rangle \cup F \cup \langle \text{coda} \rangle \end{equation*}

The result could look something like this:

\begin{equation*} F =\langle \text{Intro},A2,A1,B1,B1,A0,A2,B1,B0,A0,A1,B1,B2,A0,A0,B2,B0,\text{coda} \rangle \end{equation*}

Notice that the variation indexes are random and do not follow any ordered criterion. The point is that there is no hierarchy among variations, since they are all equally valid versions of a given material; the users are therefore oblivious to which one is the presumed “original” and which ones are the variations.
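The corpus-based route, a weighted pick followed by $T_1$, $T_2$ and $T_3$, can be sketched as follows. This is an illustrative Python rendering, not the Symbolic Composer code of the generator; the function names are hypothetical, and the weights are the occurrence counts from the table of simplified forms.

```python
import random

# Occurrence counts of the most common simplified forms in the corpus.
CORPUS_FORMS = {
    "AABB": 23, "AABC": 7, "ABAC DEDE": 5, "AABB CCDD": 4,
    "ABAB": 4, "ABCC": 3, "ABCD": 3,
}

def pick_simplified_form(rng):
    """Weighted random pick; returns the form as a list of section letters."""
    forms = list(CORPUS_FORMS)
    chosen = rng.choices(forms, weights=[CORPUS_FORMS[f] for f in forms], k=1)[0]
    return [letter for letter in chosen if letter != " "]

def t1_repeat(form, times):
    """T1: append the list to itself the desired number of times."""
    return form * times

def t2_variations(form, rng, n_variations=3):
    """T2: attach a randomly picked variation index to every section."""
    return [f"{section}{rng.randrange(n_variations)}" for section in form]

def t3_frame(form):
    """T3: add the Intro at the beginning and the coda at the end."""
    return ["Intro"] + form + ["coda"]

def build_form(rng, repeats=4):
    simplified = pick_simplified_form(rng)
    return t3_frame(t2_variations(t1_repeat(simplified, repeats), rng))

print(build_form(random.Random(7)))
```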

The third way to generate a well-constructed form is formalising an abstraction that randomly generates a simplified form. A well-constructed simplified form can be implemented as:

1. Define the total number of sections as $i$, which is a positive integer: $i \in \mathbb{N}$
2. Create the empty list $F$ and the empty set $S$: $F = \langle \rangle ; S = \{ \}$
3. Define $p$ as the number of unique elements of $F$ plus 1: $p = 1 + \# \langle \forall j, k \in F : j \neq k \rangle$
4. Append the alphabetic character in position $p$ to set $S$: $S = S \cup \{ X_p \} : X \in \{ A, B, C, D,\dotsc \}$
5. Append a randomly picked element from $S$ to $F$: $F = F \cup \langle s_n \rangle : s \in S$
6. Repeat steps 3 to 5 $i$ times

This will generate a simplified form that can be subsequently transformed into a well-constructed form using the same procedures exemplified in the simplified form derived from the corpus.
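The procedure above can be sketched in a few lines of Python. The function name is hypothetical, and the set $S$ is kept as an ordered list only so that a given seed always produces the same picks.

```python
import random
import string

def random_simplified_form(i, rng):
    """Generate a simplified form with i sections following the listed steps."""
    if not 1 <= i <= 26:
        raise ValueError("i must be a positive integer no larger than the alphabet")
    form = []        # the list F
    candidates = []  # the set S
    for _ in range(i):
        p = 1 + len(set(form))                  # unique elements of F, plus 1
        letter = string.ascii_uppercase[p - 1]  # character in position p
        if letter not in candidates:            # set union: no duplicates
            candidates.append(letter)
        form.append(rng.choice(candidates))     # random element of S appended to F
    return form

print("".join(random_simplified_form(4, random.Random(3))))
```

A consequence of the construction is that a new letter only becomes available once all the previous ones have been used, so the first section is always A and new sections are introduced in alphabetical order.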

In the generator we implemented both solutions and one or the other is triggered, depending on the coefficient of representativeness.

7.4 Section

Each section can be seen as a hierarchical class that has one relevant time-domain property: length. This length can be one single value, but often it is a set of them, each one corresponding to a bar unit, mirroring the typical divisions of notated music. Therefore, its implementation takes the form of a list of lengths:

\begin{equation*} \forall \, \# \text{Section}_{Xn} = \langle \text{bar unit}_1 , \text{bar unit}_2 , \text{bar unit}_3 , \dotsc \rangle \end{equation*}

This definition formalises the whole form of the song, encompassing its total time span and constraining all the other time-dependent elements hierarchically below this level. The values these lengths assume depend, of course, on the song and style to be modelled, and can be fixed or variable. They can be randomly generated or defined by the user. Their implementation depends largely on the problem to be solved. In the specific case of fado, and following the empirical data retrieved, it is assumed that each section is four bars long, each bar with a length of 2/4, and this has been implemented as such:

\begin{equation*} \forall \, \# \text{Section}_{Xn} = \langle \frac{2}{4} , \frac{2}{4} , \frac{2}{4} , \frac{2}{4} \rangle \end{equation*}

Modifying these values could radically change the final results and the entire perception of the practice.

7.5 Example of a Single Section

At this point, and while we may be getting ahead of the formalisation of the other structural elements, we must stress that in terms of organisation of the narrative it is important to explain how the hierarchical structures were actually implemented in code within Symbolic Composer. While the formalisation of the model allows it to be implemented in other languages and probably using other kinds of nesting, the software Symbolic Composer that we have used to code the generator has its own architecture of predefined objects and classes, simplifying some of the low-level operations. After the overall form is decided and one has all the sections needed, it is possible to build a modular frame based on this superstructure that can be used as a template for any other different superstructure in the future. We assumed each section as a basic module to support all other parameters inside.

Therefore, we will exemplify how we have made a template defining one single section representing four bars of a dummy song and how it can be similar for all subsequent sections and variants.

Inside this template section we will explain the global parameters that will apply to all instruments in this section. They are called “default” parameters and in this case the parameters are “zone” and “tempo”.

“Zone” is defined as the total length of the section. In this example, we are assigning four 2/4 bars for the A section, but it can be any length one wishes, depending on whatever genre one is modelling.

“Tempo” sets how many beats per minute the song will be played at. One can decide to go for a strict tempo, or let the computer decide it randomly within a certain range acceptable for that section. “Tempo” is a list of values, each value corresponding to a zone. If one has a list with four zones and a list with four different tempos, the computer will use a different tempo for each zone, but one can assign just one tempo and it will be used for all four zones.

Then we need to define the instruments. In this example we initially just used the right hand of a piano, which we have called “voice” (it creates the melody) and left hand that we have simply called “ostinato” (it creates the accompaniment), very much like the scores from the corpus. One can create as many instruments as one desires.

Inside each of these instruments we have defined our local parameters; this means the parameters that only affect a particular instrument or voice.

“Tonality” stands for the scales and chords that were used in this section. In this example we have decided to base everything in scales and chords without accidentals and then use a variable as a transposition factor to contemplate the other possibilities.

“Length” is the rhythm of each voice, and is basically a list of durations. Ideally the sum of these durations should match the length of the previously defined zone. If it is shorter, the rhythm will loop until that value is exhausted; if it is longer, it is truncated.

“Symbol” is a list of alphabetic characters and accidental symbols that will be associated both with the chords and scales defined in tonality (and thus becoming pitches) and with the durations defined in length, becoming notes. As with previous parameters the number of elements in the pitch list should match the number of elements in the length list for ideal correspondence. In case of a mismatch, the shorter list will be repeated and mapped onto the other until it exhausts all of its elements.

“Velocity” is a list of velocities, ranging from 0 to 127, associated with each note generated by the previous parameters. In order to obtain maximum correspondence and efficiency, the number of elements of this list should also match the number of elements both in symbol and length.

“Program” is the timbre of the instrument. Usually it is a value between 0–127 that corresponds to a list of the available sounds according to the synthesisers available. In case of a generic MIDI set, the value 0 stands for a piano.

“Channel” is the MIDI channel associated with that particular instrument, ranging from 1 to 16, and number 10 is typically reserved for percussion.
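The looping and truncation rules described for “length” and “symbol” can be made concrete with a small Python sketch. It only mimics the behaviour as described in the text and is not Symbolic Composer's implementation: the function names are hypothetical, and the assumption that rests do not consume a symbol is ours. The two-bar pattern used as input is the ostinato rhythm given later in this section.

```python
from fractions import Fraction
from itertools import cycle

def fit_to_zone(lengths, zone):
    """Loop a duration list until the zone is filled, truncating any overshoot.

    Negative durations are rests; their absolute value counts towards the total.
    """
    if not lengths or any(d == 0 for d in lengths):
        raise ValueError("lengths must be a non-empty list of non-zero durations")
    total = sum(zone)
    fitted, elapsed = [], Fraction(0)
    for d in cycle(lengths):
        if elapsed >= total:
            break
        step = min(abs(d), total - elapsed)  # cut the last value if it overshoots
        fitted.append(step if d > 0 else -step)
        elapsed += step
    return fitted

def pair_symbols(symbols, lengths):
    """Cycle the symbols over the sounding durations (assumption: rests take none)."""
    pool = cycle(symbols)
    return [(next(pool), d) if d > 0 else (None, d) for d in lengths]

zone = [Fraction(2, 4)] * 4
ostinato = [Fraction(1, 8)] * 5 + [Fraction(1, 16)] * 2 + [Fraction(1, 8)] * 2
fitted = fit_to_zone(ostinato, zone)
print(len(fitted), sum(abs(d) for d in fitted))
```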

So a template in Symbolic Composer, like a blank canvas for generating one section, is something that is coded like this:

    ;;section A
    (def-section A
      default
        zone
        tempo
      voice
        tonality
        length
        symbol
        velocity
        program
        channel
      ostinato
        tonality
        length
        symbol
        velocity
        program
        channel
    )

This template was the same for any section or sub-section we ever needed to compose. We found it very reasonable, because it employs pretty much the same parameters one would use to compose with pen and paper or with a notation program.

After this stage, one just needs to know exactly what is desired inside each section and either provide or generate the material for every possible parameter listed. We can fill in the values for an archetypical basic section A.

Inside section A, we were trying to generate our first four bars of music, meaning we were in fact combining sub-sections a1 and a2 regarding some of the parameters. Therefore the zone has to be a list that adds up to four bars of 2/4. Notice it can be any length one wants, so it can be easily adapted to any other genre or variation one may want in the future. Notice also how a list is coded simply as a parenthesised set of values preceded by an apostrophe (').

    '(2/4 2/4 2/4 2/4)

Then, there were many options for tempo. For the sake of simplicity we could have simply specified a single value. Another option was to have an entire interval available, in which case the best way was to tell the computer to generate a random integer and add a value to it. Because tempo needs to be a list of values (even when it has only one value) we needed to tell the computer to make it a list.

    (list (+ (rnd-in 12) 72))

Then we started to deal with the instrument “voice”.

For the sake of simplicity, this example assumes a tonal melody that is going to be built on top of an archetypical T|D|D|T harmonic progression. The melody will be built on top of a major scale. To be in a range compatible with a voice it is best to centre it around octave 5 (in Symbolic Composer the first symbol of octave 5 is one octave above middle C, which is MIDI pitch 60). So we have assigned:

    (activate-tonality (0 major c 5))

Note that it can be any tonality or tonalities one wants in any range desired. Even detuned tonalities are possible. One can explore this further at will, or change the score later to any other scale and hear the differences.

The next step is to define the rhythm of the melody. There are at least two approaches. The first is to assign a list of specific durations, either totally determined or picked from a set of different choices. The second is to have a generative function that, based on algorithms, creates a list of suitable durations from scratch. For this example we will just assign an archetypical fado rhythm; other options will be explored further ahead:

    '(1/8 1/8 1/16 1/8 1/16 1/8 1/8 -1/8 1/8 1/8 1/8
      1/16 1/8 1/16 1/8 1/8 -1/8 1/8)

Notice how the durations defined fit four entire measures, which means the same two-bar scheme repeated twice. Notice also the negative values, which represent pauses. Finally, notice that, given the way the structure was built, a typical pick-up note is missing because it pertains to a previous section (an introduction, for instance), and that the last notes following the pause are actually pick-up notes for the following sections.

The following step was to generate the pitches of the melody, so symbols were needed. Each symbol is basically a letter representing each element of the scale previously activated in an ordinal position, relative to the tonic defined. In this case, the scale was C major starting in the octave 5. As such, the first symbol “a” represents the first element of that scale, which is the pitch class C5. The second symbol “b” represents the second element of that scale, which is the pitch class D5. Negative symbols work the same way in the opposite direction: a symbol “-b” represents the pitch class B4 and a symbol “-c” represents the pitch class A4. As such one can define a melodic contour using a string of symbols that can then be imposed on any scale desired.
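The correspondence between symbols and pitches can be checked with a short Python sketch. It is an illustration of the mapping as described above, not Symbolic Composer's own routine; the function names are hypothetical, and the tonic is taken as MIDI pitch 72, one octave above middle C (MIDI 60).

```python
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of a major scale

def symbol_to_degree(symbol):
    """'a' -> 0, 'b' -> 1, ...; a leading '-' counts downwards from the tonic."""
    negative = symbol.startswith("-")
    degree = ord(symbol.lstrip("-")) - ord("a")
    return -degree if negative else degree

def degree_to_midi(degree, tonic_midi=72, steps=MAJOR_STEPS):
    """Wrap the degree into the scale, shifting by octaves as needed."""
    octave, index = divmod(degree, len(steps))
    return tonic_midi + 12 * octave + steps[index]

def symbols_to_midi(symbols, tonic_midi=72):
    return [degree_to_midi(symbol_to_degree(s), tonic_midi) for s in symbols]

# a -> C5 (72), b -> D5 (74), -b -> B4 (71), -c -> A4 (69)
print(symbols_to_midi(["a", "b", "-b", "-c"]))
```

Because the contour is stored as scale degrees, passing a different list of semitone offsets as `steps` imposes the same melodic shape on another scale.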

There are countless ways of generating a list of symbols that will make more or less sense depending on the processes one is trying to model. In this dummy example, and just to spark ideas, we have used a random-walk generation around the centre of the scale:

    (find-minimal (vector-to-symbol -d d
      (gen-noise-Brownian 4 my-random-seed 0.5)))

We essentially used a mathematical function that comes with the program, “gen-noise-Brownian” (there are many others), which generates a random walk, a singable contour, based on Brownian noise. Using another preset function, “vector-to-symbol”, we mapped this numeric contour into symbols within the range “-d” up to “d” (so around the centre of our scale, which is “a”), and used another preset function called “find-minimal” to remove all repetitions while still preserving the general shape of the curve. Running this set of nested functions once, we have obtained the following result:

    --> (a -c -b -d -c -b d c d b c a b a)

This is a perfectly singable undulating line. One can try out different values, seeds and nested combinations and see what happens. One can also try out some of the other mathematical functions that come with the program, like “gen-pink-noise”. More possibilities, and a discussion of the ones that make more sense and are actually used in the generator, are explored further ahead.
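For readers without access to Symbolic Composer, the three nested steps can be approximated in Python. This is a sketch under stated assumptions: the random walk is a plain cumulative sum of Gaussian steps, the rescaling is linear, and “removing repetitions” is read as dropping immediate repeats. None of these functions reproduces the internals of “gen-noise-Brownian”, “vector-to-symbol” or “find-minimal”, and all names are hypothetical.

```python
import random

def brownian_walk(n, rng, step=1.0):
    """Cumulative sum of Gaussian steps: a basic Brownian-style random walk."""
    position, walk = 0.0, []
    for _ in range(n):
        position += rng.gauss(0.0, step)
        walk.append(position)
    return walk

def to_degrees(values, low=-3, high=3):
    """Rescale a numeric contour linearly onto integer degrees (-3 = -d, 3 = d)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [round((low + high) / 2)] * len(values)
    return [round(low + (v - lo) * (high - low) / (hi - lo)) for v in values]

def drop_repeats(items):
    """Remove immediate repetitions while keeping the shape of the line."""
    return [x for i, x in enumerate(items) if i == 0 or x != items[i - 1]]

def degree_to_symbol(degree):
    letter = chr(ord("a") + abs(degree))
    return f"-{letter}" if degree < 0 else letter

rng = random.Random(4)
contour = drop_repeats(to_degrees(brownian_walk(16, rng)))
print([degree_to_symbol(d) for d in contour])
```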

Then we just needed to define the velocities. A velocity is a number between 0 and 127 that encodes how forcefully a MIDI note is struck, and hence how loud it sounds. For this dummy example, we have opted for another kind of randomisation, using again a Brownian-noise generator:

    (vector-round 64 100 (gen-noise-Brownian 5 0.75 0.75))

Again, there are countless ways of doing this, some making more sense than others. This is just one of many. One can play with these values and see what happens. We settled on these values after trial and error. The generated values will all lie between 64 and 100, that is, in the range of mezzo-forte. A more sophisticated algorithm for dynamics used in the generator will be explored in its own section.

Then, keeping the example simple, we assigned the default instrument piano to the voice, which is program 1. We told it to play on channel 1 as well. One can assign any number between 0–127 for instrument – 74, for instance, is a general MIDI flute.

    program 1
    channel 1

And this concludes the definition of the first four bars of melody. As one can see, it is pretty much free within the confines of some constraints: a melody with a tonal sound, within a range of about one octave around C5 and with an archetypical rhythm, will be obtained.

Then we needed to define an ostinato to accompany the melody. For the ostinato we decided exactly what we wanted. We defined the same zone so the two layers will match. The tonalities employed (since we needed the T|D|D|T movement in the major mode) were the appropriate chords, C major and G7 (the dominant seventh), complemented with the appropriate voice-leading inversions. Therefore:

    (activate-tonality (2 ch-maj c 4) (0 ch-7 g 4)
                       (0 ch-7 g 4) (2 ch-maj c 4))

We have centred the ostinato around octave 4 so it does not clash with the melody and it is in the appropriate lower register. We have provided a defined length, since it is a stock march/fox rhythmic pattern. This list represents the durations as we would write them on paper or in a notation program. Notice how we just need to write out two bars of the ostinato, even if the zone is four bars long, since it is assumed that, in cases where the list is shorter, it will loop until it exhausts the time.

    '(1/8 1/8 1/8 1/8 1/8 1/16 1/16 1/8 1/8)

The same is true also for the symbols, which in this case are all pre-determined.

    '((-12 a) bcd -b bcd -c bcd bcd -b bcd)

Notice the logic of construction around the symbols: the “-12” attached to the first symbol indicates that it is played twelve semitones below, forcing it to be a bass note, as expected in this kind of ostinato. The cluster “bcd” means that these three symbols are played as simultaneously as possible.
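How such a symbol list unfolds into notes can be sketched in Python. The sketch assumes that, inside a chord tonality, the symbols index the chord tones in the same way they index scale degrees, and it ignores the inversion argument of the tonality definition; both are simplifying assumptions, and the function names are hypothetical.

```python
def parse_symbol(token):
    """Return (semitone_offset, degrees) for one ostinato token.

    A tuple such as (-12, "a") carries a transposition in semitones; a string
    of several letters such as "bcd" is a cluster of simultaneous symbols.
    """
    offset = 0
    if isinstance(token, tuple):
        offset, token = token
    negative = token.startswith("-")
    degrees = [ord(ch) - ord("a") for ch in token.lstrip("-")]
    if negative:
        degrees = [-d for d in degrees]
    return offset, degrees

def realise(token, chord_tones, root_midi):
    """Turn one token into the list of MIDI pitches sounding together."""
    offset, degrees = parse_symbol(token)
    notes = []
    for degree in degrees:
        octave, index = divmod(degree, len(chord_tones))
        notes.append(root_midi + 12 * octave + chord_tones[index] + offset)
    return notes

C_MAJOR_TRIAD = [0, 4, 7]  # root position; inversions are not modelled here
OSTINATO = [(-12, "a"), "bcd", "-b", "bcd", "-c", "bcd", "bcd", "-b", "bcd"]
events = [realise(token, C_MAJOR_TRIAD, 60) for token in OSTINATO]
print(events)
```

Under these assumptions the first event is a single bass C one octave below the root, and each “bcd” cluster is a three-note chord above it.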

We kept the same solution for the velocities as we did in the melody: let them be somewhat random:

    (vector-round 64 100 (gen-noise-Brownian 5 0.75 0.75))

For the program we kept the piano, but we wanted this hand to be played on another channel. So we assigned it to channel 2.

    program 1
    channel 2

At this point the coding of a four-bar-long section A is finished. It looks like this:

    (def-section A
      default
        zone     '(2/4 2/4 2/4 2/4)
        tempo    (list (+ (rnd-in 12) 72))
      voice
        tonality (activate-tonality (0 major c 5))
        length   '(1/8 1/8 1/16 1/8 1/16 1/8 1/8 -1/8 1/8 1/8
                   1/8 1/16 1/8 1/16 1/8 1/8 -1/8 1/8)
        symbol   (find-minimal (vector-to-symbol -d d
                   (gen-noise-Brownian 4 my-random-seed 0.5)))
        velocity (vector-round 64 100
                   (gen-noise-Brownian 5 0.75 0.75))
        program  1
        channel  1
      ostinato
        tonality (activate-tonality (2 ch-maj c 4) (0 ch-7 g 4)
                   (0 ch-7 g 4) (2 ch-maj c 4))
        length   '(1/8 1/8 1/8 1/8 1/8 1/16 1/16 1/8 1/8)
        symbol   '((-12 a) bcd -b bcd -c bcd bcd -b bcd)
        velocity (vector-round 64 100
                   (gen-noise-Brownian 5 0.75 0.75))
        program  1
        channel  2
    )

As one can easily understand, if we wanted to proceed and define a section B, all we had to do was to copy and paste the same template and change the desired parameters, repeating the process for as many sections as were needed to complete the piece.

An efficient way to automate this process is to consider this example as an archetype and to define an abstraction that generates as many sections desired, from scratch, just changing the relevant parameters.
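Such an abstraction can be pictured, outside Symbolic Composer, as a function that copies an archetype and overrides only what differs. The Python below is a hypothetical sketch of that idea: the dictionary layout, the placeholder values and the function names are illustrative assumptions, not the generator's data structures.

```python
import copy

# Archetypal section: placeholder values standing in for the real parameters.
ARCHETYPE = {
    "default": {"zone": ["2/4"] * 4, "tempo": [72]},
    "voice": {"tonality": "major", "program": 1, "channel": 1},
    "ostinato": {"tonality": "chords", "program": 1, "channel": 2},
}

def make_section(name, overrides=None):
    """Copy the archetype and replace only the parameters that differ.

    overrides maps (instrument, parameter) pairs to new values.
    """
    section = copy.deepcopy(ARCHETYPE)
    section["name"] = name
    for (instrument, parameter), value in (overrides or {}).items():
        section[instrument][parameter] = value
    return section

def sections_for(form):
    """Build one section per distinct label in a form list (Intro and coda skipped)."""
    labels = sorted({s for s in form if s not in ("Intro", "coda")})
    return {label: make_section(label) for label in labels}

section_b = make_section("B0", {("default", "tempo"): [80]})
print(section_b["default"]["tempo"], ARCHETYPE["default"]["tempo"])
print(sorted(sections_for(["Intro", "A0", "B0", "A0", "coda"])))
```

The deep copy matters here: without it, changing a parameter of one section would silently change the archetype and every section derived from it.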

Having presented a way of implementing sections, nested inside the superstructure of form, one can now understand the hierarchical dimension of the model. While it has a vertical dimension of six modules representing all the agents involved, in their order of dependency, it also has a horizontal dimension of one section at a time, in which each section appears to be independent of the others. The implementation of the following modules consists of generating the materials for these agents in a modular fashion: generating the material for a hypothetical section A, then repeating the entire process for a section B, again for a section C, and so on.

8 Second Module

In this section we will detail how we have formalised the harmonic layer and which assumptions and algorithms were used.

In order to formalise any accompaniment or melodic process, the harmonic environment has to be defined, and represented in symbolic terms. Fado musics are tonal and, as such, tonality has to be modelled. That kind of formal exercise has already been done several times, namely in the work of Elaine Chew, who presented a mathematical model for tonality and its description in formal terms (Chew, 2014). We have also drawn inspiration from the work of Martin Rohrmeier (2011), who proposed a generative syntax of tonal harmony.

The predefined objects and classes in the software that we have used shaped how the problem was formalised, in the sense that we were building our reasoning on top of them. Tonality, in Symbolic Composer, is a class defined as a foundational set of audible frequencies: $Tonality_x=\{freq_1 , freq_2 , freq_3 , \dotsc , freq_n \}$. Therefore, a tonality is often equated to a scale from which the pitches will be derived. These sounds can be virtually anything, and are not constrained by any boundaries. Users can literally build artificial sonic worlds by defining their own tonalities. While it is possible to define a tonality in terms like:

\begin{equation*} Tonality_{example}=\{245\,Hz , 277\,Hz , 389\,Hz , 411\,Hz , 447\,Hz \} \end{equation*}

for the most part this is not convenient. Alternatively, by using a morphism it is possible to map frequencies into a pair of symbols such that the first one is an alphabetic character corresponding to a pitch and the second one is an integer corresponding to an octave. An octave establishes a principle of equivalence such that an increase of one unit corresponds to a doubling of the frequency. Within an octave there is room for twelve equidistant pitches. The 128 available MIDI pitches are defined this way, with a reference frequency of A4 = 440 Hz. At present, this convention is widely used and there is no need for further exploration and formalisation in the scope of this article. It suffices to say that a tonality can also be defined, using this convention, in terms of a scale, such as:

\begin{equation*} Tonality_{example}=\{C3, D\#3, E3, F\#3, G3, A\#3, B3\}. \end{equation*}

In the particular case of fado, as observed in the practice and from the examples of the corpus, there are two tonalities involved and their possible transpositions within the twelve-tone system. They correspond to the major and minor modes, since fado is a part of a tonal tradition in the Western sense of the term. Software limitations prevent us from actually defining different ascending and descending versions of a scale, as a melodic minor mode would require. Since scales, in this case, are merely sets of frequencies (or pitch classes) in the abstract, melodic movement can only be dealt with locally and contextually, requiring more information. As such, three different minor sets are defined so we can switch between them, locally, as needed:

\begin{equation*} Tonality_{Major}=\{C3, D3, E3, F3, G3, A3, B3\} \end{equation*} \begin{equation*} Tonality_{Minor}=\{C3, D3, Eb3, F3, G3, Ab3, Bb3\} \end{equation*} \begin{equation*} Tonality_{Harmonic}=\{C3, D3, Eb3, F3, G3, Ab3, B3\} \end{equation*} \begin{equation*} Tonality_{Melodic}=\{C3, D3, Eb3, F3, G3, A3, B3\} \end{equation*}Two modifier functions were also considered to account for all possible transpositions, and to allow the definition of either local or global modulations:

\begin{equation*} f:\forall n \in Tonality_x \rightarrow n = (n+t+m):\{t, m \in \mathbb{Z} | [-6,6]\} \end{equation*}In this way, the sonic domain for fado is defined and constrained in a modular fashion. It could be argued that not all transpositions have the same weight, and indeed within the corpus there are some degrees that are never present. Therefore, the value of $t$, formalised above, could be further constrained to reflect these weights. At present we do not feel that need, since in current practice, and among skilled performers, transpositions are often chosen based on the vocal range of the singer and do not reflect the original tonality the composer has chosen. Moreover, the lack of use of some tonalities often reflected either the ease of playing them on the chosen instruments or the mere inability of amateurs to perform them; it did not reflect any intrinsic aesthetic decision regarding the musical content. Since these works are to be performed by the computer, and the computer has no difficulty in performing them in whatever transposition is assigned, it makes no sense to constrain them artificially. The modifier $m$, however, is constrained, and only makes sense in very specific cases. Not all modulations occur in the practice, and often this modifier is contextually attached to the harmonic progressions used, namely to generate secondary dominants, which is developed further ahead.
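The definitions above can be illustrated with a minimal Python sketch. It is not the Symbolic Composer implementation: the function names (`note_to_midi`, `midi_to_freq`, `transpose`) are hypothetical, and it assumes the common MIDI numbering in which A4 is note 69, so that C3 is note 48. The bounds on $t$ and $m$ are read here as integers in $[-6, 6]$.

```python
# Semitone offsets of each note letter above C in the twelve-tone system.
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_to_midi(name: str) -> int:
    """Convert a name such as 'Eb3' or 'D#3' to a MIDI number (assumes A4 = 69)."""
    letter, rest = name[0], name[1:]
    accidental = 0
    while rest and rest[0] in "#b":
        accidental += 1 if rest[0] == "#" else -1
        rest = rest[1:]
    octave = int(rest)
    return 12 * (octave + 1) + NOTE_OFFSETS[letter] + accidental


def midi_to_freq(midi: int) -> float:
    """Equal-tempered frequency in Hz, with the reference A4 = 440 Hz."""
    return 440.0 * 2 ** ((midi - 69) / 12)


# The four tonalities from the text, written as note names in octave 3.
TONALITIES = {
    "major": ["C3", "D3", "E3", "F3", "G3", "A3", "B3"],
    "minor": ["C3", "D3", "Eb3", "F3", "G3", "Ab3", "Bb3"],
    "harmonic": ["C3", "D3", "Eb3", "F3", "G3", "Ab3", "B3"],
    "melodic": ["C3", "D3", "Eb3", "F3", "G3", "A3", "B3"],
}


def transpose(tonality: str, t: int = 0, m: int = 0) -> list[int]:
    """Apply n -> n + t + m to every pitch of a tonality.

    t is the global transposition and m the local modulation offset,
    both in semitones and both restricted to [-6, 6].
    """
    if not (-6 <= t <= 6 and -6 <= m <= 6):
        raise ValueError("t and m must lie in [-6, 6]")
    return [note_to_midi(note) + t + m for note in TONALITIES[tonality]]


if __name__ == "__main__":
    # C major moved up two semitones gives the pitches of D major.
    print(transpose("major", t=2))
    print(midi_to_freq(note_to_midi("A4")))
```

Keeping $t$ and $m$ as separate arguments mirrors the distinction drawn in the text between a global transposition and a local modulation, even though both reduce to the same arithmetic.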

While it is now possible to derive all possible pitches for the harmonies and melodies from this foundational base, it is clear that this structure, by itself, is a huge determinant in shaping the final sounds and in the characterisation of the practice. It seems also obvious that a simple change in these definitions can radically alter the final result. After some experimentation we dare to say that entirely different practices and genres might be achieved simply by changing this parameter alone.

Automatic harmonisation is now considered a well-defined problem, especially when seen from the typical viewpoint of generating logical, yet inventive, chords to accompany a given melody. One of the most recent papers by Pachet and Roy has shown that “standard Markov models, using various vertical viewpoints, are not adapted for such a task, because the problem is basically overconstrained” (Pachet & Roy, 2014). Their proposed solution consists in applying their technique of Markov constraints to fioriture (melodic ornamentation, defined as random walks with unary constraints). The end results are claimed to be more effective and musically interesting.

While these approaches seem promising, they all rely on the idea that there is a base melody per se to be harmonised. However, in the case of fado, what one usually sees in performance is the reverse: there is a prior harmonic structure, on top of which the text is sung. So, while the rhythm of the melody and its general contour derive from constraints emerging from the lyrical structure, the pitches are mostly derived from the scalar structure of the underlying harmonic progression. Hence, one needs another kind of approach to solve this problem.

If the overall form is the foundation that structures the span of time and the internal recurrence of patterns inside a fado, the harmonic foundation, reflected in the scalar structures, is the foundation of each section regarding the way the pitches behave. By choosing a scale, derived from the tonalities assigned, one is able to derive pitches that will be coherent with the melody, accompaniment and counter-melodies, within context, and will make the existence of the piece possible.

8.1 Harmonic Progressions

We made a list containing all the relevant harmonic progressions found in the musical scores and their respective weights.

A chord is formalised as an object corresponding to a set of symbols that is defined using four attributes (or modifiers): quality, root, octave and inversion.

\begin{equation*} Ch_x = \langle Q, r, o, i \rangle \end{equation*}The quality is a set that specifies the amount and relation between the symbols that compose the chord. A major chord is predefined as a triad in which the interval between the root and the second symbol is of four semi-tones, while the interval between the root and third symbol is of seven semi-tones, for instance:

\begin{equation*} Q_{maj} = \{0,4,7\} ; Q_{min} = \{0,3,7\} ; Q_7 = \{0,4,7,10\} , \text{etc.} \end{equation*}There are numerous possible qualities for chords (diminished, augmented, extensions …) and all may be formalised in the same fashion.

The root of a chord is a modifier that assumes the form of an alphabetic symbol relative to the pitch of the defined tonality that is active at the time the chord is called. Hence, in the case of a scale C major in octave 3, a root “a” or “-a” would correspond to pitch C3, a root “b” would correspond to pitch D3, and a root “c” to E3. Negative values work in the opposite direction, as such, a root “-b” would correspond to pitch B2 and a root “-c” to a pitch A2, etc.

The octave of a chord is also another modifier, which is an integer corresponding to the octave in which the root is to be placed. The inversion is yet another modifier (a movable rotation), corresponding to an integer, which will determine which element from the ones available in the quality set will correspond to the first note. As such, in a C major scale in octave 3, a root “b”, and a quality “minor” (defined as $\{0,3,7\}$), would give back pitches “D3, F3, A3”. So, an inversion “0” would mean “D3, F3, A3”; an inversion “1”, “F3, A3, D4”; “2”, “A3, D4, F4”; “3”, “D4, F4, A4”. As always, negative values work in opposite directions, so, an inversion “-1” would mean “A2, D3, F3”; “-2”, “F2, A2, D3”; “-3”, “D2, F2, A2”, etc.
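The behaviour of the root and inversion modifiers can be checked with a small Python sketch. This is an illustrative reading of the description above, not Symbolic Composer code: all function names are hypothetical, pitches are given as MIDI numbers (assuming C3 = 48), and the octave modifier is simplified to an `octave_shift` relative to the octave of the scale.

```python
def degree_index(root: str) -> int:
    """Map a root symbol to a signed scale-degree index.

    'a' and '-a' -> 0, 'b' -> 1, 'c' -> 2, '-b' -> -1, '-c' -> -2.
    """
    sign = -1 if root.startswith("-") else 1
    return sign * (ord(root.lstrip("-")) - ord("a"))


def degree_pitch(scale, index):
    """Pitch of a (possibly negative) scale degree, wrapping across octaves."""
    octaves, step = divmod(index, len(scale))
    return scale[step] + 12 * octaves


def invert(pitches, k):
    """Rotate a chord by k positions; notes that wrap around move by an octave."""
    n = len(pitches)
    return [pitches[(k + j) % n] + 12 * ((k + j) // n) for j in range(n)]


def realise_chord(scale, quality, root, octave_shift=0, inversion=0):
    """Build the pitches of a chord <Q, r, o, i> over the active scale."""
    base = degree_pitch(scale, degree_index(root)) + 12 * octave_shift
    return invert([base + interval for interval in quality], inversion)


# C major in octave 3 as MIDI numbers: C3 D3 E3 F3 G3 A3 B3.
C_MAJOR_3 = [48, 50, 52, 53, 55, 57, 59]
Q_MIN = (0, 3, 7)

if __name__ == "__main__":
    for inversion in (0, 1, 2, 3, -1, -2, -3):
        print(inversion, realise_chord(C_MAJOR_3, Q_MIN, "b", inversion=inversion))
```

With root “b” and the minor quality, inversion 0 yields 50, 53, 57 (D3, F3, A3) and inversion -1 yields 45, 50, 53 (A2, D3, F3), matching the examples given in the text.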

A harmonic progression can be thus formalised as a list of chords:

\begin{equation*} HP_x = \langle Ch_1 , Ch_2 , Ch_3 , Ch_4 , \dotsc \rangle \end{equation*}As an example, the formalisation of a typical Tonic | Dominant | Dominant | Tonic progression can be done as:

\begin{equation*} \begin{aligned} HP_{T|D|D|T} = \langle \langle \{0,4,7\}, c, 3, 2\rangle, \langle \{0,4,7,10\}, g, 3, 0\rangle, \\ \langle \{0,4,7,10\}, g, 3, 0\rangle, \langle \{0,4,7\}, c, 3, 2\rangle \rangle \end{aligned} \end{equation*}This specific case reflects the formalisation of a progression of I6/4 | V7 | V7 | I6/4, in the third octave. In this example, the tonic chord is presented in the second inversion, so that its bass note is a common note with the following dominant.

Most of these modifiers, instead of being fixed, can be assigned to variables. The modifier “inversion” can be further modified by an offset variable, to increase variability. As an example, attaching a fixed offset to the inversion of every chord could easily be done as follows:

\begin{equation*} \begin{aligned} HP_{T|D|D|T} = \langle \langle \{0,4,7\}, c, 3, (2+i)\rangle, \langle \{0,4,7,10\}, g, 3, i\rangle, \\ \langle \{0,4,7,10\}, g, 3, i\rangle, \langle \{0,4,7\}, c, 3, (2+i)\rangle \rangle : i \in \mathbb{Z} \end{aligned} \end{equation*}Defining the variable $i$ as “+1” would cause all chords to be offset by one inversion, so that the tonic chord would be presented in “third inversion”. In this case, that would be the fundamental state again (although one octave higher), while the dominant chord would go to the first inversion, which would still create a very acceptable voice-leading context with minimum finger movement (approaching what a human player would do on a real instrument).
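The effect of the offset $i$ on the Tonic | Dominant | Dominant | Tonic progression can be sketched in Python as follows. The sketch is only illustrative: the helper names are hypothetical, and for brevity the root and octave modifiers are collapsed into a single MIDI root (assuming C3 = 48 and G3 = 55) rather than resolved through the active tonality.

```python
def invert(pitches, k):
    """Rotate a chord by k positions; notes that wrap around move by an octave."""
    n = len(pitches)
    return [pitches[(k + j) % n] + 12 * ((k + j) // n) for j in range(n)]


def realise_progression(progression, i=0):
    """Realise each chord (quality, root, inversion) with a shared inversion offset i."""
    return [
        invert([root + interval for interval in quality], inversion + i)
        for quality, root, inversion in progression
    ]


Q_MAJ = (0, 4, 7)
Q_7 = (0, 4, 7, 10)
C3, G3 = 48, 55  # assumed MIDI numbers for the two roots

# I6/4 | V7 | V7 | I6/4: tonic in second inversion, dominant in root position.
T_D_D_T = [(Q_MAJ, C3, 2), (Q_7, G3, 0), (Q_7, G3, 0), (Q_MAJ, C3, 2)]

if __name__ == "__main__":
    print(realise_progression(T_D_D_T))        # offset i = 0
    print(realise_progression(T_D_D_T, i=1))   # every chord one inversion higher
```

With $i = 0$ the tonic and the dominant share G3 (55) as their lowest note. With $i = 1$ the tonic becomes 60, 64, 67, the root position one octave higher, and the dominant becomes 59, 62, 65, 67, its first inversion.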

The implementation of the harmonic progressions, as seen in the corpus, can be done as weighted lists. To optimise resources, all possible progressions are defined as a sequence, in which the position in the list identifies the progression.

\begin{equation*} Possible\; HPs = \{HP_1 , HP_2 , HP_3 , HP_4 , \dotsc \} \end{equation*}Each defined progression has scales associated with it, reflecting both the tonality that is active and a modifier offset for local modulations (concrete values for the parameter $m$). For instance, if in a given progression there is a secondary dominant, this means that this progression involves two different versions of a given scale, therefore the modifier has to change accordingly. If a progression has borrowed chords from the relative, then it involves two different scales. As such, parallel to the sequence of possible harmonic progressions a sequence of lists of scales is also defined, respecting the same order, so that their position matches.

\begin{equation*} Scale_x = \langle Tonality_x, t, m \rangle \end{equation*} \begin{equation*} S_x = \langle Scale_1, Scale_2, Scale_3, \dotsc \rangle \end{equation*} \begin{equation*} Possible\; Scales = \{ S_1 , S_2 , S_3 , S_4 , \dotsc \} \end{equation*}Then, further sets for each section are built containing the weights, in the same order the progressions and scales were defined.

\begin{equation*} Weights\; for\; Section\; Xn = \{w_1 , w_2 , w_3 , w_4 , \dotsc \} \end{equation*}As such, when one wants to assign a progression and a scale for a section $Xn$ there is a probability $w_n$, constraining both the choice of $HP_n$ and $S_n$ simultaneously.

\begin{equation*} \forall \; w_n\; \exists \; HP_n , S_n : (HP_n , S_n) \Rightarrow Xn \end{equation*}Implementing the progressions in this modular fashion means one only has to define them once, and can alter them at any stage. It is also easy to change the weights for each section, alter them, nullify some of their elements (meaning that in a given section some progressions will never be picked, even though they are defined) or force them by choosing very high values. This also means one can make them vary with time, if one decides to define the list of weights as variables instead of fixed values. The same can be said about the progressions and scales themselves, since they are defined as symbolic data. In this way, instead of needing to create a complex generative function to act as a second artificial intelligence to deal with the coefficient of representativeness, we just had to make a second set of weights with a more unpredictable behaviour. The program might then either pick progressions and scales from the set of weights that fully mirrors the corpus, or pick them by drawing values from the unpredictable set of weights, hence creating more adventurous musical results.
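A minimal Python sketch of this weighted selection is given below. It is an assumption-laden illustration rather than the actual system: the progression and scale entries are placeholder strings, the weight values are invented for the example, and the `adventurous` flag is a hypothetical stand-in for whatever mechanism switches between the two sets of weights.

```python
import random

# Parallel sequences: position n identifies both HP_n and S_n (placeholders).
POSSIBLE_HPS = ["HP1", "HP2", "HP3", "HP4"]
POSSIBLE_SCALES = ["S1", "S2", "S3", "S4"]

# Illustrative weights for one section; a weight of 0 nullifies a progression.
CORPUS_WEIGHTS = [10, 5, 0, 1]
ADVENTUROUS_WEIGHTS = [1, 1, 3, 3]


def pick_progression(weights, rng=random):
    """Draw one index with probability proportional to its weight.

    The same index selects the progression and its list of scales,
    so a single weight constrains both choices at once.
    """
    if not (len(weights) == len(POSSIBLE_HPS) == len(POSSIBLE_SCALES)):
        raise ValueError("weights must match the defined progressions")
    index = rng.choices(range(len(weights)), weights=weights, k=1)[0]
    return POSSIBLE_HPS[index], POSSIBLE_SCALES[index]


def pick_for_section(adventurous=False, rng=random):
    """Use the corpus weights by default, or the second set on request."""
    weights = ADVENTUROUS_WEIGHTS if adventurous else CORPUS_WEIGHTS
    return pick_progression(weights, rng)


if __name__ == "__main__":
    rng = random.Random(2024)  # seeded only to make the demonstration repeatable
    print([pick_for_section(rng=rng) for _ in range(4)])
    print([pick_for_section(adventurous=True, rng=rng) for _ in range(4)])
```

Because the progressions, scales and weights are plain data, any of them can be replaced or made to vary over time without touching the selection function, which is the modularity the text describes.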

The option to use weighted lists instead of other methods relies on the fact that we believe this best represents the process that actually happens in the practice. People do not generate progressions out of thin air; instead, they seem to rely on previous implicit knowledge, in a kind of mental scheme of already known progressions that work within the tradition. This is done either by copying the records, other musicians or by resorting to other songs they already know. It is not by chance that harmonic progressions in the musics of fado also depend on the education of the musicians. Schooled ones like Reynaldo Varela, Alain Oulman or Jorge Fernando(10) often employ more complex progressions, since they are borrowing them from other traditions, namely from the erudite music or jazz universes. Therefore, by using weighted lists this previous knowledge of which progressions work best and which not (also according to their temporal context within the repertoire), its distribution among the overall population, and the choice to pick them, is modelled.

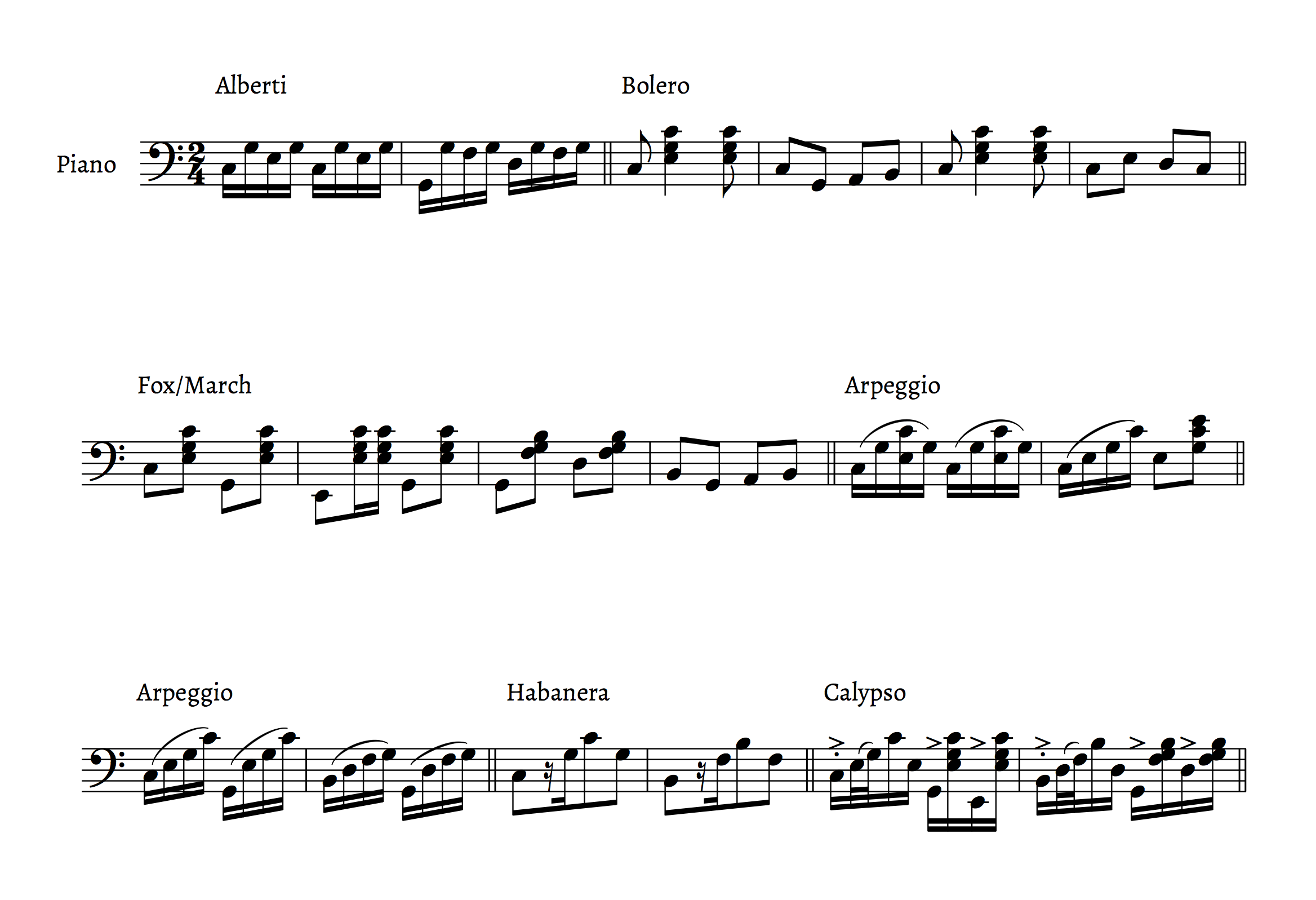

8.2 Ostinati

The analysis of the corpus and the observation of players have shown that the accompaniment layer in fados consists mainly of ostinati: stock rhythmic patterns that repeat over and over during a certain period. Sometimes an ostinato runs through the entire fado, but other times it spans only some sections, with contrasting ostinati in the others. In order to mimic this layer, we studied the ostinati that are most often used. Most of them are recurrent figurations widely used in other performative traditions; they already have established designations and categories, as well as rules regarding the way players should approach them. One can find popular march, Alberti-bass-like figurations, habanera, Cuban bolero, fox, and hybrid versions between them, among several others.

The first approach to this problem was to make a weighted list of all the relevant patterns observed and then let the computer pick one, because this models what happens in actual practice: players just choose one figuration from a previously known group. The formalisation of these stock patterns was done by literally expressing the archetypical stock figurations as music fragments, as shown in Fig. 4.

In order to facilitate the process, each ostinato was broken into two parts, representing what happens in real practice: a bass layer and a harmonic chordal layer. Often, the bass and chordal layers are played by the same instrument and do not overlap each other, but other times they can be separated, especially when there is a bass instrument present. Moreover, the chordal layer usually has notes deriving from the chords while the bass layer may present additional scalar material. Both layers were expressed as lists containing the explicit durations and symbols of the patterns in their atomic form. The atomic form is the minimum fragment that needs to be provided so it can be looped in order to generate the complete accompaniment. In order to be looped correctly, the total duration of this fragment should either match the total duration of a section or divide it without a remainder. In the case of fado, since the sections are four binary bars long, equivalent to eight quarter notes, this implies fragments with a length equivalent to two, four, or eight quarter notes. Both the durations and symbols are represented in lists in which the position matters, since it is this linearity and correspondence that allows each value to be matched with the others at a later stage.
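The looping of an atomic fragment can be sketched in Python as follows. The function name, the symbols and the rhythm in the example are hypothetical and are not taken from the corpus; durations are expressed in quarter notes, using `Fraction` so that shorter values such as eighth notes remain exact.

```python
from fractions import Fraction


def loop_fragment(durations, symbols, section_length=8):
    """Repeat an atomic ostinato fragment until it fills one section.

    durations and symbols are parallel lists: position k of one list
    corresponds to position k of the other. Lengths are in quarter notes.
    """
    if len(durations) != len(symbols):
        raise ValueError("durations and symbols must have the same length")
    total = sum(durations)
    # The fragment must fit into the section a whole number of times.
    if total <= 0 or section_length % total != 0:
        raise ValueError("fragment duration must divide the section length")
    repeats = int(section_length // total)
    return list(durations) * repeats, list(symbols) * repeats


if __name__ == "__main__":
    # A hypothetical two-quarter-note fragment made of four eighth notes.
    eighth = Fraction(1, 2)
    durations, symbols = loop_fragment([eighth] * 4, ["a", "c", "b", "c"])
    print(len(durations), sum(durations))
```

A fragment lasting three quarter notes would be rejected, since it cannot fill a section of eight quarter notes without a remainder.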