Music is a temporal art: the sequencing of events in time is at its core. This means that a listener can form expectations of what is to follow, and those expectations may reflect every aspect of the music (pitch, timbre, loudness, event timing) such that they may change not only as new events occur (Jones, 1992), but also as past information is integrated (Bailes et al., 2013). Concomitant with the musical flow and its accompanying expectations, most listeners may perceive and experience affect.

My purpose here is to use time series analysis (TSA) models of live-performed musical flow and its cognition (Dean & Bailes, 2010) to develop BANG, the beat and note generator, a live algorithm that generates further musical streams. The Live Algorithms in Music network was created in 2004 by Tim Blackwell and Michael Young, who spearheaded this movement (Blackwell & Young, 2004). Most approaches to generating musical streams consequent on input have used Markov-chain models, which primarily aim to generate outputs that retain the conventions of the input system, be it tonality in music or syntacticality in text. BANG does not aim to retain conventions, and is thus widely applicable; time series models, particularly multivariate models, seem not to have been used previously in this generative context and are quite distinct from Markov models. TSA recognises that streams of events such as those in music (Beran, 2004) or in most perceptions are autocorrelated: that is, a certain number of previous events predict (and may influence) the next few events to some degree and in a systematic manner. Consequently the most common form of TSA is called autoregressive modelling (AR), and may also use other “external” time series as predictors. Time series of acoustic data are usually highly autoregressive and thus to some extent self-predictive (e.g., Dean & Bailes, 2010; Hazan et al., 2009; Serra et al., 2012). A time series of acoustic intensity measures (now treated as external or “exogenous”) may also be useful in modelling listeners' perceptions (“endogenous”) of the accompanying structural change and expressed affect: more broadly, musical structure may aid in predicting perceived affect. AR models are a large and common univariate subset of the broad field of time series analysis, which I will describe more fully in a later section of the Introduction, where I also discuss somewhat more ecological multivariate models.

A long-standing approach to understanding musical affect indeed focuses on expectation. In Meyer’s original view (Meyer, 1956), the fulfilment of an expectation might induce an impression of a positive progression, and vice versa, and these ideas have been developed extensively (Huron, 2006). Since Meyer, two complementary types of computational modelling approaches have been used to understand the interfaces between music structure and affect: Markov chain modelling and its application in information theoretic analysis (Cope, 1991; Pearce & Wiggins, 2006), and time series analysis. Some studies have begun to show how these approaches may complement each other (Pearce, 2011; Gingras et al., 2016). Given their application to modelling both structure and affect, the approaches may be considered as potentially not only modelling music itself, but also music cognition. We aim to bring these two approaches together in the generation of music, and our purpose in the present article is to develop a generative application of the time series modelling approach. For Markovian models, Pachet’s Continuator is a well established approach (Pachet, 2003): Pachet and Roy view a Markov chain primarily as a local prediction mechanism: “items at locations early on in the sequence have no effect on the items at locations further on” (Pachet & Roy, 2013, p. 2). We will compare Continuator with the present work in Section 6. There are numerous other data learning algorithms, most reviewed in Fernández & Vico (2013), and some algorithms are newly attracting attention in event-based systems like music because of their power, such as the extreme learning algorithm (Tapson et al., 2015).

The organisation of the article is as follows. In the remaining sections of the Introduction, I describe briefly the background in information theoretic and more extensively that in time series models of music, concluding with a description of the objectives of BANG and some background in generative music systems. After a description of a univariate implementation (Section 2), I proceed to a multivariate system (Section 3). Section 4 considers transformations of the BANG output that a user may apply, and Section 5 considers memory and autonomy. Section 6 addresses creative and generative uses of BANG, and its future development and a path towards evaluation.

Information theoretic analysis stems from the classic work of Shannon, and considers music, for example, as a discrete alphabet of pitches, whose sequencing is determined systematically by a music creator, and may provide statistical predictabilities to a listener. Modelling approaches, notably those encoded in IDyOM (the information dynamics of music model: see Pearce, 2005; Pearce & Wiggins, 2012), treat note sequences as variable “order” Markov chains, and allow prediction of future sequences on the basis of both current and past ones, treated as symbolic events. “Order” refers to the number of immediately preceding events used in the model; note that musical events are not usually evenly spaced in time, an issue to which we return in the discussion. Multiple “viewpoints” may be used (Conklin, 2013; Conklin & Witten, 1995), where pitch, velocity and duration (i.e., rhythmic) information streams, and derived information streams (such as pitch interval, the distance between pitches) can all be used for mutual prediction. An information theoretic approach can take account both of the current piece, and also of the larger corpus of music in which it is embedded, as part of its process of prediction. In spite of this, with IDyOM, the longest order of Markov chain which is usually found useful in models is about 10 events, so that it provides a combination of local and corpus-wide modelling. What it can predict at any point is the ongoing sequence.
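To make the notion of “order” concrete, the following is a minimal sketch of a fixed-order Markov predictor over symbolic pitches. It is an illustration only, not IDyOM's implementation (which uses variable orders, multiple viewpoints, and corpus knowledge); the function names and the pitch sequence are hypothetical.

```python
from collections import Counter, defaultdict
import math


def train_markov(sequence, order):
    """Count which symbol follows each context of `order` preceding symbols."""
    counts = defaultdict(Counter)
    for i in range(order, len(sequence)):
        context = tuple(sequence[i - order:i])
        counts[context][sequence[i]] += 1
    return counts


def next_distribution(counts, context):
    """Relative frequencies of the continuations observed after `context`."""
    followers = counts.get(tuple(context))
    if not followers:
        return {}  # context never seen: this simple model makes no prediction
    total = sum(followers.values())
    return {symbol: n / total for symbol, n in followers.items()}


def information_content(probability):
    """Surprisal in bits: less probable continuations carry more information."""
    return -math.log2(probability)


# Hypothetical MIDI pitch sequence, used purely for illustration.
pitches = [60, 62, 64, 60, 62, 64, 60, 62, 65]
model = train_markov(pitches, order=2)
dist = next_distribution(model, [60, 62])
```

Here the context (60, 62) has been followed twice by 64 and once by 65, so the sketch assigns those continuations probabilities of 2/3 and 1/3 respectively.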

While Markovian approaches have been developed so far primarily for symbolic (that is, note-based) music, such as that of the piano, they are extensible to sound-based music. By sound-based music (Landy, 2009) we mean music that does not necessarily emphasise pitch and regular rhythms, nor even necessarily use instrumental sounds (such as those of the piano or the violin). An information theoretic approach to such music can for example use sequences of MFCC (mel-frequency cepstral coefficient) time-windowed analyses of the spectral content, either directly for Markovian analysis, or as the basis for discovery of recurrent MFCC-sequence patterns that can be grouped as symbolic representations for treatment in a similar way to pitch in note-based music. PyOracle is an open source Python tool for this, and OMAX a commercial system, developed by Dubnov, together with colleagues at IRCAM with large resources (Bloch et al., 2008; Surges & Dubnov, 2013). These have been used both on note- and sound-based sequences for generative purposes. In all approaches, controlled temporal variation and imprecision occur (an event in a notated piece shown as representing one unit of time may commonly occupy anything between 0.75 and 1.5 units), and grouping needs to be able to allow for this, a confluence of the continuous variable, time, and discrete event parameters in the case of note-based music. In using this approach to generate music, simulation (that is, using a formed model to generate events) or analysis and response (that is, using incoming data as the sole or a contributing source for ongoing modelling and prediction or simulation) may be useful. IDyOM has been used generatively only to a limited extent, to make (tonal) music (Wiggins et al., 2009).

Autoregressive time series analysis (hereafter referred to as TSA) is important in many fields from oceanography and ecology to cognitive science (Box et al., 1994; Cryer & Chan, 2008). In all these fields, serial correlations (termed autocorrelation) between events occur, in that an event is to some degree influenced and/or predicted by the preceding event(s). In some cases, such as the motor activity of reaching for an object, it is an obvious necessity that the next discernible position of the grasping hand will be close to the last, which thus becomes both a strong predictor and influence. In other cases, the expectation of such serial correlation is less obvious, and its neglect has caused (and does cause) many serious errors of interpretation in data analysis (Bailes et al., 2015). An autocorrelated series of data is not an independent set, and so most of the conventional statistical approaches to analysis (which assume normal distributions of independent variables) are not meaningfully applicable. For example, even a recurrent sequence of simple cognitive tests may reveal that the result of one is influenced by those of preceding events (Dyson & Quinlan, 2010), and lack of attention to autocorrelation has been noted as a huge problem contributing to the unreliability of many results in neuroscience (Button et al., 2013; Carp, 2012).
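The serial correlation described above can be quantified by the sample autocorrelation at a given lag. The following minimal sketch uses the standard estimator; the function name and the two example series are illustrative assumptions rather than material from the studies cited.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of `series` at `lag` (standard biased estimator)."""
    n = len(series)
    mean = sum(series) / n
    denominator = sum((x - mean) ** 2 for x in series)
    numerator = sum(
        (series[t] - mean) * (series[t - lag] - mean) for t in range(lag, n)
    )
    return numerator / denominator


# A steadily rising series: each value lies close to its predecessor.
rising = list(range(1, 11))
# A strictly alternating series: each value is the opposite of its predecessor.
alternating = [1, -1] * 5

r_rising = autocorrelation(rising, 1)
r_alternating = autocorrelation(alternating, 1)
```

The rising series gives a clearly positive lag-1 autocorrelation and the alternating series a clearly negative one; in neither case are successive values independent.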

So one essence of time series analysis is discerning the nature of the autocorrelations, and incorporating this into the development of autoregressive models of the process. TSA has a long history in music, as last reviewed extensively in 2004 (Beran, 2004). Beran (2004) especially discusses applications to the analysis of symbolic musical structure, such as note pitches, but also event timings. Early uses included identification of beats and rhythmic structure, evidenced by autocorrelation (Brown, 1993), and many audio analyses involve elements of it (e.g., linear predictive coding). Eck (2006) showed how an autocorrelation phase matrix could be useful for metrical and temporal structure analysis. It is perhaps helpful to note that a temporal model of a signal, such as that of TSA, has a precise counterpart in spectral models; and related to this, some acoustic filters have time series properties, though only a few, such as the ARMA and Kalman filters (e.g., Deng & Leung, 2015), are data-driven models rather than fixed filters. Harvey (1990) provides a clear in-depth explanation of these relationships.

TSA is also used in music information retrieval, often as a component of systems for tasks such as detection of genre and instrument classification. For example, in a study on the detection of cover songs in popular music (Serra et al., 2012), it was found that AR and threshold AR (in which different AR models apply in different parameter ranges), could predict time series of acoustic and musical descriptors, though modestly in most cases such as tonal descriptors. Prediction was best for rhythm descriptors, probably because of the longer time windows over which these are necessarily determined. The purpose of this study was not to test hypotheses about the “process underlying music signals” (p. 21) but rather to develop models of the descriptors on a piece-by-piece basis so that they could be used for cross-prediction (the model of one piece predicting the descriptor series of another). The degree to which this was successful was then harnessed successfully as a measure to detect the similarities the covers share with their originals, notably in tonal descriptors. It should be noted that one common acoustic analysis trait, often seen in MIR, is to measure descriptors with overlapping windows, and then combine a group of window measurements to form an element of the descriptor series studied: for TSA this has dangers, as the overlap itself creates a degree of autocorrelation, since successive measures share some data. Care needs to be taken with this.

Time series models can take account both of the autocorrelations and of so-called “exogenous” factors in music perception, those external feature streams which play a role in modelling and probably influencing listener perceptions. For a music listener perceiving the expressed structural change and affect of a piece to which they listen, predictive exogenous factors include the temporal pattern of pitch, timbre, and loudness. We showed that perceived change and affect are substantially predicted by temporal sequences of acoustic intensity (Bailes & Dean, 2012; Dean et al., 2014b; Dean et al., 2014), and translated this into a successful causal intervention experiment (Dean et al., 2011). Subsequently we have obtained predictive models of affect utilising also continuous measures of engagement, perceived effort, and sonic source diversity (e.g., Olsen & Dean, 2016). Some of the more powerful and informative models within this work are obtained using multivariate Vector Autoregression (VAR), in which multiple outcome variables are considered, and they may be mutually predictive (particularly in the case of perceptual features). For this reason, we develop multivariate generative models later in this article.

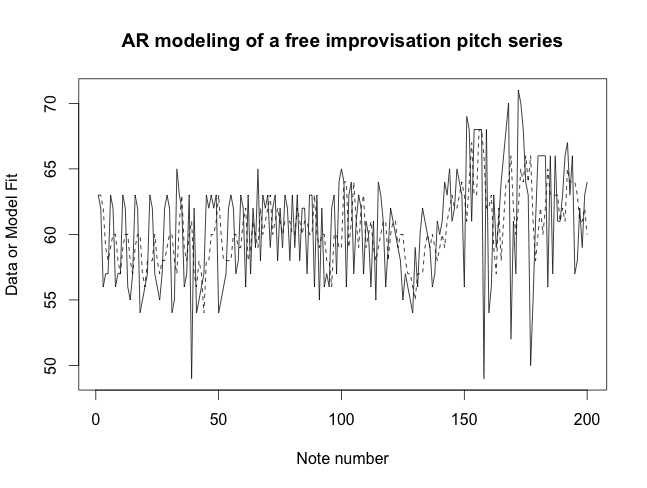

Fig. 1 shows a simple example of univariate TSA, modelling the pitch structure of part of a solo keyboard improvisation (the first 200 events). A purely autoregressive model provides a reasonable fit (squared residuals are less than 0.4% of squared data values). Note that a precise fit of a model to a pre-existent time series depends on knowledge of the whole series: it is not a prediction. If a well fitting model is made of the first 90% of a time series, and then used to predict the remaining 10% without any further input data on which to autoregress, then its fit will be far worse.

The improvisation was amongst those by professional improvisers analysed in published (Dean et al., 2014a; Dean & Bailes, 2013) and forthcoming work, and was the first item performed by the musician (prior to any experimental instructions, which applied to subsequent pieces, as discussed there). The performer used a Yamaha Grand Piano with MIDI-attachment (the Disklavier), as detailed previously. The first 200 events of this improvisation are displayed as the “data” (solid line), and the pitch series is provided within the supplementary material. An AR model derived by our standard code is graphed as the “model fit” (dotted line). The values displayed are MIDI note numbers (where 60 corresponds to middle C of the piano). The model was AR4 (four autoregressive lags), and required first differencing. The statistical fit shown is that to the undifferenced original data (dotted line). It is apparent that the fit has a more limited range than the data, but the squared residuals are only about 0.4% of the squared data points, so the fit profile is fair.
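The distinction drawn above between fitting a known series and predicting unseen values can be sketched numerically. The example below is an assumption-laden illustration, not the analysis behind Fig. 1: it simulates a hypothetical AR(1) series, estimates a single coefficient by least squares on the first 90%, and then compares one-step-ahead predictions (which continue to receive the true preceding value) with a free-running forecast that receives no further data.

```python
import random


def simulate_ar1(phi, n, seed):
    """Generate a zero-mean AR(1) series with unit-variance Gaussian noise."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n - 1):
        x.append(phi * x[-1] + rng.gauss(0.0, 1.0))
    return x


def fit_ar1(series):
    """Least-squares estimate of the AR(1) coefficient (no intercept)."""
    numerator = sum(series[t] * series[t - 1] for t in range(1, len(series)))
    denominator = sum(v * v for v in series[:-1])
    return numerator / denominator


def mse(actual, predicted):
    """Mean squared error between two equal-length sequences."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


series = simulate_ar1(phi=0.8, n=200, seed=1)  # hypothetical data
split = 180  # first 90% used for fitting
train, held_out = series[:split], series[split:]
phi_hat = fit_ar1(train)

# One-step-ahead: each prediction uses the true preceding observation.
one_step = [phi_hat * series[t - 1] for t in range(split, len(series))]

# Free-running: each prediction feeds on the previous prediction only.
free_run = []
previous = train[-1]
for _ in held_out:
    previous = phi_hat * previous
    free_run.append(previous)

one_step_mse = mse(held_out, one_step)
free_run_mse = mse(held_out, free_run)
```

The free-running forecast decays geometrically towards the series mean, so it cannot follow the later fluctuations; its error would typically be expected to exceed the one-step error, although the exact values depend on the simulated noise.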

Our purpose here is not to obtain a best fit (smallest residuals) model of the past data, which is commonly a purpose in MIR analyses, though not in that described above (Serra et al., 2012). Nor is our purpose to obtain the optimised (lowest information criterion) model. It is rather to obtain, as fast as possible, a representation of the process at work (in this case, what creates the pitch progression). So the process is represented in the model as the autoregressive lags, and in later cases lags of other predictors, together with corresponding coefficients. In some such models and often in IDyOM, models can be improved by adding derived parameters such as pitch class (recognising that pitches an octave apart, which have a frequency ratio of 1:2, bear a special relation and belong to the same pitch class). We do not pursue this here because the aim is an approach that is neutral to tonality (loosely, the use of key centres) and to the choice of tuning system: to operate both with 12-tone equal temperament (in which the piano and the orchestra are normally tuned) and in other microtonal systems where there may not even be octave relationships (Dean, 2009). Other work (Gingras et al., 2016) shows that the two approaches (IDyOM and TSA) can contribute mutually in modelling aspects of performance and reception. While the IDyOM approach, as mentioned, is primarily a local model of upcoming events in the music, though influenced by statistical “knowledge” of all the preceding events and potentially of a corpus of related music, the TSA approach constitutes solely a model of the piece as a whole, which it assumes represents a steady state single process. This means that the coefficients remain constant and the selection of predictor variables in the model remains unchanged throughout (though of course the variable values change).

As the article proceeds, we focus first on univariate time series models: models which predict a single outcome variable, regardless of how many other input variables may potentially complement the autoregressive component. For example, a pitch sequence may be modelled and simulated either on the basis solely of autoregression (as illustrated in Fig. 1) or more plausibly, on the basis also of preceding exogenous variables such as dynamics and rhythms (noting that these too are almost always highly autoregressive). The article subsequently addresses such multivariate time series analyses and simulations, which are counterparts to the multiple-viewpoint approach of IDyOM.

The application aims to provide facilities for use in live performance, in other words, to be a live algorithm. This term is used to delineate a performance framework in which running algorithms permit user interaction in three forms: a) parameter adjustment within pre-formed models; b) continuous data inflow to an analytical module, resulting in new output (cf. classics such as Cypher (Rowe, 2001) and Voyager (Lewis, 1993; 1999)); and c) the creation of new model structures on the fly, as with machine learning approaches, neural nets in general, and the present case in particular. The normal performance mode is for a keyboard improviser to play note data into BANG and simultaneously interact with its interface. The live algorithm constructed in this article (BANG) is also capable of functioning autonomously, that is, without requiring any live input whatsoever. Nor is real-time (essentially instant) computational generation required, because this involves response to very short chunks of input events, whereas BANG will model and generate larger scale musical processes.

In his influential book on interactive music systems (Rowe, 1993), Rowe uses three dimensions to provide a useful classification of interactive systems. The first separates score-driven from performance-driven systems. The second distinguishes transformative, generative or sequenced methods. And the third describes instrument and player paradigms. The first two dimensions are fairly self explanatory, while the third may require slight elaboration. The instrument approach provides an interface that interprets performer gestures; these are expected to be non-audio gestures, in that Rowe indicates that a single performer would produce what seemed to be a solo using the system. The player on the other hand tries “to construct an artificial player … [that] may vary the degree to which it follows the lead of a human partner”. In its normal usage with a single keyboard player also controlling the interface, BANG falls into the performance-driven and player categories, but hybridises the transformative and generative methods. Rowe analyses several other interesting systems, amongst which is an early form of what is now known as Voyager. In one of several interesting articles discussing and analysing this program, its author George Lewis explains its “non-hierarchical” interactive environment for improvisation (Lewis, 2000) and its embodiment of “African-American aesthetics and musical practices” (p. 33). A core of this is the concept of multidominance, which includes treating multiple rhythms, metres, melodies, timbres, tonalities as co-dominant rather than hierarchical. This is in part reflected in the structure of the software, in part in its use in performance. Using ensembles of instruments, 15 melody algorithms, and probabilistic control of most elements which is generally reset at intervals between 5 and 7 seconds, it produces “multiple parallel streams of music” (p. 34). It pays attention to input information, and may imitate, oppose or ignore. 
It uses this information in both an immediate time frame, and one smoothed or averaged over a “mid-level” duration (p. 35). Voyager is a complex and powerful system, designed to avoid “uniformity” (p. 36).

A single instance of BANG is much simpler, and largely deals in the details of longer periods of input information. But it can also produce “beats”, short repetitive rhythmic patterns like some used in electronic dance music (EDM). This occurs when the input and corresponding output sequences are short, and then used repeatedly (and sounded with appropriate MIDI instruments). It is interesting that traditionally, beats (and more so glitches) have sometimes been used while hardly metrical, in that they display little internal repetition to emphasise the overall periodic repetition of the whole beat (Kelly, 2009). In common with the ethos of BANG, these do not necessarily retain normal conventions, in this case, that of hierarchical isochronic meters. Putting this another way, strong metricality requires not only a repeating periodic structure, but also substructures whose durations are either all the same or multiples of a common duration, thus creating at least two hierarchical layers. In sum, the concern in BANG is flexible computational generative music making with relatively few stylistic constraints. The multiple strands of information it outputs show modest multidominance, in Lewis’s terminology, and this can be extended by use of multiple instances and co-improvisers.

Boden & Edmonds (2009) have elaborated on the question “what is generative art?” They provide a definitional taxonomy of the field, and I would like to indicate the intended position of the present work within it. Of the 11 categories they enumerate, BANG, the beat and note generator I describe below, belongs to the first six, centrally category 5, “G-art” (generative art) but also category 6, in which “CG-art is produced by leaving a computer program to run by itself, with minimal or zero interference from a human being”. BANG can make “CG-art” but it does so by use of either pre-stored human input, or random input. In Section 6, we consider the respects in which BANG provides computational creativity so far. BANG seems to be the first system to significantly exploit multivariate TSA for live generative music-making.

The digital creativity design task undertaken here is now apparent: it requires a) an input module that can receive a musical stream from a keyboard or other MIDI-note-based performer; b) a module which can analyse appropriate large segments of this stream, creating a time series model, and then use the time series model by simulation and potentially further transformation to create a new musical stream; c) an interface for user interaction in controlling parameters of the creation and/or realisation of that new stream; and finally d) an output module which can create the sounded musical counterpart to the resultant musical information. Note that the MIDI performer may play silently (in effect solely offer source materials) or audibly (i.e., directly participate in the musical dialogue with BANG). Besides a–d, BANG also offers: e) random sequence generation facilities (which can dispense with the live performer); f) preformed time series models which can themselves be used without further pitch sequence input, but which are based on ecologically valid models observed in performance; and g) sequence stores (memory), so that preformed or previously performed sequences can be used as the basis for live generation. The random sequences may be obtained in MAX or by using any of the wide range of statistical distributions available in R. Classic music generative systems operating live (or, less frequently, in real time), like Cypher and Voyager, share a similar general framework, but the unusual feature in BANG is the use of time series modelling.

There is no single computational software platform which combines all our required features, thus two platforms are used, each highly developed towards efficient fulfilment of the functions it serves. R, the open access statistical platform with probably the largest library of coded statistical and analytical tools available, serves the time series analysis and simulation part. No other platform can compete with it in this respect: Lisp (in which IDyOM was originally written) and C are poorly supported with statistical code, and Python only moderately in comparison with R; Matlab is well supported but not comparably. MAX/MSP serves the other three functions, and is well developed for this. It is object-oriented and has a comprehensible graphic user interface, and is commercially maintained and widely used. Alternatively Pd, its open source counterpart, could be used, and for those preferring line-coding, SuperCollider.

Thus communication between MAX and R is required, and this is implemented using simple computer sockets. A socket is an address-specified connection between two computational threads, which may be on a single machine, or distributed on several. We use a TCP socket which is capable of stream delivery. Musical events are received by MAX, and stored as indexed collections of pitch, key velocity, note duration, and event inter-onset time in a MAX “coll” object. A coll is simply a collection of data with indices that permit the separate storage and retrieval of individual items in the collection. The coll object corresponding to a particular musical stream is continuously replenished, and initially a 200 event sequence is chosen as its capacity. At any time, the data in this object can be sent by socket for analysis on an R “server” present on the same (or another) computer. In R, there are several alternative organisations of the univariate analyses available. We will describe the simplest, most readily comprehensible organisation, and then summarise some of the more complex forms already built, or potentially useful.
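The bounded, continuously replenished event store described above can be sketched as follows. This is a hypothetical stand-in for the MAX coll, written in Python purely for illustration; the class name, the field order, and the text wire format are assumptions and not the format BANG actually sends over its socket.

```python
from collections import deque

CAPACITY = 200  # events retained per stream, as stated in the text


class EventStore:
    """Hypothetical stand-in for the coll: keeps only the most recent events."""

    def __init__(self, capacity=CAPACITY):
        # A deque with maxlen silently discards the oldest item when full.
        self.events = deque(maxlen=capacity)

    def add(self, pitch, velocity, duration_ms, ioi_ms):
        """Store one note event as a (pitch, velocity, duration, IOI) tuple."""
        self.events.append((pitch, velocity, duration_ms, ioi_ms))

    def streams(self):
        """Split the stored events into four parallel parameter streams."""
        names = ("pitch", "velocity", "duration", "ioi")
        return {
            name: [event[i] for event in self.events]
            for i, name in enumerate(names)
        }

    def to_message(self):
        """Serialise all events as one newline-terminated line of text.

        Assumed format: events separated by ';', fields separated by ','.
        """
        body = ";".join(",".join(str(v) for v in event) for event in self.events)
        return body + "\n"


store = EventStore()
for i in range(250):  # 250 hypothetical events; only the last 200 are kept
    store.add(pitch=20 + i % 88, velocity=64, duration_ms=250, ioi_ms=300)
message = store.to_message()
```

A newline-terminated message is one simple way of marking where a transmission ends on a stream-oriented socket, which delivers bytes without inherent message boundaries.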

In the simplest implementation, the four 200-event streams of musical information (pitch, velocity, note duration, and inter-onset interval, IOI) are each modelled separately, and four independent model simulations are returned to MAX and reassembled into a single event stream combining the four components (this is somewhat similar to the mode of operation of the Continuator). These models are thus purely autoregressive: no additional factors are considered. Fig. 1 already illustrates the modelling of the pitch sequence of a free improvisation, which happens to be post-tonal, and non-metrical. The piece was performed on a Yamaha Grand Piano with MIDI-attachment (the Disklavier).

The models are obtained by selection amongst candidates using the R function auto.arima within Hyndman’s forecast package (Hyndman, 2011). Automated stepwise model selection (chosen for speed) is based on minimising the Bayesian Information Criterion (BIC), and not simply on maximising the degree of fit. The use of information criteria such as BIC has been well reviewed (Lewandowsky & Farrell, 2011). This information criterion penalises strongly for increasing model complexity, and thus helps to avoid overfitting, which otherwise tends to reduce the degree to which a model can fit new or related data sets. Model selection is based on the search of a space of permitted models: here autoregressive terms up to order 5 are permitted (because such orders are common when irregular rhythms, determined by inter-onset interval (IOI), are performed, but higher orders have not been detected; Launay et al., 2013). Lower orders are often selected, and in the case of rhythms where the pattern is fundamentally repetitive and isochronic (based on units of roughly constant length) order 1 may suffice (Wing et al., 2014), though this seems not to have been exhaustively investigated.
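The principle of choosing an autoregressive order by minimising BIC can be sketched as below. This is not the procedure of auto.arima, which uses likelihood-based estimation and a stepwise search; as a simplifying assumption the sketch fits each order from the autocovariances by the Levinson-Durbin recursion and scores it with BIC computed as n·ln(σ²) + k·ln(n). All function names are hypothetical, and a non-degenerate series (non-zero variance) is assumed.

```python
import math


def autocovariances(series, max_lag):
    """Sample autocovariances of `series` for lags 0..max_lag."""
    n = len(series)
    mean = sum(series) / n
    centred = [v - mean for v in series]
    return [
        sum(centred[t] * centred[t - lag] for t in range(lag, n)) / n
        for lag in range(max_lag + 1)
    ]


def levinson_durbin(r, max_order):
    """Return [(coefficients, innovation variance)] for AR orders 0..max_order."""
    phi = []
    variance = r[0]
    fits = [(list(phi), variance)]
    for k in range(1, max_order + 1):
        acc = r[k] - sum(phi[j] * r[k - 1 - j] for j in range(k - 1))
        reflection = acc / variance
        # Update the lower-order coefficients, then append the new one.
        phi = [phi[j] - reflection * phi[k - 2 - j] for j in range(k - 1)]
        phi.append(reflection)
        variance *= 1.0 - reflection ** 2
        fits.append((list(phi), variance))
    return fits


def select_order(r, n, max_order=5):
    """Pick the AR order (0..max_order) with the lowest BIC."""
    fits = levinson_durbin(r, max_order)
    scores = [
        n * math.log(variance) + order * math.log(n)
        for order, (_, variance) in enumerate(fits)
    ]
    best = min(range(len(scores)), key=scores.__getitem__)
    return best, fits[best][0], scores


# Exact autocovariances of a hypothetical AR(1) process with coefficient 0.5.
r_example = [0.5 ** lag for lag in range(6)]
best_order, coefficients, bic_scores = select_order(r_example, n=200)
```

For these idealised autocovariances the higher-order terms add nothing to the fit, so the complexity penalty leads the sketch to select order 1. With real data, `autocovariances(series, 5)` would supply `r`.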

We choose pure AR models for two reasons. One, that alternative moving average components can be reformulated as AR terms, and quite often introduce issues of model identifiability. And two, that our simplifying decision reduces the search space and hence the time taken for model selection: BANG is used in live performance, and so its model is made available very soon after the performed data arrives. One step of differencing, which may be required to make the input data series statistically stationary, is permitted. A differenced series is simply one whose values correspond to the difference between successive members of the original series, and which is thus one member shorter. As part of inducing stationarity, differencing also removes long term (low frequency) patterns, somewhat akin to high pass filtering (indeed a highly restricted form of non-ideal high pass filter can achieve precise differencing). Stationarity basically implies that the autocorrelations between events any fixed number of steps apart are constant across the series, which also has a constant mean and variance (technical details of TSA are elaborated in depth in Hamilton (1994)). In many circumstances, including performed series which show a gradual change in a fixed direction (e.g., generally moving up the keyboard or gradually getting louder), such first differencing is necessary. The resultant model of the differenced variable can still provide fits for the undifferenced data at its original length (as in Fig. 1). The models are not allowed to include drift (that is, trend), because if it is originally present, differencing normally suffices to remove it from the stream being modelled.
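First differencing and its inversion can be illustrated in a few lines. The sketch below uses hypothetical helper names and a made-up rising pitch series; it shows that the differenced series is one member shorter and that the original is recoverable from its first value plus the differences.

```python
def difference(series):
    """First differences: each value minus its predecessor (one item shorter)."""
    return [b - a for a, b in zip(series, series[1:])]


def undifference(first_value, differences):
    """Rebuild the original series by cumulatively summing the differences."""
    rebuilt = [first_value]
    for d in differences:
        rebuilt.append(rebuilt[-1] + d)
    return rebuilt


# Hypothetical pitches drifting steadily up the keyboard.
pitches = [60, 62, 64, 66, 68]
steps = difference(pitches)
restored = undifference(pitches[0], steps)
```

The rising series has a changing level, whereas its differences are constant, which is the sense in which differencing removes a gradual trend.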

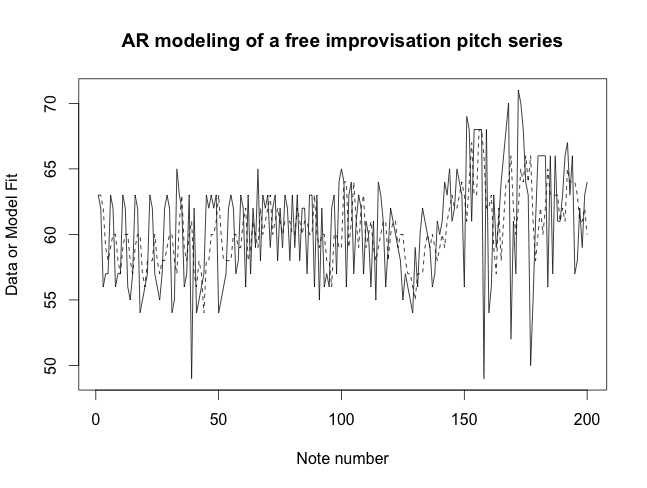

Once R has defined the selected model, it can be used to simulate a new data series. If the model has been derived from a differenced input series, then it is reconstituted into an output which also corresponds to an undifferenced data series, like the input. So the model creates (by simulation) an output which itself contains the same autoregressive features as the model itself: this is a key part of the structural continuity (with concomitant diversity and change) that BANG creates. At this point there is a choice for the user amongst a range of simulation options. The default here is to set the mean and s.d. of the simulation to those of the input set (but the user may change this). The range of values obtained from the simulation may still breach the limits of the usable musical parameter values: 20–108 for MIDI pitch, covering the conventional piano keyboard range and the majority of the audible range as used in music; 1–127 for MIDI velocity, though velocities below about 20 are commonly virtually inaudible; durations from 75–2000 msec; and IOIs from either 5–30 msec or 75–2000 msec (more than about 13 notes or chords per second is generally too much of an auditory blur to be very useful, and at say 20 notes/second may sound more like a single “brrr”). IOIs of 5–30 msec are perceived as simultaneous, i.e., forming a chord rather than a single note (Dean et al., 2014a; Pressing, 1987), so simulation IOI values between 30 and 75 msec are shifted to 5 msec. Normally I choose simply to shift the simulated values overall into the appropriate range, and only rescale them if they have a greater range than specified. Thus simulation streams which are narrow in range, responding often to performance elements which are equally narrow, are normally maintained as narrow (as in Fig. 1), and vice versa. Any of these choices might be varied by the user: for example, the simulation s.d. could be reset to an arbitrary parameter or to one calculated from any aspect of the data passing (or which has passed since startup) through the system. Fig. 2 illustrates a sequence of three successive pitch simulations from a single model, that of Fig. 1, to reveal that diversity is generated, even without further transformations.
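The simulation and post-processing steps just described can be sketched as follows. This is a hedged Python illustration rather than BANG's R implementation: the function names are hypothetical, the AR coefficients would come from the fitted model, and the rule of shifting by the smallest amount that brings the values into range is an assumption, since only the general policy (shift first, rescale only when the range is too wide) is specified above.

```python
import random
import statistics


def simulate_ar(coeffs, n, noise_sd=1.0, seed=0):
    """Simulate n values of a zero-mean AR process; coeffs[0] is the lag-1 term."""
    rng = random.Random(seed)
    history = [0.0] * len(coeffs)          # oldest value first
    out = []
    for _ in range(n):
        value = sum(c * h for c, h in zip(coeffs, reversed(history)))
        value += rng.gauss(0.0, noise_sd)
        out.append(value)
        history = history[1:] + [value]
    return out


def match_moments(values, target_mean, target_sd):
    """Give the simulation the mean and s.d. of the input (the stated default)."""
    m, s = statistics.mean(values), statistics.stdev(values)
    if s == 0:
        return [target_mean] * len(values)
    return [target_mean + (v - m) * target_sd / s for v in values]


def fit_to_range(values, low, high):
    """Shift values into [low, high]; rescale only if their range is too wide."""
    vmin, vmax = min(values), max(values)
    if vmax - vmin > high - low:
        scale = (high - low) / (vmax - vmin)
        return [low + (v - vmin) * scale for v in values]
    shift = low - vmin if vmin < low else high - vmax if vmax > high else 0
    return [v + shift for v in values]


def snap_ioi(ioi_ms):
    """IOIs strictly between 30 and 75 msec become 5 msec (heard as a chord)."""
    return 5.0 if 30 < ioi_ms < 75 else ioi_ms


# Hypothetical AR(2) pitch simulation, brought into the 20-108 MIDI pitch range.
sim = match_moments(simulate_ar([0.6, 0.2], 200), target_mean=64, target_sd=9)
pitches = [round(p) for p in fit_to_range(sim, 20, 108)]
```

Because `fit_to_range` rescales only when necessary, a narrow simulated stream stays narrow, in keeping with the behaviour described above.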

The model of the input pitch series from Fig. 1 is shown here again as the solid line in the top graph. The remaining three lines are successive simulations from the model, illustrating the generation of diversity in comparison with the input: both forms of mirroring and profile changes across segments are apparent.

Modelling note sequence without regard for the other parameters of the stream, or of duration without regard for IOI, may contribute towards a form of multidominance (as delineated above) but is a limited approach if what is sought is model accuracy which permits imitation and transformation of the overall process in play. On the other hand, as found with the approach just described, if what is sought is a model from which we can generate diversity by simulation while maintaining some pattern similarities with the input material, then the univariate approach has value. Given this, I also developed a multivariate approach within BANG. As noted recently, continuous-valued vector grammars have hardly, if at all, been applied to music (Rohrmeier et al., 2015). Vector autoregression with continuous values, which we have used extensively within models of perception of musical affect, constitutes the TSA counterpart to such vector grammars, and so it is interesting and novel to apply it here. The temporal variables are continuous in performed value, and pitch and velocity are treated as continuous variables and then quantised.

Therefore, instead of modelling the four musical parameters independently and recombining the results, as above, joint models of all four are made by Vector AutoRegression (VAR), using the MTS (multivariate time series) R package (Tsay, 2013). MTS can treat each musical stream as potentially a predictor of each other (an “endogenous” variable in statistical terminology): prediction can be bidirectional, but model selection will indicate which directions of influence are important (showing significant coefficients) and which not. It is common in VAR to consider issues of stationarity, as described above for AR. If one of the endogenous (to be modelled) variables is not stationary, then all variables are brought to stationarity by the same mechanism prior to modelling: usually by differencing. But there is a major debate as to whether this is necessary or even desirable within VAR (e.g., Sims, 1988), and since model output quality can be assessed, it is not a required step and it is not included here. The four musical parameters pitch, velocity, duration and ioi are all endogenous variables in a VAR, but just as there may be ARX models, so VARX models may use eXternal predictors: for example, a desired overall loudness profile, or perceptual affect profile, could be represented as a variable that impacts on a VARX output simulation.

The first step of VAR analysis in R is to determine the BIC-optimised regressive order, so that only variable lags up to that order are included in the joint VAR analysis to follow. Most commonly the BIC-selected order is 1, and occasionally it is 2. Given the desired constraints on analysis time within our algorithm, we then seek simply the removal of any uniformly unnecessary higher orders of the predictors, all of which remain endogenous (that is potentially mutually influential). It is additionally feasible in such modelling, having determined for example that variable X influences Y, but vice versa is not the case, to define (by providing a constraint) that the coefficient on Y for the contained model of X is 0, thereby effectively removing one predictor parameter, which no longer has to be estimated. This was considered a priori not a worthwhile investment of computational time in balancing considerations of minimising BIC vs. saving CPU time, and subsequent observations supported this.

The VAR analysis in Tsay’s MTS is the step which consumes much time, and not the subsequent model simulation. For an order 1 VAR (that is, one with one autoregressive lag of each variable), there are 20 parameters to be determined in the MTS function used (VARMA); for order 2 there are 36 parameters, and correspondingly the modelling commonly takes about twice as long. As with AR above, we do not use MA components in VAR (i.e., the corresponding parameters in the models are set to 0). The highest order initially considered was 4, to be coherent with the chosen maximum of 5 for the AR models, and given that VAR virtually always selects lower orders. A VAR of order 4 has 68 parameters, and commonly took almost ten times as long as order 1. A much faster version of the VARMA function is available within MTS, VARMACpp (which in places directly calls C code instead of using R code). We arbitrarily determined that for live performance a model and its simulation had to be returned to MAX within 30 seconds of request and hence constrained the order of the VAR to be 3 or lower, and used VARMACpp. Although arbitrary, 30 seconds is reasonable given that most musical phrases are complete within such a time period. The time required can be reduced further, at the cost of a slight loss of flexibility in the models, by using the basic multivariate autoregressive Yule-Walker function in R core’s stats package (ar.yw) instead of VARMACpp from MTS. Then simulation (which is fast) from the resultant model can still be done with MTS functions.
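The parameter counts quoted above are consistent with one coefficient per variable pair per lag plus one constant per variable. The short Python sketch below makes the arithmetic explicit. The function name is hypothetical, and counting a constant for each variable is an assumption inferred from the quoted totals rather than from the MTS documentation.

```python
def var_parameter_count(k, p, include_constant=True):
    """Number of mean-equation parameters in a VAR(p) with k variables.

    Each of the p lags contributes a k-by-k coefficient matrix; the
    constants add a further k parameters (an assumption, see lead-in).
    """
    return k * k * p + (k if include_constant else 0)


# Four musical parameters: pitch, velocity, duration and IOI.
counts = {p: var_parameter_count(4, p) for p in (1, 2, 3, 4)}
```

With four variables this gives 20, 36, 52 and 68 parameters for orders 1 to 4, so the chosen ceiling of order 3 corresponds to 52 parameters.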

As with the univariate AR model simulations, a range of options apply before and during VARMA simulation: many can be applied within the covariance matrix upon which the simulation operates. As with AR, the resultant simulation again reflects the autoregressive but now also the inter-variable relationships of the model derived from the input, but transforms them further.
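To show how a VAR simulation carries inter-variable relationships as well as autoregression, here is a minimal Python sketch of an order 1 simulation. It does not reproduce the MTS simulation functions: the function name, the coefficient values and the two-variable example are hypothetical, and the innovations are drawn independently for each variable (a diagonal covariance matrix), which is a simplifying assumption.

```python
import random


def simulate_var1(coef, const, noise_sd, n, start, seed=0):
    """Simulate n steps of x_t = const + coef @ x_{t-1} + e_t.

    coef[i][j] is the influence of variable j at lag 1 on variable i.
    Innovations are independent Gaussians (assumed diagonal covariance).
    """
    rng = random.Random(seed)
    k = len(const)
    state = list(start)
    out = []
    for _ in range(n):
        # The comprehension reads the previous state throughout, so all
        # variables are updated jointly from the same lagged values.
        state = [
            const[i]
            + sum(coef[i][j] * state[j] for j in range(k))
            + rng.gauss(0.0, noise_sd[i])
            for i in range(k)
        ]
        out.append(state)
    return out


# Hypothetical duration/IOI pair (msec) with mutual influence at lag 1.
coef = [[0.5, 0.2],
        [0.3, 0.4]]
series = simulate_var1(coef, const=[90.0, 120.0], noise_sd=[20.0, 30.0],
                       n=200, start=[300.0, 400.0])
```

The off-diagonal entries of `coef` are what distinguish this from two separate AR(1) simulations: setting them to 0 would decouple duration from IOI.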

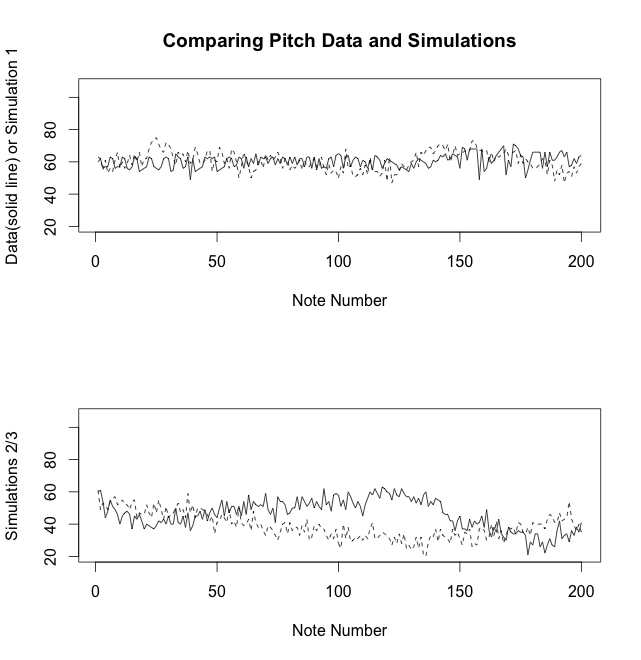

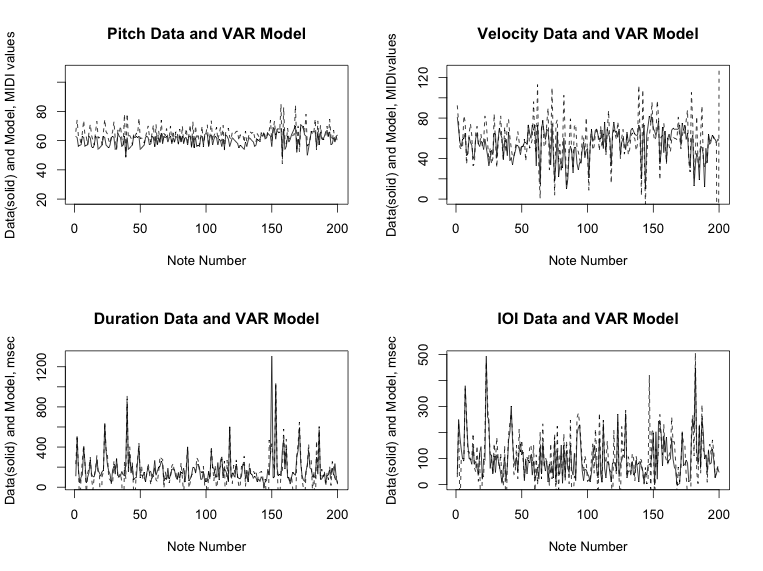

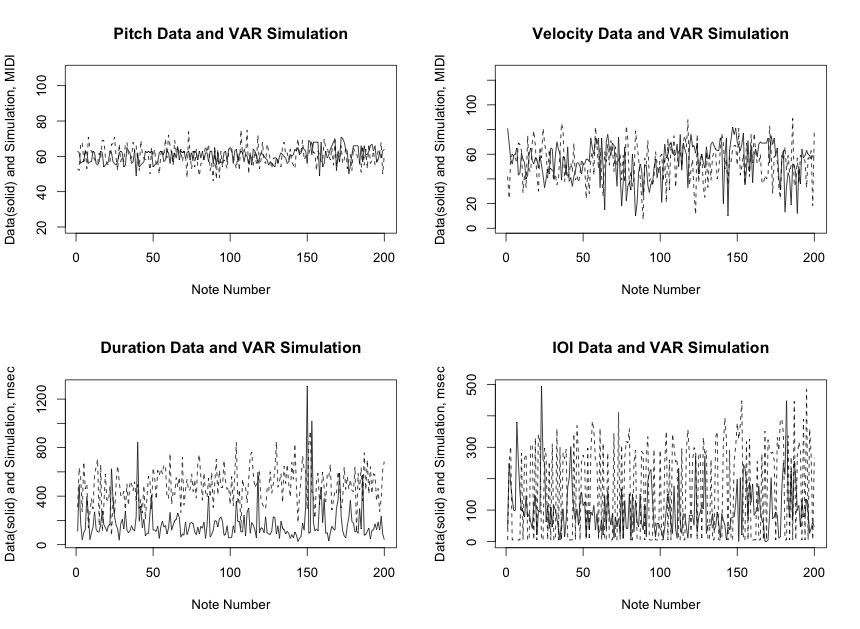

For illustration of the modelling, Fig. 3 shows a joint VAR model of all four variables (p,v,d,ioi) within the improvisation performance analysed already, and compares each with the original data. All variables are highly autoregressive, as expected. The overall model preserves the patterns of all four features, and provides a good model of duration and ioi, which turn out on inspection of the model to be mutually influential. It provides a fair but poorer model of pitch than the AR model described above (squared residuals are 0.8% of squared data values), and a fair model of velocity. We do not require that such a model will necessarily be an improvement in fit for any individual data component over an AR model, but rather that it will more fairly represent the joint and mutual processes of the four parameters, and hence be useful for simulation, which duly follows, with some analogous options to those described for the univariate simulations. Note again that by design the R script does not permit differencing prior to the VAR modelling, and so this can sometimes limit precision in any case.

Here all four components of the input 200 event time series are jointly analysed, as described in the text. Each subsection of the graph compares the original input (solid line) with the model (dotted). It is apparent that the temporal values are better modelled than the pitch and key velocity (both shown as MIDI numbers, where velocity can run from 0–127). Note that correspondingly the model was not derived for maximum precision of fit, and differencing (found necessary for the AR model of Fig. 1) was not permitted.

Fig. 4 shows an example of a VAR simulation, in comparison with the original data input streams. In this particular case, the divergence of the simulation series from those of the data is clear, but particularly for duration and IOI.

The VAR model of Fig. 3 is used to generate a new VAR set of series by simulation. Each panel shows the input data (solid line) and the simulated output (dotted), again showing the generation of diversity, and illustrating that even the better modelled temporal variables (duration and IOI) can readily diversify during the simulation.

Once the R simulation has been obtained, a wide range of further transformations may be effected, as coded or interactively chosen by the user, and this is done most conveniently in the MAX patch. An exhaustive discussion is not needed here, but some key features can be brought out. Perhaps the most obvious possibilities are choosing silent periods in the operation of BANG (no output), and controlling the range over which its parameters function within the MAX patch. For example, a performer may choose to have considerable gaps between successive realisations of a generated 200 event series (which is set by default to recycle) and/or between the end of a realisation and the initiation of a new model and its realisation. User interface objects permit (or in some cases could permit) such control, on a continuous-valued basis, which may be randomised. Similarly, there are control parameters for the range of velocities sounded, or the range of IOIs. Preformed and partially randomised velocity profiles, operating on the simulation output, can be valuable (and have been used in the previous Serial Generator algorithm (Dean, 2014)).

There are two autonomous generators within BANG (one in MAX, based on randomised generation; and one in R, based on AR simulation using preformed models and coefficients, or using random outputs from a range of statistical distributions). Within limits, the (V)AR simulation values can themselves be randomised, the limits being the requirement of the simulation algorithm that the model in question be stationary.

Memory functions are also contained within BANG. The MAX patch contains a store of pre-formed MIDI-performances, which can be played into the system (audibly or silently), and can then form part of the current input storage in a “coll” object. In turn, the input coll is cumulated in an “input memory” coll, which currently accumulates up to 2000 events. Similarly, the data from the coll in which TSA-generated event series are temporarily stored for realisation are also accumulated in a “generated memory” coll of up to 2000 events. These memory storages could be used at any time for any of BANG’s purposes, and it also is useful to be able to shuffle them. For example, playback of an event series obtained by hybridising two different stored series provides a new mode of variation, which may be an interesting and unpredictable component in an improvisation.
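A capped memory store with shuffling and hybridisation can be sketched in Python as below. This is an illustration only, not the MAX “coll” implementation: the class and function names are hypothetical, discarding the oldest events once 2000 are held is an assumption (the behaviour at the limit is not specified above), and taking pitch and velocity from one series and timing from the other is just one of many possible ways to hybridise.

```python
import random
from collections import deque, namedtuple

Event = namedtuple("Event", "pitch velocity duration ioi")


class EventMemory:
    """Bounded store of events; oldest events drop out when full (assumed)."""

    def __init__(self, capacity=2000):
        self.events = deque(maxlen=capacity)

    def extend(self, events):
        self.events.extend(events)

    def shuffled(self, seed=None):
        """Return the stored events in random order, leaving the store intact."""
        items = list(self.events)
        random.Random(seed).shuffle(items)
        return items


def hybridise(series_a, series_b):
    """Pitch and velocity from series_a, duration and IOI from series_b."""
    return [Event(a.pitch, a.velocity, b.duration, b.ioi)
            for a, b in zip(series_a, series_b)]


# Hypothetical use: combine performed pitches with generated timing.
played = [Event(60, 80, 200, 250), Event(64, 70, 180, 240)]
generated = [Event(71, 40, 500, 900), Event(45, 110, 90, 100)]
hybrid = hybridise(played, generated)
```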

A brief comparison of the computational memory functions with those of humans may be of interest. Human memory is conventionally divided into different time ranges, such as working memory, short term memory, and long term memory (see Snyder (2000) for an introduction to memory studies in the context of music). The first two components are considered to occupy up to about 30 seconds, and this correlates well with the observation that assimilation of the statistical impact of pitch sequences in a harmonic context takes at least 20 seconds (Bailes et al., 2013). Long term memory can remain for any length of time, but of course is selective and decaying (Lewandowsky & Farrell, 2011). Recent studies in our lab (Herff et al., submitted for publication) contribute to an increasing body of work that suggests, surprisingly, that memory for musical melodies can be prolonged and even perhaps regenerative. We suggest that regeneration may be possible because of a representation which involves a co-relation between components such as pitch and rhythm such that memory of two parts of this three part system (two components, one relation) can regenerate the third. Even after up to 195 intervening melodies, we found unchanged recognition of an earlier presented melody, and others have presented related observations (Schellenberg & Habashi, 2015) over shorter delays. Thus 2000 events in our computational coll is an appropriately comparable value (corresponding roughly to 195 10-note melodies), though computer memory need not be restricted in this way.

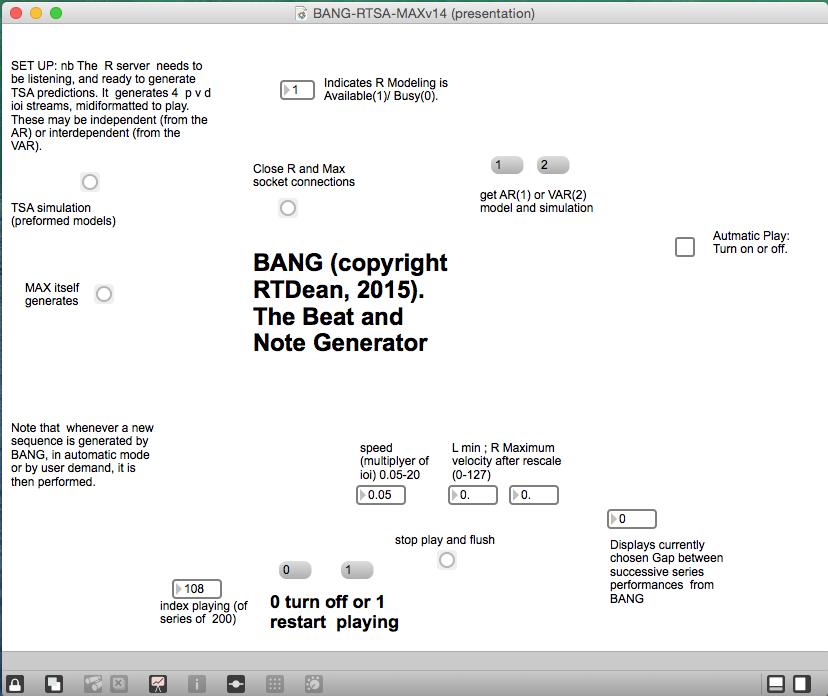

Given all the controls described, it is obvious that BANG can be provided with start-up choices automatically (randomised, or pre-selected in systematic groups using the MAX preset object) such that it will run without further performer intervention, as an automaton. The duration of the run may be open-ended or predetermined. The MAX user interface (which displays only some of the switches and controls available to the user) is illustrated in Fig. 5.

The interface is designed for simplicity, and a user may choose only to activate the automatic play function, or alternatively may delve further, depending on whether they are actively performing on a MIDI-instrument themselves. Only a few of the controls available to a performer are directly visible at this level.

In summary, BANG is a computational music generator for use in live performance: a live algorithm. It operates primarily on input performed (or secondarily on preformed or randomly generated) musical data, and uses time series models of various levels of complexity for responsive or autonomous music generation, with a range of memory facilities. We structure the discussion by considering first the creative/generative uses of BANG and their cognitive aspects, and then delving further into some of the interesting specifics of its design and future development and assessment.

Edmonds (Boden & Edmonds, 2009), in a personal section of that paper, makes an interesting distinction between user “interaction”, and “influence”. In interaction, the human agent (in our case, performer) can sense almost immediately the impact of their action upon the system, and hence is likely to detect its origin. In a situation of influence, the response of the system is much later, perhaps at an unpredictable time, but certainly less likely to be detected as a response to a particular action either by performer or audience. BANG provides both interaction and influence, and both can apply to the purely generative part of the system (the TSA simulation and modelling) and to the use of the memory stores it contains. BANG is thus an improvisation prosthesis, a tool of creative hyperimprovisation (Dean, 2003). It can generate “beats” in the sense of EDM, and equally, beats and notes within or without flexible metrical hierarchies, given that as in most music making, these involve fluctuating rather than absolutely isochronic note inter-onset intervals.

Is BANG already a contribution to computational creativity, granted that it can be developed further at the highest level (for example, the introduction of evolutionary processes) or at the lower levels (the nature of the musical material processed and generated)? Colton & Wiggins (2012) offer a useful standpoint on what computational creativity research is: “The philosophy, science and engineering of computational systems which, by taking on particular responsibilities, exhibit behaviours that unbiased observers would deem to be creative”. The concept of “unbiased observer” is delineated sufficiently to make clear that such a person, given all the usual difficulties, is able to accept that an unfamiliar or even new style of work may well be creative, and that a cumulation of such works can suggest their own criteria and extend previous ones. One might choose to put greater emphasis on the possible virtue of computational creativity being able to emerge with outputs that are well outside current human creative norms or even possibilities, and Colton and Wiggins (2012) include this. BANG may well fit within this definition, pending scientific test (discussed in the final sub-section).

BANG hands over significant “responsibilities” to the computer, and there is essentially no “curation” (that is, selection by the user as discussed by Colton & Wiggins (2012)) amongst the outputs, required (or offered). Indeed, in an improvisation with performer and BANG, the MIDI-instrument performer has all the facilities of retrospective integration (Bailes et al., 2013) and even kinds of effective “erasure” (Smith & Dean, 1997) available to them according to what they subsequently play, given that music is temporal and the impacts of previous events depend in part on future ones. In a very limited sense, BANG uses case-based reasoning (like MuzaCazUza (Ribeiro et al., 2001)) because each 200-event time series that it analyses, and from which it creates an output by simulating the model, can be seen as a case. BANG has been used in conjunction with the Serial Collaborator, a real-time system developed to create and manipulate serial melodies and harmonies (Dean, 2014).

There are a range of performance modes available with BANG, some mentioned already. The normal usage involves one MIDI-performer who also initiates and controls aspects of BANG operations, and provides played series to it. There may be multiple BANG instances available, and the flow of material to them can be separated, as can their outputs. It is interesting to use two different tuning systems simultaneously with BANG having access to two different player modules (MIDI- or VST-plugin driven). Equally there can be a second performer who operates BANG, while alternatively BANG may function entirely autonomously once started.

Many current computational creativity systems are concerned with generation of artefacts which retain conventions of prior systems, be it tonality in music, or syntacticality in text. But this is not a necessary feature, and BANG does not require it. This is because it is conceived currently as a post-tonal and microtonal system: using a performer’s input, or stored memories of them, it generates structurally related material. If the inputs are highly tonal, the outputs will diverge, but still show signs of tonalness; and vice versa. This flexibility is characteristic of much note-centred music since about 1950 in particular. Thus a number of instances of BANG can cooperate, and furthermore, the memory components of BANG would readily allow multiple MIDI-players in a single instance to use the generated material simultaneously if required. In this way harmony would be generated more extensively than it is presently with a single instance of BANG in play (it generates chords as a minority of events). Generating conventional tonal harmonies is a large scale and ongoing problem (Whorley et al., 2013), but generating post-tonal harmony is in some senses simpler, and there are clear paths for its further development using VAR in which time series of chords are modelled as vectors (cf. Tymoczko, 2011).

A model of the statistical patterns of the pitch, rhythm, and intensity components of a piece of music (be it a model from IDyOM or from TSA) may constitute a model of some of the cognitive processes it entails. For example, IDyOM can be used to model certain expectancies, which in turn may influence listeners' and performers' cognitive responses to the music in other respects. Time series analysis, while couched in quite different terms, also models expected notes given a preceding set, and hence has some equivalent components. Both approaches may thus be seen to inform music generation by means of process models of preceding music, notably whole pieces (or 200-note series as presently) and corpora of such pieces. The TSA generative mechanism of BANG is a rather different complement to those which use knowledge of cognition of individual musical components to dictate the nature and frequency of their occurrence in new pieces (Brown et al., 2015) or those which take temporal streams of (neuro)physiological data and sonify them into musical elements (Daly et al., 2015). TSA can also provide models of musical affect based on input acoustic and musical variables and these models could in the future be transformed in various ways as potential music generators. In addition, BANG provides “memory” stores, which can be used in a manner akin to genuine cognitive memory stores, for intermingling, transformation, and comparison.

It is appropriate to discuss further the contrasts between BANG and Pachet’s Continuator (Pachet, 2003). The Continuator (2003) is based on a variable order Markov chain analysis, which predominantly uses the pitch sequence. It is focused on real-time operation on short chunks of input MIDI sequence. These are “systematically segmented” into phrases “using a variable temporal threshold (typically about 250msecs)”. It seems that this “threshold” concerns gaps in the input sequence, but this is not detailed. The Continuator has facilities for “biasing” the output, for example based on changing harmony: this supports one of its intended uses, in conventional jazz harmonic contexts. Although it determines note onset and offset times, the original description of the Continuator emphasises solely note duration.

Simplification functions are provided so that when an input note sequence (within the quantised, finite pitch alphabet) is not found in the database of previous chains, a compromise solution can be returned. An example given considers a pattern in semitones, so that the sequence C/D/E/G is distinct from C/D/Eb/G. Then it notes that if the sequence C/D/Eb was not found in the current database, so that there was no continuation solution, the sequence might instead be considered in groups of three semitones starting on C. Thus C/C#/D would be coded 1, while Eb/E/F would be coded 2, and F#/G/G# coded 3. Then both opening three note patterns (C/D/E, and C/D/Eb) become 1, 1, 2, and 3 could be offered as a solution continuation for either. This is an example of a “reduction function” in Continuator, and it seems that duration and velocity measures of an input are used primarily in such reduction functions rather than being jointly modelled as in our VAR approach.
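The reduction example just described can be reproduced with a few lines of Python. This is only an illustration of the worked example in the text, not code from the Continuator; the function name and the note-name table are hypothetical.

```python
# Semitone offsets above C for the note names used in the example.
NOTE_TO_SEMITONE = {
    "C": 0, "C#": 1, "D": 2,
    "Eb": 3, "E": 4, "F": 5,
    "F#": 6, "G": 7, "G#": 8,
}


def reduce_to_regions(notes, width=3):
    """Code each note by the group of `width` semitones (from C) it falls in."""
    return [NOTE_TO_SEMITONE[n] // width + 1 for n in notes]


major_opening = reduce_to_regions(["C", "D", "E"])
minor_opening = reduce_to_regions(["C", "D", "Eb"])
continuation = reduce_to_regions(["G"])
```

Both openings reduce to the same code sequence, so a continuation learned for one (here region 3, containing G) can be offered for the other.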

In the case of output rhythm, a Continuator user has three choices: “natural rhythm” (that “encountered during the learning phase” when the sequence chosen from the database was input by a performer); “linear rhythm” (streams of quavers (eighth-notes)); or “input rhythm”, the rhythm of the input but possibly warped in length. An emphasis on fixed metrical contexts is included by allowing an approach in which the input is segmented according to a specified metre, which can then ensure the output also segments in accord with it. These features form a major contrast with BANG, which is agnostic about rhythmic and velocity features, and in the VAR module, allows them to be treated quite equally. Continuator adapts to features of the ongoing environment, for example loudness or harmonic field, by use of “fitness functions”. An example is given for loudness, showing that if the input is soft, a bias can be introduced into the output such that it tends to match. The author finds that “the system generates musical material which is both stylistically consistent [with the performed context], and sensitive to the input” when bias values are intermediate. This is analogous to the idea of an eXternal regressor series being used within BANG, in ARX or VARX (see below). Broadly, because of the emphasis on using Continuator with children, students and specifically in jazz, there is a tendency for the descriptions of it to emphasise normative processes, what Rowe compared with “regression to the mean” (Rowe, 2001), but it is clear that these are not necessary features of its modes of operation.

Later work from Pachet and colleagues, notably a second patent, published in 2013 (Pachet & Roy, 2013), continues to turn more towards the use of constraints upon the Markovian sequences, but it seems that the computational complexity of a hierarchical or joint modelling system, treating pitch, velocity, note duration and IOI equally has not yet been fulfilled, and the discussion still emphasises parallel generation of different streams, such as pitch, velocity, and duration. The main systems illustrated in the 2013 patent emphasise melody generation for conventional jazz with “a coherent harmonic structure (a sequence of chords)” (p. 9). Often a (metrical) beat generator that has fixed beat lengths is used, and it decides simply whether the output melody for a beat should be crotchets (quarter notes) or quavers (eighth notes) and “substantially constant within one beat” (p. 8) (“Detailed Description” section of the Patent). In its final paragraph, the patent points towards allowing “the elements of the sequence to have variation in more than one of their properties” (p. 18), but illustrates this by suggesting parallel Continuator instances. In contrast, BANG uses joint modelling by virtue of its equanimity about its post-tonal and potentially non-metrical outputs, and is possibly more amenable to the generation of short term large contrasts (sudden changes in dynamic or harmony) that a post-tonal free improviser such as the author might want, rather than a “regression towards the mean”. The Continuator is used as a learning tool, and Pachet’s successor constraint-based projects (for example, Virtuoso) are sometimes aiming for the other extreme of performer/improviser expertise.

The issue of the irregular spacing of the events is of interest. Unlike Markov chains, TSA normally operates on the assumption (whether or not justified by the data) that what is being modelled is regularly spaced in time. When it is not, as here, the time gap is most often simply used as an additional time series (our IOI series) or vector member. But alternatively, so called variogram techniques can be used that deal directly with this IOI variation, removing the assumption of regular temporal spacing, and they may produce distinct output features; these are more widely used in spatial autocorrelation models. Conversely, BANG can quantise output IOIs to comply with metrical structures, or modify them through a well-formed rhythm generator system MeanTimes (Milne & Dean, 2016).
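One simple way of quantising output IOIs to a metrical grid is sketched below in Python. It is an assumption-laden illustration rather than BANG's MAX implementation or the MeanTimes system: the function name, the grid value and the decision to leave chord-forming IOIs (30 msec or less) untouched are all hypothetical.

```python
def quantise_iois(iois_ms, grid_ms, chord_max_ms=30):
    """Round each IOI to the nearest multiple of grid_ms (at least one step).

    IOIs at or below chord_max_ms are left alone so that chords survive
    quantisation (an assumption of this sketch).
    """
    out = []
    for ioi in iois_ms:
        if ioi <= chord_max_ms:
            out.append(ioi)
        else:
            steps = max(1, round(ioi / grid_ms))
            out.append(steps * grid_ms)
    return out


# Hypothetical IOIs quantised to a 125 msec grid (semiquavers at 120 bpm).
quantised = quantise_iois([130, 260, 480, 5], grid_ms=125)
```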

Simple extensions of the current system will gradually be implemented. For example, dynamic TSA models can also be constructed, which change coefficients (and sometimes predictors too) at different points in the piece. Control of outputs in part by external independent regressor time series (perhaps an audience input stream) is simple; and projecting a time series of perceived affect into a generative process on the basis of the time series model of its production in response to exogenous variables such as acoustic ones, is equally straightforward. The internal elaboration of fitness functions, and hence internal valuation of outputs would be of interest; and collaboration with other systems, notably IDyOM (and/or IDyOT) (Wiggins & Forth, 2015) is planned.

Turning to lower levels of the BANG system, there is the opportunity to use pitch-class as a derived variable (as in IDyOM). This concept can only apply if the tuning system in play involves a repeating identically subdivided interval such as an octave (e.g., in conventional equal temperament with twelve subdivisions per octave), or an octave and a fifth (Bohlen, 1978). This may be particularly useful in elucidating and integrating the symbolic structures of materials generated simultaneously in different tuning systems. The nature of the TSA modelling can also be widened. For example, the order of the VAR models could be permitted to increase (at the cost of longer computational delay, though as noted this can often be overcome by use of the Yule-Walker algorithm). Stronger and principled data-driven modelling constraints could equally be permitted: these would allow some of the component predictor coefficients of a VAR model (for example lag 2 of the IOI) to be set to 0, while retaining the other lag 2 predictors. Indeed, VAR can be used to model pitch without reference to prior pitch sequences, and correspondingly in modelling the other generated parameters.
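Deriving pitch-class from a scale-step number is a one-line operation once the number of subdivisions of the repeating interval is known, as the following Python sketch shows. The function name is hypothetical, and the value of 13 steps per octave-and-a-fifth for the Bohlen tuning is supplied here as an assumption about that system rather than taken from the text above.

```python
def pitch_class(step, divisions=12):
    """Position of a scale step within the repeating interval."""
    return step % divisions


# Twelve-tone equal temperament: MIDI 61 and 73 share a pitch class.
twelve_tet = [pitch_class(n) for n in (60, 61, 73)]

# A 13-step division of the octave and a fifth (assumed value, see lead-in).
thirteen_step = [pitch_class(n, divisions=13) for n in (0, 14, 27)]
```

Because the derived variable depends only on `divisions`, the same function could serve when materials in two tuning systems are generated simultaneously.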

Whereas IDyOM commonly uses both a short term (memory) model (based solely on the piece in question) and a long-term model (based on knowledge of a corpus or related work), BANG normally operates solely on the current piece, specifically primarily on the last 200 events. However, the memory stores in BANG, and the possibility of using larger accumulations of keyboard outputs than from a single performance or performer, mean that cross-sectional techniques of time series analysis (CSTSA) could be developed in future (again consuming considerably more computational time). CSTSA has been used in recent publications on perceived affect in a range of diverse works: it is essentially a form of mixed effects analysis of time series (Dean et al., 2014b; 2014c). Thus a set of pieces can be treated as items within CSTSA, potentially allowing new hybridities of composition and improvisation.

Let us turn finally to the possibilities for evaluation of interactive music systems (IMS), particularly BANG. For this purpose we consider an interaction by a single performer with a keyboard and with the BANG interface, and simultaneously the provision of MIDI-data streams from the keyboard to BANG. So far most published interactive music systems have not been subjected to any systematic evaluation, and this is currently true of BANG. It probably passes the secondary “test” in which a work declared as creative is functional within the professional artistic community, “under terms of engagement usually reserved for people” (Colton & Wiggins, 2012), as with Pachet’s Continuator (Pachet, 2003). The main bases for this claim in the case of the Continuator seem to be that users (children, students, performers) enjoy it, and that listeners cannot readily distinguish when and if it is the human and/or the Continuator which are sounding. In addition, children seem to be more attentive to some tasks involving it than to alternatives (Addessi & Pachet, 2004). Correspondingly, BANG is already used in professional performance and at least passes muster with both its hands-on users (such as the author) and similarly with other collaborating performers.

However, this is no more than the most preliminary form of evaluation. What might be done to pursue this in some depth? In my earlier work on interactive music systems, what I called hyperimprovisation (Dean, 2003), I argued that critical to evaluation would indeed be performers, but also others expert in the form(s) of music in question. Developing this in the context of free improvisation IMS, and reviewing the available alternatives, Linson et al. (2012) argue primarily for qualitative assessment by idiom experts, indicating that it is “especially relevant for determining whether or not a player-paradigm system itself performs at the level of a human expert”. On the other hand, a post-human aspect to an IMS is of interest, and this could not be considered in relation to human “levels”. For related reasons, a pure Turing test is not apt here.

In the broader context of free improvisation at large, it has been shown that the consensual assessment technique using experts in the field can be effective in ranking preferences (Eisenberg & Thompson, 2003). In this study, there were 10 expert assessors and 16 keyboard improvisations, one by each participant, who had all played the piano for a minimum of five years but had varied improvisation experience. They improvised at the keyboard in response to a one minute excerpt of Prokofiev’s Romeo and Juliet “in any way” they chose; no information about the kinds of music they produced is given. Inter-rater agreement was statistically acceptable, and much of the variance in the measured preferences could be explained on the basis of complexity, creativity, and technical goodness as perceived by the experts (each scored separately).

In contrast, potentially less subjective aspects can be evaluated too, with reference to distinguishing human from machine contributions. An interesting case is the study of an algorithmic machine drum and bass generator, where satisfactory examples of both human and algorithmic drum patterns were obtained as judged by a pre-trained multi-layer perceptron critic, and then assessed by 19 human listeners, in this case not especially expert in drum and bass (Pearce & Wiggins, 2001). In three separate experiments, the appropriately briefed listeners considered whether the patterns were human or machine generated, and then which style each belonged to, amongst “drum and bass”, “techno” and “other”. The comparison of results with known statistics of inputs was used to show for example that there were perceived differences between the human and algorithmic patterns, and that the algorithmic patterns were not perceived as belonging to the appropriate style.

We can synthesise these types of evaluation and, taking account of the fact that BANG is not style-bound but does normally depend on ongoing user keyboard sounds and interface interactions, propose a future evaluation scheme for this situation. The essence of the proposal is that several professional keyboard improvisers familiar with BANG (or other qualitatively similar IMS) would perform several improvisations, and the outputs of the played keyboard and BANG would be recorded separately (this is abbreviated as the K(i)B, keyboard-interactive-BANG, condition). Each performance would be required to last between 115 and 125 seconds (not an unreasonable precision to demand of a professional used to recording-studio conditions). The improvisers would then perform a solo keyboard piece of the same length, after which they would improvise a second keyboard part with it (creating the KK condition). Again both keyboard results would be recorded separately. The potentially informative aspect of the design is that listeners would evaluate not only the complete K(i)B and KK performances, but also counterparts in which K(i) and B recordings are recombined, so that what is heard was not played simultaneously (and is in some sense unconnected). Similarly they would hear both original and recombined KK performances. There would be both a naïve and an expert group of evaluators, whose data would be treated as distinct. Participants would evaluate, in a between-participants manner (to avoid multiple demands, such as liking and complexity, becoming conflated), the complexity, technical goodness, and liking for the target items of the types described. A mixed effects linear analysis would permit the discrimination of impacts of K(i) vs. K, and real vs. recombined performance. Furthermore, the possible influence of complexity that might be a consequence of comparing K with KK or K(i) with K(i)B performance would be overcome. It is intended to undertake such an evaluation, hopefully at the point at which the intended TSA and IDyO(M/T) modules are both available in the performance system.
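To make the recombination element of this proposed design concrete, the following Python fragment is a minimal sketch of how the stimulus list for one condition might be assembled. It is illustrative only and is not part of BANG: the function name, the track labels "K1" and "K2" for the two keyboard parts of the KK condition, and the rule of pairing each first track with the second track of the next take in the list are all assumptions introduced here, since the proposal does not specify whether recombination should occur within or across performers.

```python
from itertools import product


def build_stimuli(performers, takes_per_performer, condition):
    """Return original and recombined two-track stimuli for one condition.

    Hypothetical helper: the labels and the pairing rule are assumptions,
    not a description of an implemented procedure.
    """
    if condition == "K(i)B":
        first, second = "K(i)", "B"   # interactive keyboard track + BANG output
    elif condition == "KK":
        first, second = "K1", "K2"    # solo keyboard + overdubbed keyboard
    else:
        raise ValueError("condition must be 'K(i)B' or 'KK'")

    # One take = one recorded performance, identified by (performer, take number).
    takes = list(product(performers, range(1, takes_per_performer + 1)))
    if len(takes) < 2:
        raise ValueError("recombination needs at least two takes")

    stimuli = []
    for idx, take in enumerate(takes):
        # Original stimulus: both tracks come from the same performance.
        stimuli.append({"condition": condition, "kind": "original",
                        first: take, second: take})
        # Recombined stimulus: the second track is taken from the next take
        # in the list (cyclic shift), so it was never played with the first.
        other = takes[(idx + 1) % len(takes)]
        stimuli.append({"condition": condition, "kind": "recombined",
                        first: take, second: other})
    return stimuli
```

Calling build_stimuli(["P1", "P2", "P3"], 2, "K(i)B") would, under these assumptions, yield six original and six recombined items; the same call with "KK" gives the matching keyboard-only set. The cyclic shift is chosen only because it guarantees that no recombined item pairs a track with its own partner; a randomised derangement, or one restricted to takes by different performers, would serve equally well.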

A text file provides more detail on the algorithms, including R code for the analyses and simulations and the Pitch data series which is modelled.

A Quicktime movie illustrates BANG at work together with the author, a professional improviser, playing a MIDI-keyboard and controlling the patch. All the played and generated sounds are realised as physical synthesis equal-tempered piano sounds, using PianoTeq 4. The movie is made more for the purpose of demonstration than as a musical item, and so the R code is shown in operation, and Garage Band is used half-way through to notate the ongoing events: those which remain white are those played on the keyboard, while most events heard are generated by BANG. Commonly BANG is used in ensemble improvisation, as part of the computer-interactive aspect of the author’s contribution, alongside acoustic piano playing. The version of the BANG code used is pre-final, and some of the comments and plans in it (seen in the video) have since been implemented, as noted in the article itself.

There have been no financial benefits from the application of this research and none is planned.

This work on music generation using time series analysis models was initiated by the author and Geraint Wiggins (QMUL), during reciprocal laboratory visits, and is intended to provide functionality that can cooperate with live use of information theoretic music analysis and generation by IDyOM and its developments (discussed within). Discussion with colleagues at both MARCS and QMUL, in particular Jamie Forth, Andy Milne, and Marcus Pearce, is gratefully acknowledged. The research was conducted at MARCS Institute and at The Centre for Digital Music and the Computational Creativity Laboratory, Queen Mary University of London, where Rodger T. Dean is a visiting researcher.
